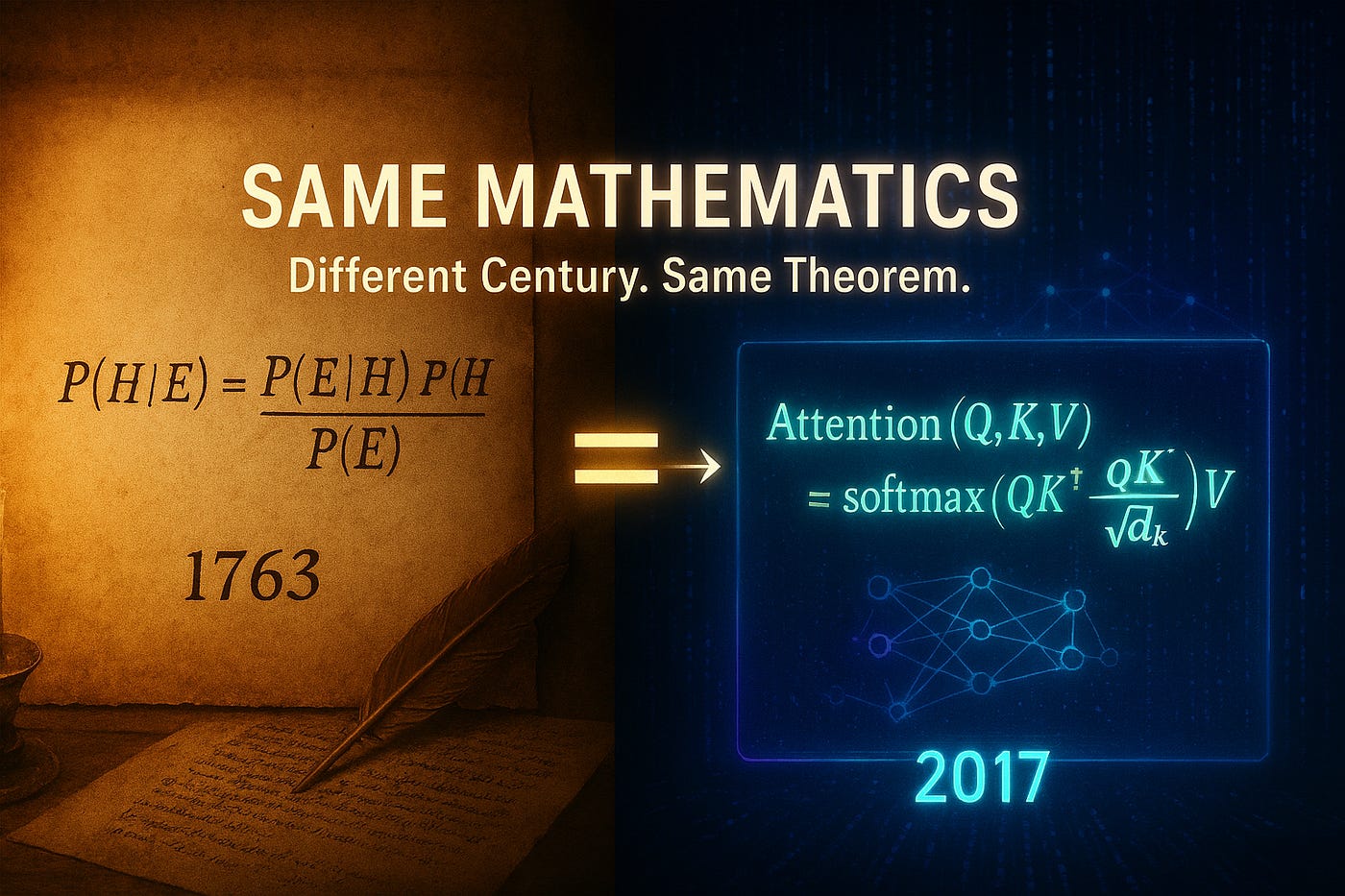

Your Decoder Is Having a Crisis of Faith: How Transformer Attention Is Actually Bayesian Inference

Every transformer translation you’ve ever seen can be read as a Bayesian belief update wrapped in matrix multiplication. Here’s the math, worked through on a single sentence.


There’s a moment in every transformer that nobody talks about.

The decoder thinks it knows what word comes next. It’s confident. It’s been trained on billions of examples. It has strong opinions.

Then the encoder speaks. And everything the decoder believed? Gets updated. Recalibrated. Sometimes, it is completely overturned.

This isn’t intelligence. This isn’t magic. This is Bayesian inference wearing a mask called “attention.”

Let me prove it to you with one sentence: “The bank was steep.”

The Decoder’s Confident Mistake

Your decoder starts generating a translation. It sees “The bank…”

Its prior belief screams: “FINANCIAL INSTITUTION!”

Why? Because in its training data (the counts here are illustrative):

“Bank” + “financial context” appears 10 million times

“Bank” + “river context” appears 50,000 times

The prior probability is overwhelming:

P(finance | “bank”) = 0.995

P(river | “bank”) = 0.005

The decoder is 99.5% sure that this is about money. It’s already preparing financial vocabulary: compte, argent, crédit.
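As a rough sketch of where those two numbers come from: the prior is just each sense's share of the illustrative counts above. The dictionary keys and the helper name `prior_from_counts` are made up for this example, and a real decoder never stores explicit counts like this.

```python
# Illustrative counts quoted in the text, not real corpus statistics.
counts = {"finance": 10_000_000, "river": 50_000}


def prior_from_counts(counts):
    """Turn raw sense counts into a prior probability for each sense."""
    total = sum(counts.values())
    return {sense: n / total for sense, n in counts.items()}


prior = prior_from_counts(counts)

for sense, p in prior.items():
    print(f"P({sense} | 'bank') = {p:.3f}")
```

Rounded to three decimals, this reproduces the 0.995 and 0.005 used above.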

Then the encoder says: “steep.”

Everything changes.

The Encoder’s Evidence

The encoder has been quietly processing the full sentence. It knows something the decoder doesn’t.

It computes contextual embeddings for every word. And when it sees “steep” after “bank,” it hands over a devastating piece of evidence: how likely “steep” is under each reading.

P(steep | finance bank) = 0.001

(Financial institutions don’t have slopes)

P(steep | river bank) = 0.95

(Riverbanks absolutely have slopes)

This is where the update happens. This is cross-attention: the moment the decoder’s prior belief meets the encoder’s evidence.
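To make that meeting concrete, here is a minimal sketch that multiplies the prior by the encoder's likelihoods and renormalises (Bayes' rule), then repeats the same calculation as a softmax over log-prior plus log-likelihood. The function names are hypothetical and the numbers are the toy values above. Real cross-attention works on learned query-key scores rather than explicit probabilities, so treat this as an analogy, not the actual computation inside a transformer.

```python
import math

# Toy values from the text: the decoder's prior and the encoder's likelihoods.
prior = {"finance": 0.995, "river": 0.005}
likelihood = {"finance": 0.001, "river": 0.95}  # P(steep | sense)


def bayes_update(prior, likelihood):
    """Posterior is proportional to prior times likelihood, then normalised."""
    unnormalised = {s: prior[s] * likelihood[s] for s in prior}
    evidence = sum(unnormalised.values())
    return {s: v / evidence for s, v in unnormalised.items()}


def softmax_update(prior, likelihood):
    """Same update, written as a softmax over log-prior + log-likelihood."""
    logits = {s: math.log(prior[s]) + math.log(likelihood[s]) for s in prior}
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {s: math.exp(v - top) for s, v in logits.items()}
    z = sum(exps.values())
    return {s: e / z for s, e in exps.items()}


posterior = bayes_update(prior, likelihood)
posterior_softmax = softmax_update(prior, likelihood)

for sense in prior:
    print(f"P({sense} | 'bank', 'steep') = {posterior[sense]:.3f}")
```

With these toy numbers the river reading climbs from 0.005 to roughly 0.83, and the softmax form gives the same answer as the direct Bayes calculation.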