Top Important LLMs Papers for the Week from 24/06 to 30/06

Stay Updated with Recent Large Language Models Research

Large language models (LLMs) have advanced rapidly in recent years. As new generations of models are developed, researchers and engineers need to stay informed on the latest progress.

This article summarizes some of the most important LLM papers published during the Fourth Week of June 2024. The papers cover various topics shaping the next generation of language models, from model optimization and scaling to reasoning, benchmarking, and enhancing performance.

Keeping up with novel LLM research across these domains will help guide continued progress toward models that are more capable, robust, and aligned with human values.

Table of Contents:

LLM Progress & Benchmarking

LLM Training, Evaluation & Inference

LLM Quantization & Alignment

LLM Reasoning

Attention Models

LLM Safety & Alignment

My New E-Book: LLM Roadmap from Beginner to Advanced Level

I am pleased to announce that I have published my new ebook LLM Roadmap from Beginner to Advanced Level. This ebook will provide all the resources you need to start your journey towards mastering LLMs. The content of the book covers the following topics:

1. LLM Progress & Benchmarking

1.1. BigCodeBench: Benchmarking Code Generation with Diverse Function Calls and Complex Instructions

Automated software engineering has been greatly empowered by the recent advances in Large Language Models (LLMs) for programming. While current benchmarks have shown that LLMs can perform various software engineering tasks like human developers, the majority of their evaluations are limited to short and self-contained algorithmic tasks.

Solving challenging and practical programming tasks requires the capability of utilizing diverse function calls as tools to implement functionalities like data analysis and web development efficiently. In addition, using multiple tools to solve a task needs compositional reasoning by accurately understanding complex instructions.

Fulfilling both of these characteristics can pose a great challenge for LLMs. To assess how well LLMs can solve challenging and practical programming tasks, we introduce Bench, a benchmark that challenges LLMs to invoke multiple function calls as tools from 139 libraries and 7 domains for 1,140 fine-grained programming tasks.

To evaluate LLMs rigorously, each programming task encompasses 5.6 test cases with an average branch coverage of 99%. In addition, we propose a natural-language-oriented variant of Bench, Benchi, that automatically transforms the original docstrings into short instructions only with essential information.

Our extensive evaluation of 60 LLMs shows that LLMs are not yet capable of following complex instructions to use function calls precisely, with scores up to 60%, significantly lower than the human performance of 97%. The results underscore the need for further advancements in this area.

1.2. Unlocking Continual Learning Abilities in Language Models

Language models (LMs) exhibit impressive performance and generalization capabilities. However, LMs struggle with the persistent challenge of catastrophic forgetting, which undermines their long-term sustainability in continual learning (CL).

Existing approaches usually address the issue by incorporating old task data or task-wise inductive bias into LMs. However, old data and accurate task information are often unavailable or costly to collect, hindering the availability of current CL approaches for LMs.

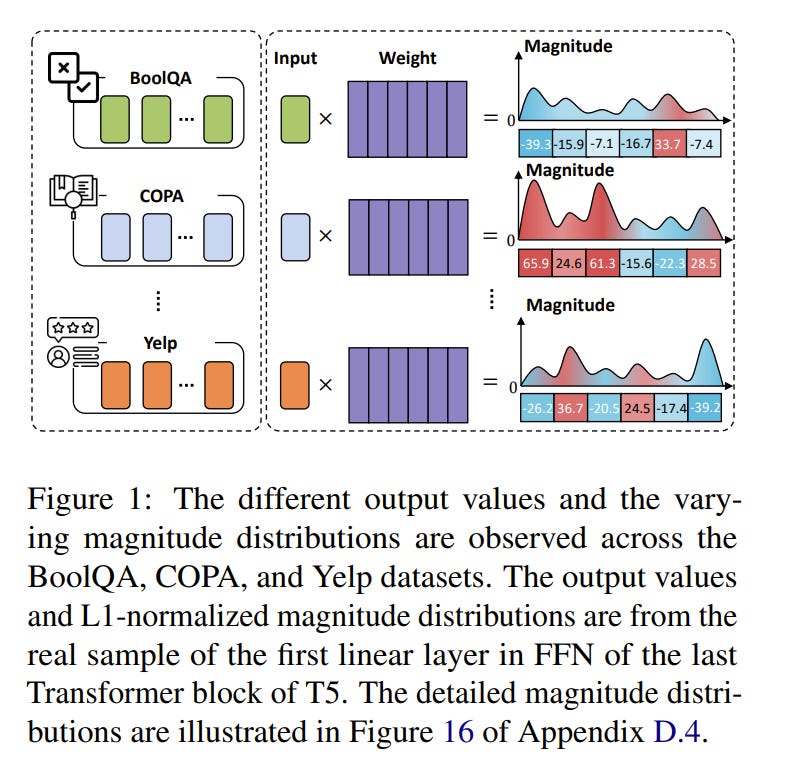

To address this limitation, we introduce MIGU (MagnItude-based Gradient Updating for continual learning), a rehearsal-free and task-label-free method that only updates the model parameters with large magnitudes of output in LMs’ linear layers.

MIGU is based on our observation that the L1-normalized magnitude distribution of the output in LMs’ linear layers is different when the LM models deal with different task data. By imposing this simple constraint on the gradient update process, we can leverage the inherent behaviors of LMs, thereby unlocking their innate CL abilities.

Our experiments demonstrate that MIGU is universally applicable to all three LM architectures (T5, RoBERTa, and Llama2), delivering state-of-the-art or on-par performance across continual finetuning and continual pre-training settings on four CL benchmarks.

For example, MIGU brings a 15.2% average accuracy improvement over conventional parameter-efficient finetuning baselines in a 15-task CL benchmark. MIGU can also seamlessly integrate with all three existing CL types to further enhance performance.

1.3. Large Language Models Assume People Are More Rational than We Really Are

In order for AI systems to communicate effectively with people, they must understand how we make decisions. However, people’s decisions are not always rational, so the implicit internal models of human decision-making in Large Language Models (LLMs) must account for this.

Previous empirical evidence seems to suggest that these implicit models are accurate — LLMs offer believable proxies of human behavior, acting how we expect humans would in everyday interactions.

However, by comparing LLM behavior and predictions to a large dataset of human decisions, we find that this is actually not the case: when both simulating and predicting people’s choices, a suite of cutting-edge LLMs (GPT-4o & 4-Turbo, Llama-3–8B & 70B, Claude 3 Opus) assume that people are more rational than we really are.

Specifically, these models deviate from human behavior and align more closely with a classic model of rational choice — expected value theory. Interestingly, people also tend to assume that other people are rational when interpreting their behavior.

As a consequence, when we compare the inferences that LLMs and people draw from the decisions of others using another psychological dataset, we find that these inferences are highly correlated. Thus, the implicit decision-making models of LLMs appear to be aligned with the human expectation that other people will act rationally, rather than with how people actually act.

1.4. MoA: Mixture of Sparse Attention for Automatic Large Language Model Compression

Sparse attention can effectively mitigate the significant memory and throughput demands of Large Language Models (LLMs) in long contexts. Existing methods typically employ a uniform sparse attention mask, applying the same sparse pattern across different attention heads and input lengths.

However, this uniform approach fails to capture the diverse attention patterns inherent in LLMs, ignoring their distinct accuracy-latency trade-offs. To address this challenge, we propose the Mixture of Attention (MoA), which automatically tailors distinct sparse attention configurations to different heads and layers.

MoA constructs and navigates a search space of various attention patterns and their scaling rules relative to input sequence lengths. It profiles the model, evaluates potential configurations, and pinpoints the optimal sparse attention compression plan.

MoA adapts to varying input sizes, revealing that some attention heads expand their focus to accommodate longer sequences, while other heads consistently concentrate on fixed-length local contexts.

Experiments show that MoA increases the effective context length by 3.9 times with the same average attention span, boosting retrieval accuracy by 1.5–7.1 times over the uniform-attention baseline across Vicuna-7B, Vicuna-13B, and Llama3–8B models.

Moreover, MoA narrows the capability gaps between sparse and dense models, reducing the maximum relative performance drop from 9%-36% to within 5% across two long-context understanding benchmarks.

MoA achieves a 1.2–1.4 times GPU memory reduction and boosts decode throughput by 5.5–6.7 times for 7B and 13B dense models on a single GPU, with minimal impact on performance.

1.5. Semantic Entropy Probes: Robust and Cheap Hallucination Detection in LLMs

We propose semantic entropy probes (SEPs), a cheap and reliable method for uncertainty quantification in Large Language Models (LLMs). Hallucinations, which are plausible-sounding but factually incorrect and arbitrary model generations, present a major challenge to the practical adoption of LLMs.

Recent work by Farquhar et al. (2024) proposes semantic entropy (SE), which can detect hallucinations by estimating uncertainty in the space semantic meaning for a set of model generations. However, the 5-to-10-fold increase in computation cost associated with SE computation hinders practical adoption.

To address this, we propose SEPs, which directly approximate SE from the hidden states of a single generation. SEPs are simple to train and do not require sampling multiple model generations at test time, reducing the overhead of semantic uncertainty quantification to almost zero.

We show that SEPs retain high performance for hallucination detection and generalize better to out-of-distribution data than previous probing methods that directly predict model accuracy.

Our results across models and tasks suggest that model hidden states capture SE, and our ablation studies give further insights into the token positions and model layers for which this is the case.

1.6. A Closer Look into Mixture-of-Experts in Large Language Models

Mixture-of-experts (MoE) is gaining increasing attention due to its unique properties and remarkable performance, especially for language tasks. By sparsely activating a subset of parameters for each token, MoE architecture could increase the model size without sacrificing computational efficiency, achieving a better trade-off between performance and training costs.

However, the underlying mechanism of MoE still lacks further exploration, and its modularization degree remains questionable. In this paper, we make an initial attempt to understand the inner workings of MoE-based large language models.

Concretely, we comprehensively study the parametric and behavioral features of three recent MoE-based models and reveal some intriguing observations, including:

Neurons act like fine-grained experts.

The router of MoE usually selects experts with larger output norms.

The expert diversity increases as the layer increases, while the last layer is an outlier.

Based on the observations, we also provide suggestions for a broad spectrum of MoE practitioners, such as router design and expert allocation. We hope this work could shed light on future research on the MoE framework and other modular architectures.

1.7. Leave No Document Behind Benchmarking Long-Context LLMs with Extended Multi-Doc QA

Long-context modeling capabilities have garnered widespread attention, leading to the emergence of Large Language Models (LLMs) with ultra-context windows. Meanwhile, benchmarks for evaluating long-context LLMs are gradually catching up.

However, existing benchmarks employ irrelevant noise texts to artificially extend the length of test cases, diverging from the real-world scenarios of long-context applications. To bridge this gap, we propose a novel long-context benchmark, Loong, aligning with realistic scenarios through extended multi-document question answering (QA).

Unlike typical document QA, in Loong’s test cases, each document is relevant to the final answer, ignoring any document will lead to the failure of the answer. Furthermore, Loong introduces four types of tasks with a range of context lengths: Spotlight Locating, Comparison, Clustering, and Chain of Reasoning, to facilitate a more realistic and comprehensive evaluation of long-context understanding.

Extensive experiments indicate that existing long-context language models still exhibit considerable potential for enhancement. Retrieval augmented generation (RAG) achieves poor performance, demonstrating that Loong can reliably assess the model’s long-context modeling capabilities.

1.8. LongIns: A Challenging Long-context Instruction-based Exam for LLMs

The long-context capabilities of large language models (LLMs) have been a hot topic in recent years. To evaluate the performance of LLMs in different scenarios, various assessment benchmarks have emerged.

However, as most of these benchmarks focus on identifying key information to answer questions, which mainly requires the retrieval ability of LLMs, these benchmarks can partially represent the reasoning performance of LLMs from large amounts of information.

Meanwhile, although LLMs often claim to have context windows of 32k, 128k, 200k, or even longer, these benchmarks fail to reveal the actual supported length of these LLMs. To address these issues, we propose the LongIns benchmark dataset, a challenging long-context instruction-based exam for LLMs, which is built based on the existing instruction datasets.

Specifically, in our LongIns, we introduce three evaluation settings: Global Instruction & Single Task (GIST), Local Instruction & Single Task (LIST), and Local Instruction & Multiple Tasks (LIMT). Based on LongIns, we perform comprehensive evaluations on existing LLMs and have the following important findings:

The top-performing GPT-4 with 128k context length performs poorly on the evaluation context window of 16k in our LongIns.

For the multi-hop reasoning ability of many existing LLMs, significant efforts are still needed under short context windows (less than 4k).

2. Retrieval Augmented Generation (RAG)

2.1. LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs

In the traditional RAG framework, the basic retrieval units are normally short. Common retrievers like DPR normally work with 100-word Wikipedia paragraphs. Such a design forces the retriever to search over a large corpus to find the `needle’ unit.

In contrast, the readers only need to extract answers from the short retrieved units. Such an imbalanced `heavy’ retriever and `light’ reader design can lead to sub-optimal performance. To alleviate the imbalance, we propose a new framework LongRAG, consisting of a `long retriever’ and a `long reader’.

LongRAG processes the entire Wikipedia into 4K token units, which is 30 times longer than before. By increasing the unit size, we significantly reduce the total units from 22M to 700K. This significantly lowers the burden of retriever, which leads to a remarkable retrieval score: answer recall@1=71% on NQ (previously 52%) and answer recall@2=72% (previously 47%) on HotpotQA (full-wiki).

Then we feed the top-k retrieved units (approx 30K tokens) to an existing long-context LLM to perform zero-shot answer extraction. Without requiring any training, LongRAG achieves an EM of 62.7% on NQ, which is the best-known result.

LongRAG also achieves 64.3% on HotpotQA (full-wiki), which is on par with the SoTA model. Our study offers insights into the future roadmap for combining RAG with long-context LLMs.

2.2. Towards Retrieval Augmented Generation over Large Video Libraries

Video content creators need efficient tools to repurpose content, a task that often requires complex manual or automated searches. Crafting a new video from large video libraries remains a challenge.

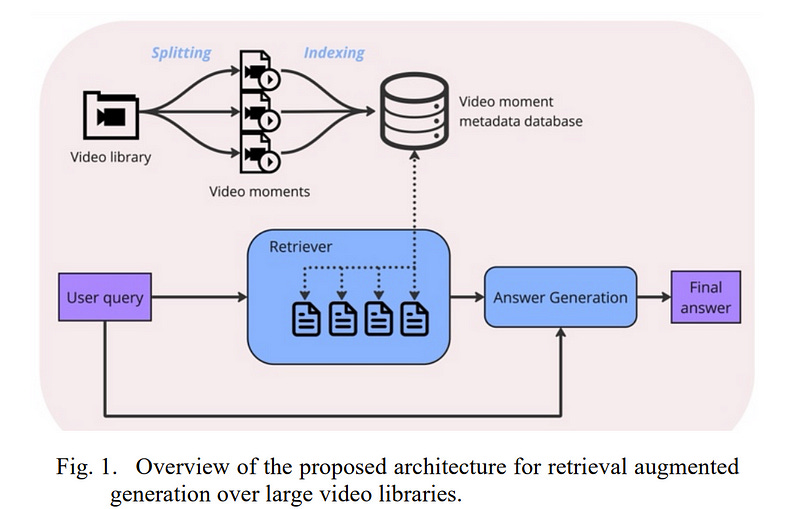

In this paper, we introduce the task of Video Library Question Answering (VLQA) through an interoperable architecture that applies Retrieval Augmented Generation (RAG) to video libraries.

We propose a system that uses large language models (LLMs) to generate search queries, retrieving relevant video moments indexed by speech and visual metadata.

An answer generation module then integrates user queries with this metadata to produce responses with specific video timestamps. This approach shows promise in multimedia content retrieval and AI-assisted video content creation.

2.3. Evaluating RAG-Fusion with RAGElo: an Automated Elo-based Framework

Challenges in the automated evaluation of Retrieval-Augmented Generation (RAG) Question-Answering (QA) systems include hallucination problems in domain-specific knowledge and the lack of gold standard benchmarks for company internal tasks.

This results in difficulties in evaluating RAG variations, like RAG-Fusion (RAGF), in the context of a product QA task at Infineon Technologies. To solve these problems, we propose a comprehensive evaluation framework, that leverages Large Language Models (LLMs) to generate large datasets of synthetic queries based on real user queries and in-domain documents, uses LLM-as-a-judge to rate retrieved documents and answers, evaluates the quality of answers, and ranks different variants of Retrieval-Augmented Generation (RAG) agents with RAGElo’s automated Elo-based competition.

LLM-as-a-judge rating of a random sample of synthetic queries shows a moderate, positive correlation with domain expert scoring in relevance, accuracy, completeness, and precision. While RAGF outperformed RAG in Elo score, a significance analysis against expert annotations also shows that RAGF significantly outperforms RAG in completeness, but underperforms in precision.

In addition, Infineon’s RAGF assistant demonstrated slightly higher performance in document relevance based on MRR@5 scores. We find that RAGElo positively aligns with the preferences of human annotators, though due caution is still required. Finally, RAGF’s approach leads to more complete answers based on expert annotations and better answers overall based on RAGElo’s evaluation criteria.

2.4. A Tale of Trust and Accuracy: Base vs. Instruct LLMs in RAG Systems

Retrieval Augmented Generation (RAG) represents a significant advancement in artificial intelligence combining a retrieval phase with a generative phase, with the latter typically being powered by large language models (LLMs).

The current common practices in RAG involve using “instructed” LLMs, which are fine-tuned with supervised training to enhance their ability to follow instructions and are aligned with human preferences using state-of-the-art techniques.

Contrary to popular belief, our study demonstrates that base models outperform their instructed counterparts in RAG tasks by 20% on average under our experimental settings. This finding challenges the prevailing assumptions about the superiority of instructed LLMs in RAG applications.

Further investigations reveal a more nuanced situation, questioning fundamental aspects of RAG and suggesting the need for broader discussions on the topic; or, as Fromm would have it, “Seldom is a glance at the statistics enough to understand the meaning of the figures”.

2.5. Understand What LLM Needs: Dual Preference Alignment for Retrieval-Augmented Generation

Retrieval-augmented generation (RAG) has demonstrated effectiveness in mitigating the hallucination problem of large language models (LLMs). However, the difficulty of aligning the retriever with the diverse LLMs’ knowledge preferences inevitably poses an inevitable challenge in developing a reliable RAG system.

To address this issue, we propose DPA-RAG, a universal framework designed to align diverse knowledge preferences within RAG systems. Specifically, we initially introduce a preference knowledge construction pipeline and incorporate five novel query augmentation strategies to alleviate preference data scarcity.

Based on preference data, DPA-RAG accomplishes both external and internal preference alignment:

It jointly integrates pair-wise, point-wise, and contrastive preference alignment abilities into the reranker, achieving external preference alignment among RAG components.

It further introduces a pre-aligned stage before vanilla Supervised Fine-tuning (SFT), enabling LLMs to implicitly capture knowledge aligned with their reasoning preferences, achieving LLMs’ internal alignment.

Experimental results across four knowledge-intensive QA datasets demonstrate that DPA-RAG outperforms all baselines and seamlessly integrates both black-box and open-sourced LLM readers. Further qualitative analysis and discussions also provide empirical guidance for achieving reliable RAG systems.

3. LLM Fine-Tuning

3.1. Dataset Size Recovery from LoRA Weights

Model inversion and membership inference attacks aim to reconstruct and verify the data on which a model was trained. However, they are not guaranteed to find all training samples as they do not know the size of the training set.

In this paper, we introduce a new task: dataset size recovery, that aims to determine the number of samples used to train a model, directly from its weights. We then propose DSiRe, a method for recovering the number of images used to fine-tune a model, in the common case where fine-tuning uses LoRA.

We discover that both the norm and the spectrum of the LoRA matrices are closely linked to the fine-tuning dataset size; we leverage this finding to propose a simple yet effective prediction algorithm.

To evaluate dataset size recovery of LoRA weights, we develop and release a new benchmark, LoRA-WiSE, consisting of over 25000 weight snapshots from more than 2000 diverse LoRA fine-tuned models. Our best classifier can predict the number of fine-tuning images with a mean absolute error of 0.36 images, establishing the feasibility of this attack.

3.2. Can Few Shots Work in a Long Context? Recycling the Context to Generate Demonstrations

Despite recent advancements in Large Language Models (LLMs), their performance on tasks involving long contexts remains sub-optimal. In-context learning (ICL) with few-shot examples may be an appealing solution to enhance LLM performance in this scenario.

However, naively adding ICL examples with long context introduces challenges, including substantial token overhead added for each few-shot example and context mismatch between the demonstrations and the target query. In this work, we propose to automatically generate few-shot examples for long-context QA tasks by recycling contexts.

Specifically, given a long input context (1–3k tokens) and a query, we generate additional query-output pairs from the given context as few-shot examples, while introducing the context only once. This ensures that the demonstrations are leveraging the same context as the target query while only adding a small number of tokens to the prompt.

We further enhance each demonstration by instructing the model to explicitly identify the relevant paragraphs before the answer, which improves performance while providing fine-grained attribution to the answer source.

We apply our method on multiple LLMs and obtain substantial improvements (+23\% on average across models) on various QA datasets with long context, especially when the answer lies within the middle of the context. Surprisingly, despite introducing only single-hop ICL examples, LLMs also successfully generalize to multi-hop long-context QA using our approach.

4. LLM Reasoning

4.1. Two Giraffes in a Dirt Field: Using Game Play to Investigate Situation Modelling in Large Multimodal Models

While the situation has improved for text-only models, it again seems to be the case currently that multimodal (text and image) models develop faster than ways to evaluate them.

In this paper, we bring a recently developed evaluation paradigm from text models to multimodal models, namely evaluation through the goal-oriented game (self) play, complementing reference-based and preference-based evaluation.

Specifically, we define games that challenge a model’s capability to represent a situation from visual information and align such representations through dialogue.

We find that the largest closed models perform rather well on the games that we define, while even the best open-weight models struggle with them.

On further analysis, we find that the exceptional deep captioning capabilities of the largest models drive some of the performance. There is still room to grow for both kinds of models, ensuring the continued relevance of the benchmark.

4.2. Math-LLaVA: Bootstrapping Mathematical Reasoning for Multimodal Large Language Models

Large language models (LLMs) have demonstrated impressive reasoning capabilities, particularly in textual mathematical problem-solving.

However, existing open-source image instruction fine-tuning datasets, containing limited question-answer pairs per image, do not fully exploit visual information to enhance the multimodal mathematical reasoning capabilities of Multimodal LLMs (MLLMs).

To bridge this gap, we address the lack of high-quality, diverse multimodal mathematical datasets by collecting 40K high-quality images with question-answer pairs from 24 existing datasets and synthesizing 320K new pairs, creating the MathV360K dataset, which enhances both the breadth and depth of multimodal mathematical questions.

We introduce Math-LLaVA, a LLaVA-1.5-based model fine-tuned with MathV360K. This novel approach significantly improves the multimodal mathematical reasoning capabilities of LLaVA-1.5, achieving a 19-point increase and comparable performance to GPT-4V on MathVista’s minutest split.

Furthermore, Math-LLaVA demonstrates enhanced generalizability, showing substantial improvements on the MMMU benchmark. Our research highlights the importance of dataset diversity and synthesis in advancing MLLMs’ mathematical reasoning abilities.

5. LLM Safety & Alignment

5.1. Judging the Judges: Evaluating Alignment and Vulnerabilities in LLMs-as-Judges

Offering a promising solution to the scalability challenges associated with human evaluation, the LLM-as-a-judge paradigm is rapidly gaining traction as an approach to evaluating large language models (LLMs).

However, there are still many open questions about the strengths and weaknesses of this paradigm, and what potential biases it may hold. In this paper, we present a comprehensive study of the performance of various LLMs acting as judges.

We leverage TriviaQA as a benchmark for assessing objective knowledge reasoning of LLMs and evaluate them alongside human annotations which we found to have a high inter-annotator agreement.

Our study includes 9 judge models and 9 exam taker models — both base and instruction-tuned. We assess the judge model’s alignment across different model sizes, families, and judge prompts.

Among other results, our research rediscovers the importance of using Cohen’s kappa as a metric of alignment as opposed to simple percent agreement, showing that judges with high percent agreement can still assign vastly different scores.

We find that both Llama-3 70B and GPT-4 Turbo have an excellent alignment with humans, but in terms of ranking exam taker models, they are outperformed by both JudgeLM-7B and the lexical judge Contains, which have up to 34 points lower human alignment.

Through error analysis and various other studies, including the effects of instruction length and leniency bias, we hope to provide valuable lessons for using LLMs as judges in the future.

5.2. Cross-Modality Safety Alignment

As Artificial General Intelligence (AGI) becomes increasingly integrated into various facets of human life, ensuring the safety and ethical alignment of such systems is paramount.

Previous studies primarily focus on single-modality threats, which may not suffice given the integrated and complex nature of cross-modality interactions. We introduce a novel safety alignment challenge called Safe Inputs but Unsafe Output (SIUO) to evaluate cross-modality safety alignment.

Specifically, it considers cases where single modalities are safe independently but could potentially lead to unsafe or unethical outputs when combined. To empirically investigate this problem, we developed the SIUO, a cross-modality benchmark encompassing 9 critical safety domains, such as self-harm, illegal activities, and privacy violations.

Our findings reveal substantial safety vulnerabilities in both closed- and open-source LVLMs, such as GPT-4V and LLaVA, underscoring the inadequacy of current models to reliably interpret and respond to complex, real-world scenarios.

5.3. Ruby Teaming: Improving Quality Diversity Search with Memory for Automated Red Teaming

We propose Ruby Teaming, a method that improves on Rainbow Teaming by including a memory cache as its third dimension. The memory dimension provides cues to the mutator to yield better-quality prompts, both in terms of attack success rate (ASR) and quality diversity.

The prompt archive generated by Ruby Teaming has an ASR of 74%, which is 20% higher than the baseline. In terms of quality diversity, Ruby Teaming outperforms Rainbow Teaming by 6% and 3% on Shannon’s Evenness Index (SEI) and Simpson’s Diversity Index (SDI), respectively.

5.4. Aligning Teacher with Student Preferences for Tailored Training Data Generation

Large Language Models (LLMs) have shown significant promise as copilots in various tasks. Local deployment of LLMs on edge devices is necessary when handling privacy-sensitive data or latency-sensitive tasks.

The computational constraints of such devices make direct deployment of powerful large-scale LLMs impractical, necessitating the Knowledge Distillation from large-scale models to lightweight models.

Lots of work has been done to elicit diversity and quality training examples from LLMs, but little attention has been paid to aligning teacher instructional content based on student preferences, akin to “responsive teaching” in pedagogy.

Thus, we propose ARTE, dubbed Aligning TeacheR with StudenT PreferencEs, a framework that aligns the teacher model with student preferences to generate tailored training examples for Knowledge Distillation.

Specifically, we elicit draft questions and rationales from the teacher model, then collect student preferences on these questions and rationales using students’ performance with in-context learning as a proxy, and finally align the teacher model with student preferences.

In the end, we repeat the first step with the aligned teacher model to elicit tailored training examples for the student model on the target task. Extensive experiments on academic benchmarks demonstrate the superiority of ARTE over existing instruction-tuning datasets distilled from powerful LLMs.

Moreover, we thoroughly investigate the generalization of ARTE, including the generalization of fine-tuned student models in reasoning ability and the generalization of aligned teacher models to generate tailored training data across tasks and students.

In summary, our contributions lie in proposing a novel framework for tailored training example generation, demonstrating its efficacy in experiments, and investigating the generalization of both student & aligned teacher models in ARTE.

5.5. WildTeaming at Scale: From In-the-Wild Jailbreaks to (Adversarially) Safer Language Models

We introduce WildTeaming, an automatic LLM safety red-teaming framework that mines in-the-wild user-chatbot interactions to discover 5.7K unique clusters of novel jailbreak tactics, and then composes multiple tactics for systematic exploration of novel jailbreaks.

Compared to prior work that performed red-teaming via recruited human workers, gradient-based optimization, or iterative revision with LLMs, our work investigates jailbreaks from chatbot users who were not specifically instructed to break the system.

WildTeaming reveals previously unidentified vulnerabilities of frontier LLMs, resulting in up to 4.6x more diverse and successful adversarial attacks compared to state-of-the-art jailbreak methods. While many datasets exist for jailbreak evaluation, very few open-source datasets exist for jailbreak training, as safety training data has been closed even when model weights are open.

With WildTeaming we create WildJailbreak, a large-scale open-source synthetic safety dataset with 262K vanilla (direct request) and adversarial (complex jailbreak) prompt-response pairs.

To mitigate exaggerated safety behaviors, WildJailbreak provides two contrastive types of queries:

Harmful queries (vanilla & adversarial)

Benign queries that resemble harmful queries in form but contain no harm.

As WildJailbreak considerably upgrades the quality and scale of existing safety resources, it uniquely enables us to examine the scaling effects of data and the interplay of data properties and model capabilities during safety training.

Through extensive experiments, we identify the training properties that enable an ideal balance of safety behaviors: appropriate safeguarding without over-refusal, effective handling of vanilla and adversarial queries, and minimal, if any, decrease in general capabilities. All components of WildJailbeak contribute to achieving balanced safety behaviors of models.

Are you looking to start a career in data science and AI and do not know how? I offer data science mentoring sessions and long-term career mentoring:

Mentoring sessions: https://lnkd.in/dXeg3KPW

Long-term mentoring: https://lnkd.in/dtdUYBrM

LongRAG is a new retrieval-augmented generation framework that focuses on addressing the workload imbalance between the retriever and the reader in traditional RAG frameworks.

Traditional RAG frameworks typically use short texts as retrieval units, such as paragraphs of around 100 words. This requires the retriever to search through a massive corpus for a 'needle in a haystack' (i.e., the exact short text unit containing the answer).

In contrast, the reader only needs to extract the answer from the retrieved short text units, resulting in a relatively lighter workload.

This imbalance, with a 'heavy' retriever and a 'light' reader, can lead to suboptimal performance.

To alleviate this imbalance, LongRAG introduces the concepts of a 'long retriever' and a 'long reader,' constructing the framework around retrieval units of 4,000 words.