Top Important LLM Papers for the Week from 20/11 to 26/11

Stay Updated with Recent Large Language Models Research

Large language models (LLMs) have advanced rapidly in recent years. As new generations of models are developed, it’s important for researchers and engineers to stay informed on the latest progress. This article summarizes some of the most important LLM papers published during the fourth week of November.

The papers cover various topics shaping the next generation of language models, from model optimization and scaling to reasoning, benchmarking, and enhancing performance. Keeping up with novel LLM research across these domains will help guide continued progress toward models that are more capable, robust, and aligned with human values.

Table of Contents:

LLM Progress & Benchmarking

LLM Training & Optimization

LLM Fine Tuning

LLM Reasoning

Responsible AI & LLM Ethics

Transformers & Attention Models

1. LLM Progress & Benchmarking

1.1. GAIA: a benchmark for General AI Assistants

This paper introduces GAIA, a benchmark for General AI Assistants that, if solved, would represent a milestone in AI research. GAIA proposes real-world questions that require a set of fundamental abilities such as reasoning, multi-modality handling, web browsing, and generally tool-use proficiency.

GAIA questions are conceptually simple for humans yet challenging for most advanced AIs: They showed that human respondents obtain 92\% vs. 15\% for GPT-4 equipped with plugins. This notable performance disparity contrasts with the recent trend of LLMs outperforming humans on tasks requiring professional skills e.g. law or chemistry.

GAIA’s philosophy departs from the current trend in AI benchmarks suggesting to target tasks that are ever more difficult for humans. They posit that the advent of Artificial General Intelligence (AGI) hinges on a system’s capability to exhibit similar robustness as the average human on such questions. Using GAIA’s methodology, we devised 466 questions and their answer.

1.2. Exponentially Faster Language Modelling

Language models only need to use an exponential fraction of their neurons for individual inferences. As proof, this paper presents FastBERT, a BERT variant that uses 0.3\% of its neurons during inference while performing on par with similar BERT models.

FastBERT selectively engages just 12 out of 4095 neurons for each layer inference. This is achieved by replacing feedforward networks with fast feedforward networks (FFFs).

While no truly efficient implementation currently exists to unlock the full acceleration potential of conditional neural execution, we provide high-level CPU code achieving 78x speedup over the optimized baseline feedforward implementation, and a PyTorch implementation delivering 40x speedup over the equivalent batched feedforward inference.

1.3. GPQA: A Graduate-Level Google-Proof Q&A Benchmark

This paper presents GPQA, a challenging dataset of 448 multiple-choice questions written by domain experts in biology, physics, and chemistry. The authors ensure that the questions are high-quality and tough: experts who have or are pursuing PhDs in the corresponding domains reach 65% accuracy (74% when discounting clear mistakes the experts identified in retrospect), while highly skilled non-expert validators only reach 34% accuracy, despite spending on average over 30 minutes with unrestricted access to the web (i.e., the questions are “Google-proof”).

The questions are also difficult for state-of-the-art AI systems, with the strongest GPT -4-based baseline achieving 39% accuracy. If we are to use future AI systems to help us answer very hard questions, for example, when developing new scientific knowledge, we need to develop scalable oversight methods that enable humans to supervise their outputs, which may be difficult even if the supervisors are themselves skilled and knowledgeable.

The difficulty of GPQA both for skilled non-experts and frontier AI systems should enable realistic scalable oversight experiments, which we hope can help devise ways for human experts to reliably get truthful information from AI systems that surpass human capabilities.

1.4. Memory Augmented Language Models through Mixture of Word Experts

Scaling up the number of parameters of language models has proven to be an effective approach to improving performance. For dense models, increasing model size proportionally increases the model’s computation footprint. In this work, we seek to aggressively decouple learning capacity and FLOPs through Mixture-of-Experts (MoE) style models with large knowledge-rich vocabulary-based routing functions and experts.

The proposed approach, dubbed Mixture of Word Experts (MoWE), can be seen as a memory-augmented model, where a large set of word-specific experts play the role of a sparse memory. They demonstrate that MoWE performs significantly better than the T5 family of models with a similar number of FLOPs in a variety of NLP tasks.

Additionally, MoWE outperforms regular MoE models on knowledge-intensive tasks and has similar performance to more complex memory-augmented approaches that often require invoking custom mechanisms to search the sparse memory.

1.5. Camels in a Changing Climate: Enhancing LM Adaptation with Tulu 2

Since the release of TULU, open resources for instruction tuning have developed quickly, from better base models to new finetuning techniques. We test and incorporate a number of these advances into TULU, resulting in TULU 2, a suite of improved TULU models for advancing the understanding and best practices of adapting pretrained language models to downstream tasks and user preferences.

Concretely, we release

TULU-V2-mix, an improved collection of high-quality instruction datasets

TULU 2, LLAMA-2 models finetuned on the V2 mixture

TULU 2+DPO, TULU 2 models trained with direct preference optimization (DPO), including the largest DPO-trained model to date (TULU 2+DPO 70B)

CODE TULU 2, CODE LLAMA models finetuned on our V2 mix that outperforms CODE LLAMA and its instruction-tuned variant, CODE LLAMA-Instruct.

Our evaluation from multiple perspectives shows that the TULU 2 suite achieves state-of-the-art performance among open models and matches or exceeds the performance of GPT-3.5-turbo-0301 on several benchmarks. We release all the checkpoints, data, training, and evaluation code to facilitate future open efforts on adapting large language models.

2. LLM Training & Evaluation

2.1. ToolTalk: Evaluating Tool Usage in a Conversational Setting

Large language models (LLMs) have displayed massive improvements in reason- ing and decision-making skills and can hold natural conversations with users. Many recent works seek to augment LLM-based assistants with external tools so they can access private or up-to-date information and carry out actions on behalf of users.

To better measure the performance of these assistants, this paper introduces ToolTalk, a benchmark consisting of complex user intents requiring multi-step tool usage specified through dialogue. ToolTalk contains 28 tools grouped into 7 plugins, and includes a complete simulated implementation of each tool, allowing for fully automated evaluation of assistants that rely on execution feedback.

ToolTalk also emphasizes tools that externally affect the world rather than only tools for referencing or searching information. We evaluate GPT-3.5 and GPT-4 on ToolTalk resulting in success rates of 26% and 50% respectively. Our analysis of the errors reveals three major categories and suggests some future directions for improvement.

2.2. MultiLoRA: Democratizing LoRA for Better Multi-Task Learning

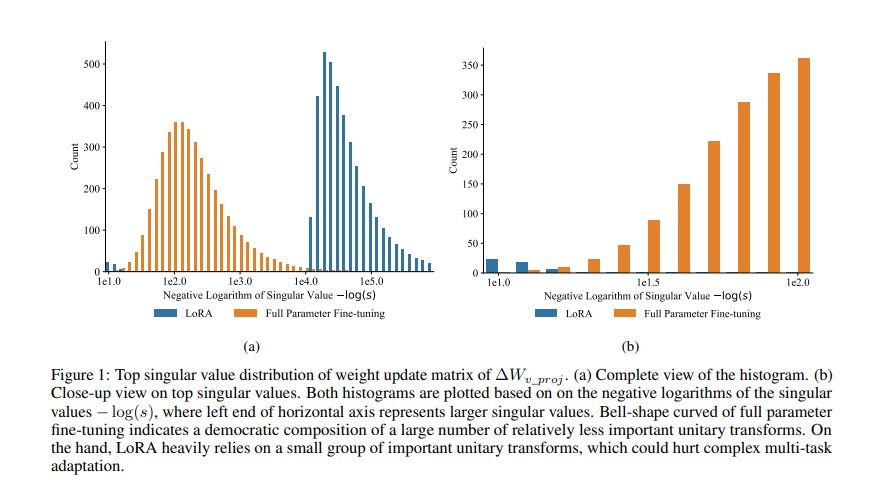

LoRA achieves remarkable resource efficiency and comparable performance when adapting LLMs for specific tasks. Since ChatGPT demonstrated superior performance on various tasks, there has been a growing desire to adapt one model for all tasks. However, the explicit low rank of LoRA limits the adaptation performance in complex multi-task scenarios.

LoRA is dominated by a small number of top singular vectors while fine-tuning decomposes into a set of less important unitary transforms. In this paper, we propose MultiLoRA for better multi-task adaptation by reducing the dominance of top singular vectors observed in LoRA.

MultiLoRA scales LoRA modules horizontally and changes parameter initialization of adaptation matrices to reduce parameter dependency, thus yielding more balanced unitary subspaces. We unprecedentedly construct specialized training data by mixing datasets of instruction, natural language understanding, and world knowledge, to cover semantically and syntactically different samples.

With only 2.5% of additional parameters, MultiLoRA outperforms single LoRA counterparts and fine-tunes on multiple benchmarks and model scales. Further investigation into weight update matrices of MultiLoRA exhibits reduced dependency on top singular vectors and more democratic unitary transform contributions.

2.3. TPTU-v2: Boosting Task Planning and Tool Usage of Large Language Model-based Agents in Real-world Systems

Large Language Models (LLMs) have demonstrated proficiency in addressing tasks that necessitate a combination of task planning and the usage of external tools that require a blend of task planning and the utilization of external tools, such as APIs. However, real-world complex systems present three prevalent challenges concerning task planning and tool usage:

The real system usually has a vast array of APIs, so it is impossible to feed the descriptions of all APIs to the prompt of LLMs as the token length is limited

The real system is designed for handling complex tasks, and the base LLMs can hardly plan a correct sub-task order and API-calling order for such tasks

Similar semantics and functionalities among APIs in real systems create challenges for both LLMs and even humans in distinguishing between them. In response, this paper introduces a comprehensive framework aimed at enhancing the Task Planning and Tool Usage (TPTU) abilities of LLM-based agents operating within real-world systems.

Our framework comprises three key components designed to address these challenges:

The API Retriever selects the most pertinent APIs for the user task among the extensive array available.

LLM Finetuner tunes a base LLM so that the finetuned LLM can be more capable of task planning and API calls.

The Demo Selector adaptively retrieves different demonstrations related to hard-to-distinguish APIs, which is further used for in-context learning to boost the final performance.

We validate our methods using a real-world commercial system as well as an open-sourced academic dataset, and the outcomes clearly showcase the efficacy of each individual component as well as the integrated framework.

3. LLM Fine Tuning

3.1. Adapters: A Unified Library for Parameter-Efficient and Modular Transfer Learning

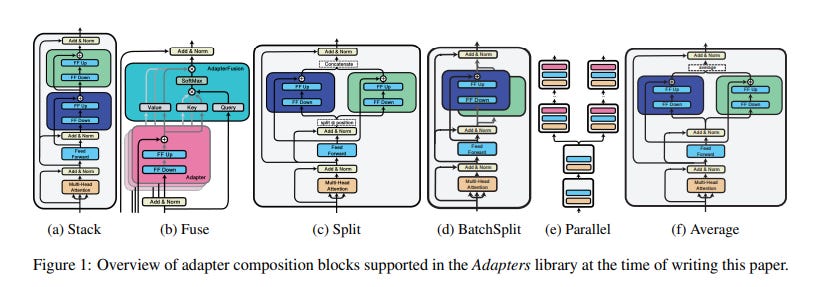

We introduce Adapters, an open-source library that unifies parameter-efficient and modular transfer learning in large language models. By integrating 10 diverse adapter methods into a unified interface, Adapters offer ease of use and flexible configuration.

Our library allows researchers and practitioners to leverage adapter modularity through composition blocks, enabling the design of complex adapter setups. We demonstrate the library’s efficacy by evaluating its performance against full fine-tuning on various NLP tasks.

Adapters provide a powerful tool for addressing the challenges of conventional fine-tuning paradigms and promoting more efficient and modular transfer learning.

4. LLM Reasoning

4.1. Orca 2: Teaching Small Language Models How to Reason

Orca 1 learns from rich signals, such as explanation traces, allowing it to outperform conventional instruction-tuned models on benchmarks like BigBench Hard and AGIEval. In Orca 2, we continue exploring how improved training signals can enhance smaller LMs’ reasoning abilities. Research on training small LMs has often relied on imitation learning to replicate the output of more capable models.

We contend that excessive emphasis on imitation may restrict the potential of smaller models. We seek to teach small LMs to employ different solution strategies for different tasks, potentially different from the one used by the larger model. For example, while larger models might provide a direct answer to a complex task, smaller models may not have the same capacity. In Orca 2, we teach the model various reasoning techniques (step-by-step, recall then generate, recall-reason-generate, direct answer, etc.).

More crucially, we aim to help the model learn to determine the most effective solution strategy for each task. We evaluate Orca 2 using a comprehensive set of 15 diverse benchmarks (corresponding to approximately 100 tasks and over 36,000 unique prompts).

Orca 2 significantly surpasses models of similar size and attains performance levels similar or better to those of models 5–10x larger, as assessed on complex tasks that test advanced reasoning abilities in zero-shot settings. We open-source Orca 2 to encourage further research on the development, evaluation, and alignment of smaller LMs.

5. Responsible AI & LLM Ethics

5.1. Testing Language Model Agents Safely in the Wild

A prerequisite for safe autonomy in the wild is safe testing in the wild. Yet real-world autonomous tests face several unique safety challenges, both due to the possibility of causing harm during a test, as well as the risk of encountering new unsafe agent behavior through interactions with real-world and potentially malicious actors.

We propose a framework for conducting safe autonomous agent tests on the open internet: agent actions are audited by a context-sensitive monitor that enforces a stringent safety boundary to stop an unsafe test, with suspect behavior ranked and logged to be examined by humans.

We design a basic safety monitor that is flexible enough to monitor existing LLM agents, and, using an adversarial simulated agent, we measure its ability to identify and stop unsafe situations. Then we apply the safety monitor on a battery of real-world tests of AutoGPT, and we identify several limitations and challenges that will face the creation of safe in-the-wild tests as autonomous agents grow more capable.

6. Transformers & Attention Models

6.1. Rethinking Attention: Exploring Shallow Feed-Forward Neural Networks as an Alternative to Attention Layers in Transformers

This work presents an analysis of the effectiveness of using standard shallow feed-forward networks to mimic the behavior of the attention mechanism in the original Transformer model, a state-of-the-art architecture for sequence-to-sequence tasks.

We substitute key elements of the attention mechanism in the Transformer with simple feed-forward networks, trained using the original components via knowledge distillation. Our experiments, conducted on the IWSLT2017 dataset, reveal the capacity of these “attentionless Transformers” to rival the performance of the original architecture.

Through rigorous ablation studies, and experimenting with various replacement network types and sizes, we offer insights that support the viability of our approach. This not only sheds light on the adaptability of shallow feed-forward networks in emulating attention mechanisms but also underscores their potential to streamline complex architectures for sequence-to-sequence tasks.

6.2. System 2 Attention (is something you might need too)

Soft attention in Transformer-based Large Language Models (LLMs) is susceptible to incorporating irrelevant information from the context into its latent representations, which adversely affects the next token generations.

To help rectify these issues, we introduce System 2 Attention (S2A), which leverages the ability of LLMs to reason in natural language and follow instructions in order to decide what to attend to. S2A regenerates the input context to only include the relevant portions, before attending to the regenerated context to elicit the final response.

In experiments, S2A outperforms standard attention-based LLMs on three tasks containing opinion or irrelevant information, QA, math word problems, and long-form generation, where S2A increases factuality and objectivity and decreases sycophancy.

Are you looking to start a career in data science and AI and do not know how? I offer data science mentoring sessions and long-term career mentoring:

Mentoring sessions: https://lnkd.in/dXeg3KPW

Long-term mentoring: https://lnkd.in/dtdUYBrM

👏👏👏👏👏👏