Top Important Computer Vision Papers for the Week from 24/06 to 30/06

Stay Updated with Recent Computer Vision Research

Every week, researchers from top research labs, companies, and universities publish exciting breakthroughs in various topics such as diffusion models, vision language models, image editing and generation, video processing and generation, and image recognition.

This article provides a comprehensive overview of the most significant papers published in the fourth week of June 2024, highlighting the latest research and advancements in computer vision.

Whether you’re a researcher, practitioner, or enthusiast, this article will provide valuable insights into the state-of-the-art techniques and tools in computer vision.

Table of Contents:

Diffusion Models

Vision Language Models (VLMs)

Image Generation & Editing

Video Understanding & Generation

My New E-Book: LLM Roadmap from Beginner to Advanced Level

I am pleased to announce that I have published my new ebook, LLM Roadmap from Beginner to Advanced Level. This ebook will provide all the resources you need to start your journey towards mastering LLMs.

1. Diffusion Models

1.1. FreeTraj: Tuning-Free Trajectory Control in Video Diffusion Models

The diffusion model has demonstrated remarkable capability in video generation, which further sparks interest in introducing trajectory control into the generation process.

While existing works mainly focus on training-based methods (e.g., conditional adapter), we argue that the diffusion model itself allows decent control over the generated content without requiring any training.

In this study, we introduce a tuning-free framework to achieve trajectory-controllable video generation, by imposing guidance on both noise construction and attention computation.

Specifically:

We first show several instructive phenomena and analyze how the initial noise influences the motion trajectory of generated content.

Subsequently, we propose FreeTraj, a tuning-free approach that enables trajectory control by modifying noise sampling and attention mechanisms.

Furthermore, we extend FreeTraj to facilitate longer and larger video generation with controllable trajectories.

Equipped with these designs, users have the flexibility to provide trajectories manually or opt for trajectories automatically generated by the LLM trajectory planner. Extensive experiments validate the efficacy of our approach in enhancing the trajectory controllability of video diffusion models.
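To make the idea of a user-provided trajectory concrete, here is a minimal sketch of how one might be represented: a few keyframe bounding boxes, linearly interpolated to one box per frame. The input format, the normalized coordinates, and the function name `interpolate_boxes` are assumptions for illustration, not the interface used by FreeTraj.

```python
def interpolate_boxes(keyframes, num_frames):
    """Linearly interpolate sparse keyframe boxes into one box per frame.

    keyframes: dict mapping frame index -> (x0, y0, x1, y1) in normalized
    [0, 1] coordinates. This format is hypothetical. It must contain
    entries for frame 0 and frame num_frames - 1.
    """
    indices = sorted(keyframes)
    boxes = []
    for frame in range(num_frames):
        # Nearest keyframes at or before, and at or after, this frame.
        prev_idx = max(i for i in indices if i <= frame)
        next_idx = min(i for i in indices if i >= frame)
        if prev_idx == next_idx:
            boxes.append(tuple(keyframes[prev_idx]))
            continue
        t = (frame - prev_idx) / (next_idx - prev_idx)
        start, end = keyframes[prev_idx], keyframes[next_idx]
        boxes.append(tuple(a + t * (b - a) for a, b in zip(start, end)))
    return boxes


# A box moving from the top-left corner to the bottom-right over 5 frames.
trajectory = interpolate_boxes(
    {0: (0.0, 0.0, 0.2, 0.2), 4: (0.8, 0.8, 1.0, 1.0)}, num_frames=5
)
```

A per-frame box sequence like this is the kind of signal that guidance on noise construction and attention could consume. How FreeTraj actually uses it is described in the paper itself.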

1.2. Aligning Diffusion Models with Noise-Conditioned Perception

Recent advancements in human preference optimization, initially developed for Language Models (LMs), have shown promise for text-to-image Diffusion Models, enhancing prompt alignment, visual appeal, and user preference.

Unlike LMs, Diffusion Models typically optimize in pixel or VAE space, which does not align well with human perception, leading to slower and less efficient training during the preference alignment stage. We propose using a perceptual objective in the U-Net embedding space of the diffusion model to address these issues.

Our approach involves fine-tuning Stable Diffusion 1.5 and XL using Direct Preference Optimization (DPO), Contrastive Preference Optimization (CPO), and supervised fine-tuning (SFT) within this embedding space. This method significantly outperforms standard latent-space implementations across various metrics, including quality and computational cost.
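For readers unfamiliar with DPO, the sketch below shows the generic DPO objective for a single preference pair. It is the standard log-likelihood form, not the paper's embedding-space variant. The function name, argument names, and the `beta` default are illustrative assumptions.

```python
import math


def dpo_loss(policy_logp_win, policy_logp_lose,
             ref_logp_win, ref_logp_lose, beta=0.1):
    """Generic DPO loss for one (preferred, rejected) pair.

    Inputs are log-likelihoods of the preferred ("win") and rejected
    ("lose") samples under the trained policy and a frozen reference
    model. beta=0.1 is an illustrative default, not a value from the paper.
    """
    # How much more the policy favors the winner than the reference does.
    margin = (policy_logp_win - ref_logp_win) - (policy_logp_lose - ref_logp_lose)
    # -log(sigmoid(beta * margin)), computed stably as softplus(-beta * margin).
    x = -beta * margin
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


# When the policy matches the reference, the loss is log(2).
baseline = dpo_loss(0.0, 0.0, 0.0, 0.0)
```

The loss falls as the policy raises the preferred sample's likelihood relative to the rejected one. Per the summary above, the paper's contribution is to define the objective in the U-Net embedding space rather than in pixel or VAE space, which this sketch does not attempt to reproduce.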

For SDXL, our approach provides 60.8% general preference, 62.2% visual appeal, and 52.1% prompt following against the original open-sourced SDXL-DPO on the PartiPrompts dataset, while significantly reducing compute.

Our approach not only improves the efficiency and quality of human preference alignment for diffusion models but is also easily integrated with other optimization techniques.

2. Vision Language Models (VLMs)

2.1. Cambrian-1: A Fully Open, Vision-Centric Exploration of Multimodal LLMs

We introduce Cambrian-1, a family of multimodal LLMs (MLLMs) designed with a vision-centric approach. While stronger language models can enhance multimodal capabilities, the design choices for vision components are often insufficiently explored and disconnected from visual representation learning research.

This gap hinders accurate sensory grounding in real-world scenarios. Our study uses LLMs and visual instruction tuning as an interface to evaluate various visual representations, offering new insights into different models and architectures — self-supervised, strongly supervised, or combinations thereof — based on experiments with over 20 vision encoders.

We critically examine existing MLLM benchmarks, addressing the difficulties involved in consolidating and interpreting results from various tasks, and introduce a new vision-centric benchmark, CV-Bench. To further improve visual grounding, we propose the Spatial Vision Aggregator (SVA), a dynamic and spatially-aware connector that integrates high-resolution vision features with LLMs while reducing the number of tokens.

Additionally, we discuss the curation of high-quality visual instruction-tuning data from publicly available sources, emphasizing the importance of data source balancing and distribution ratio.

Collectively, Cambrian-1 not only achieves state-of-the-art performance but also serves as a comprehensive, open cookbook for instruction-tuned MLLMs. We provide model weights, code, supporting tools, datasets, and detailed instruction-tuning and evaluation recipes. We hope our release will inspire and accelerate advancements in multimodal systems and visual representation learning.

2.2. AUTOHALLUSION: Automatic Generation of Hallucination Benchmarks for Vision-Language Models

Large vision-language models (LVLMs) hallucinate: certain context cues in an image may trigger the language module’s overconfident and incorrect reasoning on abnormal or hypothetical objects.

Though a few benchmarks have been developed to investigate LVLM hallucinations, they mainly rely on hand-crafted corner cases whose failure patterns may not generalize, and fine-tuning on them could undermine their validity.

This motivates us to develop the first automatic benchmark generation approach, AUTOHALLUSION, which harnesses a few principal strategies to create diverse hallucination examples.

It probes the language modules in LVLMs for context cues and uses them to synthesize images by:

Adding objects abnormal to the context cues

For two co-occurring objects, keeping one and excluding the other

Removing objects closely tied to the context cues.

It then generates image-based questions whose ground-truth answers contradict the language module’s prior. A model has to overcome contextual biases and distractions to reach correct answers, while incorrect or inconsistent answers indicate hallucinations.
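The three manipulation strategies and the question-generation step described above can be pictured with a toy sketch that treats a scene as a set of object names. The real pipeline edits actual images. Every function name and the question template here are hypothetical.

```python
def insert_abnormal(scene, abnormal_object):
    """Strategy 1: add an object that does not fit the context."""
    return scene | {abnormal_object}


def keep_one_of_pair(scene, kept, excluded):
    """Strategy 2: of two co-occurring objects, keep one and drop the other."""
    return (scene | {kept}) - {excluded}


def remove_correlated(scene, correlated_object):
    """Strategy 3: remove an object closely tied to the context."""
    return scene - {correlated_object}


def existence_question(obj, scene):
    """Build a question whose ground truth comes from the edited scene."""
    answer = "yes" if obj in scene else "no"
    return f"Is there a {obj} in this image?", answer


office = {"desk", "monitor", "keyboard"}
edited = keep_one_of_pair(office, kept="monitor", excluded="keyboard")
# A model relying on the prior "monitors come with keyboards" may answer "yes".
question, truth = existence_question("keyboard", edited)
```

Because the ground truth is derived from the edit itself, a wrong answer can be attributed to the model following its language prior instead of the image.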

AUTOHALLUSION enables us to create new benchmarks at minimal cost and thus overcomes the fragility of hand-crafted benchmarks. It also reveals common failure patterns and reasons, providing key insights to detect, avoid, or control hallucinations.

Comprehensive evaluations of top-tier LVLMs, e.g., GPT-4V(ision), Gemini Pro Vision, Claude 3, and LLaVA-1.5, show hallucination induction success rates of 97.7% and 98.7% on the synthetic and real-world datasets of AUTOHALLUSION, respectively, paving the way for a long battle against hallucinations.

2.3. OMG-LLaVA: Bridging Image-level, Object-level, Pixel-level Reasoning and Understanding

Current universal segmentation methods demonstrate strong capabilities in pixel-level image and video understanding. However, they lack reasoning abilities and cannot be controlled via text instructions.

In contrast, large vision-language multimodal models exhibit powerful vision-based conversation and reasoning capabilities but lack pixel-level understanding and have difficulty accepting visual prompts for flexible user interaction.

This paper proposes OMG-LLaVA, a new and elegant framework combining powerful pixel-level vision understanding with reasoning abilities. It can accept various visual and text prompts for flexible user interaction.

Specifically, we use a universal segmentation method as the visual encoder, integrating image information, perception priors, and visual prompts into visual tokens provided to the LLM. The LLM is responsible for understanding the user’s text instructions and providing text responses and pixel-level segmentation results based on the visual information.

We propose perception prior embedding to better integrate perception priors with image features. OMG-LLaVA achieves image-level, object-level, and pixel-level reasoning and understanding in a single model, matching or surpassing the performance of specialized methods on multiple benchmarks.

Rather than using an LLM to connect each specialist, our work aims at end-to-end training of one encoder, one decoder, and one LLM. The code and model have been released for further research.

2.4. MG-LLaVA: Towards Multi-Granularity Visual Instruction Tuning

Multi-modal large language models (MLLMs) have made significant strides in various visual understanding tasks. However, the majority of these models are constrained to process low-resolution images, which limits their effectiveness in perception tasks that necessitate detailed visual information.

In our study, we present MG-LLaVA, an innovative MLLM that enhances the model’s visual processing capabilities by incorporating a multi-granularity vision flow, which includes low-resolution, high-resolution, and object-centric features.

We propose the integration of an additional high-resolution visual encoder to capture fine-grained details, which are then fused with base visual features through a Conv-Gate fusion network. To further refine the model’s object recognition abilities, we incorporate object-level features derived from bounding boxes identified by offline detectors.
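As a rough intuition for gated feature fusion, the sketch below fuses one low-resolution and one high-resolution feature vector using a learned sigmoid gate. This is a generic gating pattern under assumed shapes and an assumed residual form, not the Conv-Gate network from the paper. The weights stand in for what a 1x1 convolution would compute at a single spatial location.

```python
import math


def gated_fusion(low, high, gate_weights, gate_bias):
    """Fuse two feature vectors as low + gate * high.

    low, high: lists of floats of equal length (one spatial location).
    gate_weights: one weight row per output channel over concat(low, high).
    gate_bias: one bias per output channel.
    All shapes and the residual form are illustrative assumptions.
    """
    concat = low + high
    fused = []
    for channel in range(len(low)):
        z = sum(w * x for w, x in zip(gate_weights[channel], concat))
        z += gate_bias[channel]
        gate = 1.0 / (1.0 + math.exp(-z))  # sigmoid, in (0, 1)
        fused.append(low[channel] + gate * high[channel])
    return fused


# With zero weights and bias the gate is 0.5 for every channel.
fused = gated_fusion([1.0, 2.0], [4.0, 6.0],
                     gate_weights=[[0.0] * 4, [0.0] * 4],
                     gate_bias=[0.0, 0.0])
```

The gate lets the model decide, per channel and location, how much fine-grained detail to mix into the base features.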

Being trained solely on publicly available multimodal data through instruction tuning, MG-LLaVA demonstrates exceptional perception skills. We instantiate MG-LLaVA with a wide variety of language encoders, ranging from 3.8B to 34B, to evaluate the model’s performance comprehensively.

Extensive evaluations across multiple benchmarks demonstrate that MG-LLaVA outperforms existing MLLMs of comparable parameter sizes, showcasing its remarkable efficacy.

3. Image Generation & Editing

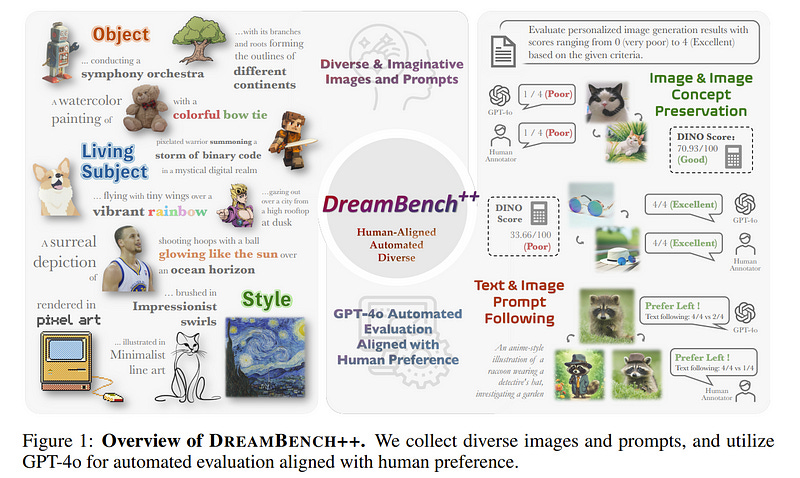

3.1. DreamBench++: A Human-Aligned Benchmark for Personalized Image Generation

Personalized image generation holds great promise in assisting humans in everyday work and life due to its impressive function in creatively generating personalized content.

However, current evaluations are either automated but misaligned with human judgment, or rely on human evaluation, which is time-consuming and expensive. In this work, we present DreamBench++, a human-aligned benchmark automated by advanced multimodal GPT models.

Specifically, we systematically design the prompts to let GPT be both human-aligned and self-aligned, empowered with task reinforcement. Further, we construct a comprehensive dataset comprising diverse images and prompts.

By benchmarking 7 modern generative models, we demonstrate that DreamBench++ results in significantly more human-aligned evaluation, helping boost the community with innovative findings.

3.2. MUMU: Bootstrapping Multimodal Image Generation from Text-to-Image Data

We train a model to generate images from multimodal prompts of interleaved text and images such as “a <picture of a man> man and his <picture of a dog> dog in an <picture of a cartoon> animated style.”

We bootstrap a multimodal dataset by extracting semantically meaningful image crops corresponding to words in the image captions of synthetically generated and publicly available text-image data.

Our model, MUMU, is composed of a vision-language model encoder with a diffusion decoder and is trained on a single 8xH100 GPU node. Despite being only trained on crops from the same image, MUMU learns to compose inputs from different images into a coherent output.

For example, an input of a realistic person and a cartoon will output the same person in the cartoon style, and an input of a standing subject and a scooter will output the subject riding the scooter.

As a result, our model generalizes to tasks such as style transfer and character consistency. Our results show the promise of using multimodal models as general-purpose controllers for image generation.

3.3. ClotheDreamer: Text-Guided Garment Generation with 3D Gaussians

High-fidelity 3D garment synthesis from text is desirable yet challenging for digital avatar creation. Recent diffusion-based approaches via Score Distillation Sampling (SDS) have enabled new possibilities, but they either couple the garment tightly with the human body or produce assets that are hard to reuse.

We introduce ClotheDreamer, a 3D Gaussian-based method for generating wearable, production-ready 3D garment assets from text prompts. We propose a novel representation, Disentangled Clothe Gaussian Splatting (DCGS), to enable separate optimization.

DCGS represents a clothed avatar as one Gaussian model but freezes body Gaussian splats. To enhance quality and completeness, we incorporate bidirectional SDS to supervise clothed avatar and garment RGBD renderings respectively with pose conditions and propose a new pruning strategy for loose clothing.

Our approach can also support custom clothing templates as input. Benefiting from our design, the synthetic 3D garment can be easily applied to virtual try-on and support physically accurate animation. Extensive experiments showcase our method’s superior and competitive performance.

3.4. YouDream: Generating Anatomically Controllable Consistent Text-to-3D Animals

3D generation guided by text-to-image diffusion models enables the creation of visually compelling assets. However, previous methods explore generation based only on image or text.

The boundaries of creativity are limited by what can be expressed through words or the images that can be sourced. We present YouDream, a method to generate high-quality anatomically controllable animals. YouDream is guided using a text-to-image diffusion model controlled by 2D views of a 3D pose prior. Our method generates 3D animals that are not possible to create using previous text-to-3D generative methods.

Additionally, our method is capable of preserving anatomic consistency in the generated animals, an area where prior text-to-3D approaches often struggle. Moreover, we design a fully automated pipeline for generating commonly found animals.

To circumvent the need for human intervention to create a 3D pose, we propose a multi-agent LLM that adapts poses from a limited library of animal 3D poses to represent the desired animal. A user study conducted on the outcomes of YouDream demonstrates the preference of the animal models generated by our method over others.

4. Video Understanding & Generation

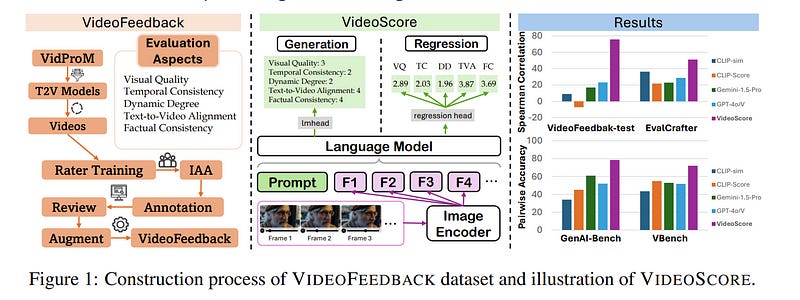

4.1. MantisScore: Building Automatic Metrics to Simulate Fine-grained Human Feedback for Video Generation

Recent years have witnessed great advances in video generation. However, the development of automatic video metrics is lagging significantly behind. None of the existing metrics can provide reliable scores over generated videos.

The main barrier is the lack of large-scale human-annotated datasets. In this paper, we release VideoFeedback, the first large-scale dataset containing human-provided multi-aspect scores for 37.6K synthesized videos from 11 existing video generative models. We train MantisScore (initialized from Mantis) based on VideoFeedback to enable automatic video quality assessment.

Experiments show that the Spearman correlation between MantisScore and human ratings reaches 77.1 on the VideoFeedback test set, beating the prior best metrics by about 50 points. Further results on the held-out EvalCrafter, GenAI-Bench, and VBench benchmarks show that MantisScore consistently correlates much more strongly with human judges than other metrics.
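For context on the headline number, Spearman correlation measures how well two sets of scores agree in rank order. The sketch below computes it from scratch: it ranks both lists (averaging ranks for ties) and takes the Pearson correlation of the ranks. The example scores are made up for illustration. The reported 77.1 appears to be the coefficient scaled by 100.

```python
import math


def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole group of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


human_scores = [1, 2, 3, 4, 5]             # hypothetical human ratings
metric_scores = [0.1, 0.3, 0.2, 0.8, 0.9]  # hypothetical metric outputs
rho = spearman(human_scores, metric_scores)  # 0.9 for this toy data
```

Because only rank order matters, a metric can score on any scale and still be compared fairly against human ratings.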

Due to these results, we believe MantisScore can serve as a great proxy for human raters to (1) rate different video models to track progress and (2) simulate fine-grained human feedback in Reinforcement Learning with Human Feedback (RLHF) to improve current video generation models.

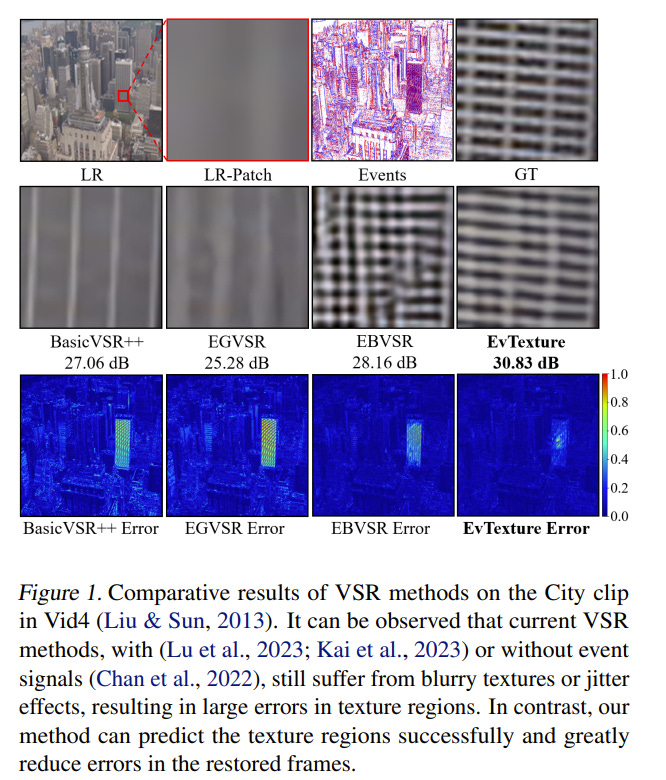

4.2. EvTexture: Event-driven Texture Enhancement for Video Super-Resolution

Event-based vision has drawn increasing attention due to its unique characteristics, such as high temporal resolution and high dynamic range. It has been used in video super-resolution (VSR) recently to enhance flow estimation and temporal alignment.

Rather than for motion learning, we propose in this paper the first VSR method that utilizes event signals for texture enhancement. Our method, called EvTexture, leverages high-frequency details of events to better recover texture regions in VSR.

In our EvTexture, a new texture enhancement branch is presented. We further introduce an iterative texture enhancement module to progressively explore the high-temporal-resolution event information for texture restoration.

This allows for gradual refinement of texture regions across multiple iterations, leading to more accurate and rich high-resolution details. Experimental results show that our EvTexture achieves state-of-the-art performance on four datasets.

For the Vid4 dataset with rich textures, our method can get up to 4.67 dB gain compared with recent event-based methods.
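To put a decibel gain in perspective, the sketch below assumes the figure refers to PSNR, the usual quality metric in video super-resolution. It computes PSNR from pixel values and converts a dB gain into the equivalent reduction in mean squared error. The function names and the 8-bit peak value are illustrative assumptions.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two flat pixel lists."""
    mse = sum((r - s) ** 2 for r, s in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


def mse_reduction_factor(gain_db):
    """How many times smaller the MSE becomes for a given PSNR gain."""
    return 10 ** (gain_db / 10)


# Under the PSNR assumption, a 4.67 dB gain means roughly 2.9x lower MSE.
factor = mse_reduction_factor(4.67)
```

Since PSNR is logarithmic, a gain of a few dB already corresponds to a large drop in reconstruction error.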

4.3. Video-Infinity: Distributed Long Video Generation

Diffusion models have recently achieved remarkable results for video generation. Despite the encouraging performances, the generated videos are typically constrained to a small number of frames, resulting in clips lasting merely a few seconds.

The primary challenges in producing longer videos include the substantial memory requirements and the extended processing time required on a single GPU.

A straightforward solution would be to split the workload across multiple GPUs, which, however, leads to two issues:

Ensuring all GPUs communicate effectively to share timing and context information

Modifying existing video diffusion models, which are usually trained on short sequences, to create longer videos without additional training.

To tackle these, in this paper, we introduce Video-Infinity, a distributed inference pipeline that enables parallel processing across multiple GPUs for long-form video generation.

Specifically, we propose two coherent mechanisms: Clip parallelism and Dual-scope attention. Clip parallelism optimizes the gathering and sharing of context information across GPUs which minimizes communication overhead, while Dual-scope attention modulates the temporal self-attention to balance local and global contexts efficiently across the devices.

Together, the two mechanisms join forces to distribute the workload and enable the fast generation of long videos. Under an 8 x Nvidia 6000 Ada GPU (48G) setup, our method generates videos up to 2,300 frames in approximately 5 minutes, enabling long video generation at a speed 100 times faster than the prior methods.
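The two mechanisms can be pictured with a small sketch. One function splits a long frame sequence into contiguous clips, one per GPU. The other selects, for a given frame, a local window of neighbors plus a few frames sampled evenly across the whole video as global context. The function names, window radius, and uniform sampling rule are assumptions for illustration, not the paper's implementation.

```python
def split_into_clips(num_frames, num_devices):
    """Assign contiguous, near-equal frame ranges to each device."""
    base, extra = divmod(num_frames, num_devices)
    clips, start = [], 0
    for device in range(num_devices):
        length = base + (1 if device < extra else 0)
        clips.append(range(start, start + length))
        start += length
    return clips


def dual_scope_context(frame, num_frames, local_radius, num_global):
    """Frames a given frame attends to: nearby ones plus a global sample."""
    local = list(range(max(0, frame - local_radius),
                       min(num_frames, frame + local_radius + 1)))
    step = num_frames / num_global
    global_frames = [int(i * step) for i in range(num_global)]
    return local, global_frames


# The setup quoted above: 2,300 frames across 8 GPUs.
clips = split_into_clips(2300, 8)
clip_lengths = [len(clip) for clip in clips]  # four clips of 288, four of 287
local, global_frames = dual_scope_context(0, 2300, local_radius=2, num_global=4)
```

In a split like this, each device needs only its own clip plus a small amount of shared context, which fits the summary's claim that communication overhead is minimized.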

4.4. Image Conductor: Precision Control for Interactive Video Synthesis

Filmmaking and animation production often require sophisticated techniques for coordinating camera transitions and object movements, typically involving labor-intensive real-world capturing.

Despite advancements in generative AI for video creation, achieving precise control over motion for interactive video asset generation remains challenging.

To this end, we propose Image Conductor, a method for precise control of camera transitions and object movements to generate video assets from a single image.

A well-cultivated training strategy is proposed to separate distinct camera and object motion by camera LoRA weights and object LoRA weights. To further address cinematographic variations from ill-posed trajectories, we introduce a camera-free guidance technique during inference, enhancing object movements while eliminating camera transitions.

Additionally, we develop a trajectory-oriented video motion data curation pipeline for training. Quantitative and qualitative experiments demonstrate our method’s precision and fine-grained control in generating motion-controllable videos from images, advancing the practical application of interactive video synthesis.

4.5. video-SALMONN: Speech-Enhanced Audio-Visual Large Language Models

Speech understanding as an element of the more generic video understanding using audio-visual large language models (av-LLMs) is a crucial yet understudied aspect.

This paper proposes video-SALMONN, a single end-to-end av-LLM for video processing, which can understand not only visual frame sequences, audio events, and music, but speech as well.

To obtain the fine-grained temporal information required by speech understanding, while remaining efficient for other video elements, this paper proposes a novel multi-resolution causal Q-Former (MRC Q-Former) structure to connect pre-trained audio-visual encoders and the backbone large language model.

Moreover, dedicated training approaches, including the diversity loss and the unpaired audio-visual mixed training scheme, are proposed to avoid frame or modality dominance. On the introduced speech-audio-visual evaluation benchmark, video-SALMONN achieves more than 25% absolute accuracy improvements on the video-QA task and over 30% absolute accuracy improvements on audio-visual QA tasks with human speech.

In addition, video-SALMONN demonstrates remarkable video comprehension and reasoning abilities on tasks that other av-LLMs have not previously handled.

4.6. ChronoMagic-Bench: A Benchmark for Metamorphic Evaluation of Text-to-Time-lapse Video Generation

We propose a novel text-to-video (T2V) generation benchmark, ChronoMagic-Bench, to evaluate the temporal and metamorphic capabilities of the T2V models (e.g. Sora and Lumiere) in time-lapse video generation.

In contrast to existing benchmarks that focus on the visual quality and textual relevance of generated videos, ChronoMagic-Bench focuses on the model’s ability to generate time-lapse videos with significant metamorphic amplitude and temporal coherence.

The benchmark probes T2V models for their physics, biology, and chemistry capabilities, in a free-form text query. For these purposes, ChronoMagic-Bench introduces 1,649 prompts and real-world videos as references, categorized into four major types of time-lapse videos: biological, human-created, meteorological, and physical phenomena, which are further divided into 75 subcategories.

This categorization comprehensively evaluates the model’s capacity to handle diverse and complex transformations. To accurately align human preference with the benchmark, we introduce two new automatic metrics, MTScore and CHScore, to evaluate the videos’ metamorphic attributes and temporal coherence.

MTScore measures the metamorphic amplitude, reflecting the degree of change over time, while CHScore assesses the temporal coherence, ensuring the generated videos maintain logical progression and continuity.

Based on the ChronoMagic-Bench, we conduct comprehensive manual evaluations of ten representative T2V models, revealing their strengths and weaknesses across different categories of prompts, and providing a thorough evaluation framework that addresses current gaps in video generation research.

Moreover, we create a large-scale ChronoMagic-Pro dataset, containing 460k high-quality pairs of 720p time-lapse videos and detailed captions ensuring high physical pertinence and large metamorphic amplitude.

Are you looking to start a career in data science and AI and do not know how? I offer data science mentoring sessions and long-term career mentoring:

Mentoring sessions: https://lnkd.in/dXeg3KPW

Long-term mentoring: https://lnkd.in/dtdUYBrM