Top Important Computer Vision Papers for the Week from 06/11 to 12/11

Stay Relevant to Recent Computer Vision Research

Every week, top-tier academic conferences and journals showcase innovative research in computer vision, presenting breakthroughs in subfields such as image recognition, vision model optimization, generative adversarial networks (GANs), image segmentation, video analysis, and more.

This article provides an overview of the most significant papers published in the second week of November 2023, highlighting the latest research and advancements in computer vision. Whether you’re a researcher, practitioner, or enthusiast, this article will provide valuable insights into the state-of-the-art techniques and tools in computer vision.

Are you looking to start a career in data science and AI but do not know how? I offer data science mentoring sessions and long-term career mentoring:

Mentoring sessions: https://lnkd.in/dXeg3KPW

Long-term mentoring: https://lnkd.in/dtdUYBrM

1. Image Generation

1.1. LDM3D-VR: Latent Diffusion Model for 3D VR

Latent diffusion models have proven to be state-of-the-art in the creation and manipulation of visual outputs. However, according to the authors, the generation of depth maps jointly with RGB images is still limited.

In this paper, the authors introduce LDM3D-VR, a suite of diffusion models targeting virtual reality development that includes LDM3D-pano and LDM3D-SR. These models enable the generation of panoramic RGBD based on textual prompts and the upscaling of low-resolution inputs to high-resolution RGBD, respectively.

The models are fine-tuned from existing pretrained models on datasets containing panoramic/high-resolution RGB images, depth maps, and captions. Both models are evaluated in comparison to existing related methods.

1.2. LCM-LoRA: A Universal Stable-Diffusion Acceleration Module

Latent Consistency Models (LCMs) have achieved impressive performance in accelerating text-to-image generative tasks, producing high-quality images with minimal inference steps.

LCMs are distilled from pre-trained latent diffusion models (LDMs), requiring only ~32 A100 GPU training hours. This report further extends LCMs’ potential in two aspects: First, by applying LoRA distillation to Stable-Diffusion models including SD-V1.5, SSD-1B, and SDXL, they have expanded LCM’s scope to larger models with significantly less memory consumption, achieving superior image generation quality.

Second, they identify the LoRA parameters obtained through LCM distillation as a universal Stable-Diffusion acceleration module, named LCM-LoRA. LCM-LoRA can be directly plugged into various Stable-Diffusion fine-tuned models or LoRAs without training, thus representing a universally applicable accelerator for diverse image generation tasks.

Compared with previous numerical PF-ODE solvers such as DDIM and DPM-Solver, LCM-LoRA can be viewed as a plug-in neural PF-ODE solver that possesses strong generalization abilities.
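To make the "plug-in" idea more concrete, here is a minimal sketch of generic LoRA arithmetic: a low-rank update (the product of two small matrices) is scaled and added to a frozen weight matrix, and several such updates can be stacked. This is not the authors' code. The function names, the toy matrices, and the "style" and "acceleration" labels are assumptions made purely for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(weight, lora_b, lora_a, scale=1.0):
    """Return weight + scale * (lora_b @ lora_a) without modifying the inputs."""
    delta = matmul(lora_b, lora_a)
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(weight, delta)
    ]


# Toy 2x2 base weight and two rank-1 LoRA updates (all values are made up).
base = [[1.0, 0.0], [0.0, 1.0]]
style_b, style_a = [[1.0], [0.0]], [[0.0, 2.0]]   # hypothetical "style" LoRA
accel_b, accel_a = [[0.0], [1.0]], [[3.0, 0.0]]   # hypothetical "acceleration" LoRA

# Both updates are added on top of the same frozen base weight.
merged = merge_lora(base, style_b, style_a, scale=0.5)
merged = merge_lora(merged, accel_b, accel_a, scale=1.0)
print(merged)
```

Because each update is only added to the base weight, no retraining is needed to combine them, which is the property the report relies on when it describes LCM-LoRA as a module that can be plugged into fine-tuned models.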

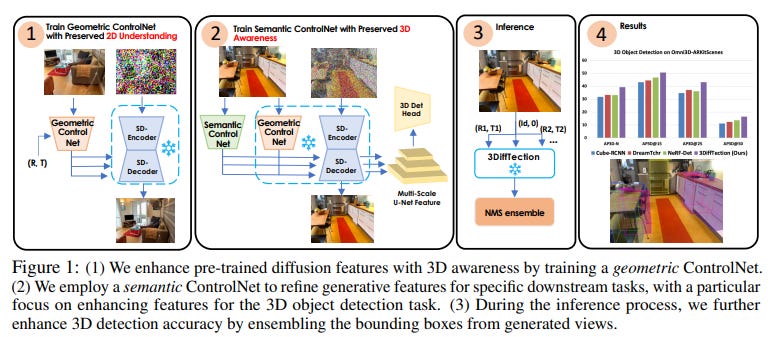

1.3. 3DiffTection: 3D Object Detection with Geometry-Aware Diffusion Features

In this paper, the authors present 3DiffTection, a state-of-the-art method for 3D object detection from single images that leverages features from a 3D-aware diffusion model. Annotating large-scale image data for 3D detection is resource-intensive and time-consuming. Recently, large pretrained image diffusion models have become prominent as effective feature extractors for 2D perception tasks.

However, these features are initially trained on paired text and image data, which are not optimized for 3D tasks, and often exhibit a domain gap when applied to the target data. The proposed approach bridges these gaps through two specialized tuning strategies: geometric and semantic.

For geometric tuning, they fine-tune a diffusion model to perform novel view synthesis conditioned on a single image, by introducing a novel epipolar warp operator. This task meets two essential criteria: the necessity for 3D awareness and reliance solely on posed image data, which are readily available (e.g., from videos) and do not require manual annotation.

For semantic refinement, they further train the model on target data with detection supervision. Both tuning phases employ ControlNet to preserve the integrity of the original feature capabilities.

In the final step, they harness these enhanced capabilities to conduct a test-time prediction ensemble across multiple virtual viewpoints. The resulting 3D-aware features are tailored for 3D detection and excel at identifying cross-view point correspondences.

Consequently, the model emerges as a powerful 3D detector that substantially surpasses previous methods. For example, it outperforms Cube-RCNN, a precedent in single-view 3D detection, by 9.43% in AP3D on the Omni3D-ARkitscene dataset. Furthermore, 3DiffTection showcases robust data efficiency and generalization to cross-domain data.

1.4. Holistic Evaluation of Text-To-Image Models

The stunning qualitative improvement of recent text-to-image models has led to their widespread attention and adoption. However, a comprehensive quantitative understanding of their capabilities and risks is still lacking. To fill this gap, the authors introduce a new benchmark, Holistic Evaluation of Text-to-Image Models (HEIM).

Whereas previous evaluations focus mostly on text-image alignment and image quality, the authors identify 12 aspects, including text-image alignment, image quality, aesthetics, originality, reasoning, knowledge, bias, toxicity, fairness, robustness, multilingualism, and efficiency.

They curate 62 scenarios encompassing these aspects and evaluate 26 state-of-the-art text-to-image models on this benchmark. The results reveal that no single model excels in all aspects, with different models demonstrating different strengths.

1.5. LRM: Large Reconstruction Model for Single Image to 3D

This paper proposes the first Large Reconstruction Model (LRM) that predicts the 3D model of an object from a single input image within just 5 seconds.

In contrast to many previous methods that are trained on small-scale datasets such as ShapeNet in a category-specific fashion, LRM adopts a highly scalable transformer-based architecture with 500 million learnable parameters to directly predict a neural radiance field (NeRF) from the input image.

They train the model in an end-to-end manner on massive multi-view data containing around 1 million objects, including both synthetic renderings from Objaverse and real captures from MVImgNet.

This combination of a high-capacity model and large-scale training data makes the model highly generalizable and able to produce high-quality 3D reconstructions from various testing inputs, including real-world in-the-wild captures and images from generative models.

1.6. I2VGen-XL: High-Quality Image-to-Video Synthesis via Cascaded Diffusion Models

Video synthesis has recently made remarkable strides benefiting from the rapid development of diffusion models. However, it still encounters challenges in terms of semantic accuracy, clarity, and spatio-temporal continuity.

These challenges primarily arise from the scarcity of well-aligned text-video data and the complex inherent structure of videos, making it difficult for the model to simultaneously ensure semantic and qualitative excellence.

In this report, the authors propose a cascaded I2VGen-XL approach that enhances model performance by decoupling these two factors and ensures the alignment of the input data by utilizing static images as a form of crucial guidance. I2VGen-XL consists of two stages:

First, the base stage guarantees coherent semantics and preserves content from input images by using two hierarchical encoders.

Second, the refinement stage enhances the video’s details by incorporating an additional brief text and improves the resolution to 1280×720.

To improve the diversity, they collected around 35 million single-shot text-video pairs and 6 billion text-image pairs to optimize the model. By this means, I2VGen-XL can simultaneously enhance the semantic accuracy, continuity of details, and clarity of generated videos.

Through extensive experiments, the authors investigate the underlying principles of I2VGen-XL and compare it with current top methods, demonstrating its effectiveness on diverse data.

1.7. Consistent4D: Consistent 360° Dynamic Object Generation from Monocular Video

In this paper, the authors present Consistent4D, a novel approach for generating 4D dynamic objects from uncalibrated monocular videos. Uniquely, they cast the 360-degree dynamic object reconstruction as a 4D generation problem, eliminating the need for tedious multi-view data collection and camera calibration.

This is achieved by leveraging the object-level 3D-aware image diffusion model as the primary supervision signal for training Dynamic Neural Radiance Fields (DyNeRF). Specifically, they propose a Cascade DyNeRF to facilitate stable convergence and temporal continuity under the supervision signal which is discrete along the time axis.

To achieve spatial and temporal consistency, the authors further introduce an Interpolation-driven Consistency Loss. It is optimized by minimizing the discrepancy between rendered frames from DyNeRF and interpolated frames from a pre-trained video interpolation model.
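The idea behind this loss can be sketched in a few lines. The example below assumes a squared-error discrepancy and uses a plain linear blend as a stand-in for the pre-trained video interpolation model. Both choices, along with all function names and pixel values, are illustrative assumptions rather than details taken from the paper.

```python
def linear_interpolate(frame_a, frame_b, t=0.5):
    """Stand-in for a pretrained video interpolation model: a plain linear blend."""
    return [(1.0 - t) * a + t * b for a, b in zip(frame_a, frame_b)]


def consistency_loss(rendered_mid, interpolated_mid):
    """Mean squared difference between a rendered frame and an interpolated frame."""
    diffs = [(r - i) ** 2 for r, i in zip(rendered_mid, interpolated_mid)]
    return sum(diffs) / len(diffs)


# Three rendered frames, each flattened to a short list of pixel intensities.
frame_0 = [0.0, 0.2, 0.4]
frame_1 = [0.1, 0.5, 0.6]   # rendered middle frame
frame_2 = [0.2, 0.4, 0.8]

# Interpolate between the outer frames and compare with the rendered middle frame.
target = linear_interpolate(frame_0, frame_2)
loss = consistency_loss(frame_1, target)
print(loss)
```

The loss is zero only when the rendered middle frame agrees with what the interpolator predicts from its neighbours, so minimizing it pushes the rendered sequence toward smooth motion.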

Extensive experiments show that Consistent4D performs competitively with prior alternatives, opening up new possibilities for 4D dynamic object generation from monocular videos, while also demonstrating an advantage for conventional text-to-3D generation tasks.

2. Vision-Language Models

2.1. CogVLM: Visual Expert for Pretrained Language Models

This paper introduces CogVLM, a powerful open-source visual language foundation model. Unlike the popular shallow alignment method, which maps image features into the input space of the language model, CogVLM bridges the gap between the frozen pretrained language model and the image encoder with a trainable visual expert module in the attention and FFN layers.
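One way to picture a visual expert is as a second set of projection weights that is applied only to image tokens, while text tokens keep using the frozen language-model weights. The sketch below illustrates that routing on toy vectors. It assumes this reading of the module, and the function names, weights, and token values are hypothetical, not CogVLM's actual implementation.

```python
def linear(vec, weight):
    """Apply a weight matrix (list of rows) to a vector."""
    return [sum(w * x for w, x in zip(row, vec)) for row in weight]


def route_projection(tokens, is_image, text_weight, image_weight):
    """Project each token with the weight matrix that matches its modality."""
    return [
        linear(tok, image_weight if img else text_weight)
        for tok, img in zip(tokens, is_image)
    ]


text_weight = [[1.0, 0.0], [0.0, 1.0]]    # stands in for the frozen language-model projection
image_weight = [[2.0, 0.0], [0.0, 2.0]]   # stands in for the trainable visual-expert projection

# A mixed sequence: image token, text token, image token.
tokens = [[1.0, 1.0], [1.0, 1.0], [0.5, 0.0]]
is_image = [True, False, True]

projected = route_projection(tokens, is_image, text_weight, image_weight)
print(projected)
```

Since text tokens never pass through the expert weights, a text-only input would be processed exactly as in the original language model, which is consistent with the claim that NLP performance is not sacrificed.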

As a result, CogVLM enables a deep fusion of vision-language features without sacrificing any performance on NLP tasks. CogVLM-17B achieves state-of-the-art performance on 10 classic cross-modal benchmarks, including NoCaps, Flickr30k captioning, RefCOCO, RefCOCO+, RefCOCOg, Visual7W, GQA, ScienceQA, VizWiz VQA, and TDIUC, and ranks second on VQAv2, OKVQA, TextVQA, COCO captioning, etc., surpassing or matching PaLI-X 55B.

2.2. CoVLM: Composing Visual Entities and Relationships in Large Language Models Via Communicative Decoding

A remarkable ability of human beings resides in compositional reasoning, i.e., the capacity to make “infinite use of finite means”. However, current large vision-language foundation models (VLMs) fall short of such compositional abilities due to their “bag-of-words” behaviors and inability to construct words that correctly represent visual entities and the relations among the entities.

To this end, this paper proposes CoVLM, which can guide the LLM to explicitly compose visual entities and relationships among the text and dynamically communicate with the vision encoder and detection network to achieve vision-language communicative decoding.

Specifically, they first devise a set of novel communication tokens for the LLM, for dynamic communication between the visual detection system and the language system.

A communication token is generated by the LLM following a visual entity or a relation, to inform the detection network to propose regions that are relevant to the sentence generated so far. The proposed regions of interest (ROIs) are then fed back into the LLM for better language generation contingent on the relevant regions.

The LLM is thus able to compose the visual entities and relationships through the communication tokens. The vision-to-language and language-to-vision communication are iteratively performed until the entire sentence is generated.
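The back-and-forth described above can be sketched as a simple decoding loop. Everything in this example is a toy stand-in: the token names, the scripted "language model", and the string-based "detector" are hypothetical and only illustrate the control flow, not CoVLM's actual components.

```python
COMM_TOKEN = "<comm>"   # hypothetical name for a communication token
END_TOKEN = "<eos>"

# Scripted stand-in for the LLM: the token it emits at each decoding step.
SCRIPT = ["a", "dog", COMM_TOKEN, "chasing", COMM_TOKEN, "a", "ball", END_TOKEN]


def toy_language_model(step):
    """Return the next token. A real model would condition on the full context."""
    return SCRIPT[step]


def toy_detector(words_so_far):
    """Stand-in for a detection network: propose a region for the latest word."""
    return "<roi:" + words_so_far[-1] + ">"


def communicative_decode(max_steps=20):
    """Alternate between generating words and requesting regions until the end token."""
    words, context = [], []
    for step in range(max_steps):
        token = toy_language_model(step)
        if token == END_TOKEN:
            break
        if token == COMM_TOKEN:
            # Language-to-vision: query the detector with the text so far.
            # Vision-to-language: append the proposed region to the context.
            context.append(toy_detector(words))
        else:
            words.append(token)
            context.append(token)
    return words, context


words, context = communicative_decode()
print(" ".join(words))
print(context)
```

In this toy run the context ends up interleaving words with region placeholders, which mirrors how the proposed regions are fed back into the LLM before generation continues.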

This framework bridges the gap between visual perception and LLMs and outperforms previous VLMs by a large margin on compositional reasoning benchmarks (e.g., ~20% in HICO-DET mAP, ~14% in Cola top-1 accuracy, and ~3% in ARO top-1 accuracy). CoVLM also achieves state-of-the-art performance on traditional vision-language tasks such as referring expression comprehension and visual question answering.

2.3. On the Road with GPT-4V(ision): Early Explorations of Visual-Language Model on Autonomous Driving

The pursuit of autonomous driving technology hinges on the sophisticated integration of perception, decision-making, and control systems. Traditional approaches, both data-driven and rule-based, have been hindered by their inability to grasp the nuance of complex driving environments and the intentions of other road users.

This has been a significant bottleneck, particularly in the development of common sense reasoning and nuanced scene understanding necessary for safe and reliable autonomous driving. The advent of Visual Language Models (VLMs) represents a novel frontier in realizing fully autonomous vehicle driving. This report provides an exhaustive evaluation of the latest state-of-the-art VLM, GPT-4V(ision), and its application in autonomous driving scenarios.

The authors explore the model’s abilities to understand and reason about driving scenes, make decisions, and ultimately act in the capacity of a driver. Their tests span from basic scene recognition to complex causal reasoning and real-time decision-making under varying conditions. The findings reveal that GPT-4V demonstrates superior performance in scene understanding and causal reasoning compared to existing autonomous systems.

It showcases the potential to handle out-of-distribution scenarios, recognize intentions, and make informed decisions in real driving contexts. However, challenges remain, particularly in direction discernment, traffic light recognition, vision grounding, and spatial reasoning tasks. These limitations underscore the need for further research and development.

3. Object Detection & Image Segmentation

3.1. NExT-Chat: An LMM for Chat, Detection and Segmentation

The development of large language models (LLMs) has greatly advanced the field of multimodal understanding, leading to the emergence of large multimodal models (LMMs). To enhance visual comprehension, recent studies have equipped LMMs with region-level understanding capabilities by representing object bounding box coordinates as a series of text sequences (pixel2seq).

In this paper, the authors introduce a novel paradigm for object location modeling called pixel2emb, in which the LMM outputs location embeddings that are then decoded by different decoders. This paradigm allows different location formats (such as bounding boxes and masks) to be used in multimodal conversations.

Furthermore, this kind of embedding-based location modeling enables the use of existing practices in localization tasks, such as detection and segmentation. In scenarios with limited resources, pixel2emb demonstrates superior performance compared to existing state-of-the-art (SOTA) approaches in both location input and output tasks under fair comparison. Leveraging the proposed pixel2emb method, the authors train an LMM named NExT-Chat and demonstrate its capability to handle multiple tasks such as visual grounding, region captioning, and grounded reasoning.

3.2. Video Instance Matting

Conventional video matting outputs one alpha matte for all instances appearing in a video frame, so individual instances are not distinguished. Video instance segmentation does provide time-consistent instance masks, but its results are unsatisfactory for matting applications, mainly because the masks are binarized.
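To see why binarization hurts matting, consider the standard compositing equation, in which each pixel is a blend of foreground and background weighted by alpha. The sketch below compares a soft alpha matte with its thresholded version on a toy row of pixels. The pixel values and the 0.5 threshold are assumptions chosen only for illustration.

```python
def composite(alpha, foreground, background):
    """Blend per pixel: alpha * foreground + (1 - alpha) * background."""
    return [a * f + (1.0 - a) * b for a, f, b in zip(alpha, foreground, background)]


def binarize(alpha, threshold=0.5):
    """Turn a soft alpha matte into a hard 0/1 mask, as a segmentation mask would be."""
    return [1.0 if a >= threshold else 0.0 for a in alpha]


# One row of four pixels crossing an object boundary (e.g. hair or motion blur).
alpha = [0.0, 0.25, 0.75, 1.0]
foreground = [1.0, 1.0, 1.0, 1.0]
background = [0.0, 0.0, 0.0, 0.0]

soft = composite(alpha, foreground, background)
hard = composite(binarize(alpha), foreground, background)
print(soft)
print(hard)
```

The soft matte keeps the gradual transition at the boundary, while the binarized mask snaps the two middle pixels to fully background or fully foreground, which is the kind of detail loss that per-instance alpha mattes are meant to avoid.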

To remedy this deficiency, the authors propose Video Instance Matting (VIM), that is, estimating the alpha mattes of each instance at each frame of a video sequence. To tackle this challenging problem, they present MSG-VIM, a Mask Sequence Guided Video Instance Matting neural network, as a novel baseline model for VIM.

MSG-VIM leverages a mixture of mask augmentations to make predictions robust to inaccurate and inconsistent mask guidance. It incorporates temporal mask and temporal feature guidance to improve the temporal consistency of alpha matte predictions. Furthermore, the authors build a new benchmark for VIM, called VIM50, which comprises 50 video clips with multiple human instances as foreground objects.

To evaluate performance on the VIM task, they introduce a metric called Video Instance-aware Matting Quality (VIMQ). MSG-VIM sets a strong baseline on the VIM50 benchmark and outperforms existing methods by a large margin. The project is open-sourced at https://github.com/SHI-Labs/VIM.

4. Scene Decomposition

4.1. EmerNeRF: Emergent Spatial-Temporal Scene Decomposition via Self-Supervision

The authors present EmerNeRF, a simple yet powerful approach for learning spatial-temporal representations of dynamic driving scenes. Grounded in neural fields, EmerNeRF simultaneously captures scene geometry, appearance, motion, and semantics via self-bootstrapping.

EmerNeRF hinges upon two core components. First, it stratifies scenes into static and dynamic fields. This decomposition emerges from self-supervision, enabling the model to learn from general, in-the-wild data sources. Second, EmerNeRF parameterizes an induced flow field from the dynamic field and uses this flow field to further aggregate multi-frame features, amplifying the rendering precision of dynamic objects.

Coupling these three fields (static, dynamic, and flow) enables EmerNeRF to represent highly dynamic scenes self-sufficiently, without relying on ground truth object annotations or pre-trained models for dynamic object segmentation or optical flow estimation. The method achieves state-of-the-art performance in sensor simulation, significantly outperforming previous methods when reconstructing static (+2.93 PSNR) and dynamic (+3.70 PSNR) scenes.
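For readers less familiar with the metric behind these numbers, PSNR (peak signal-to-noise ratio) is computed from the mean squared error between a reference image and a rendered one. The helper below is a minimal sketch of the standard definition. The function name and the toy pixel values are illustrative, and intensities are assumed to lie in the range 0 to 1.

```python
import math


def psnr(reference, rendered, max_value=1.0):
    """Peak signal-to-noise ratio in dB for two equally sized lists of pixel values."""
    mse = sum((r - x) ** 2 for r, x in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: every rendered pixel is off by 0.1.
reference = [0.0, 0.0, 0.0, 0.0]
rendered = [0.1, 0.1, 0.1, 0.1]
print(psnr(reference, rendered))
```

Because PSNR is logarithmic, a gain of about 3 dB corresponds to roughly halving the mean squared error, which gives a sense of the scale of the reported improvements.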

In addition, to bolster EmerNeRF’s semantic generalization, the authors lift 2D visual foundation model features into 4D space-time and address a general positional bias in modern Transformers, significantly boosting 3D perception performance (e.g., 37.50% relative improvement in occupancy prediction accuracy on average). Finally, they construct a diverse and challenging 120-sequence dataset to benchmark neural fields under extreme and highly dynamic settings.
