Large Language Model Selection Masterclass

The ultimate guide to picking an LLM in late 2025

This post is written by Marina Wyss

When you’re new to AI engineering, you probably just default to picking the newest LLM from the biggest provider. That must be the best one, right?

Not necessarily.

In late 2025, we now have dozens of different LLMs to choose from, and each one has distinct strengths. Some are great at coding, others excel at math, and some are specifically built for running on your own infrastructure.

Today, I want to walk you through how to actually pick the right model for your use case. We’ll cover what makes these models different from each other, the current landscape of options, and, most importantly, a framework for deciding which one to use when.

Let’s dive in.

What Actually Makes LLMs Different?

So before we start comparing models, we need to understand what actually makes one LLM different from another. Three main factors define a model’s capabilities and “personality.”

1. Architecture

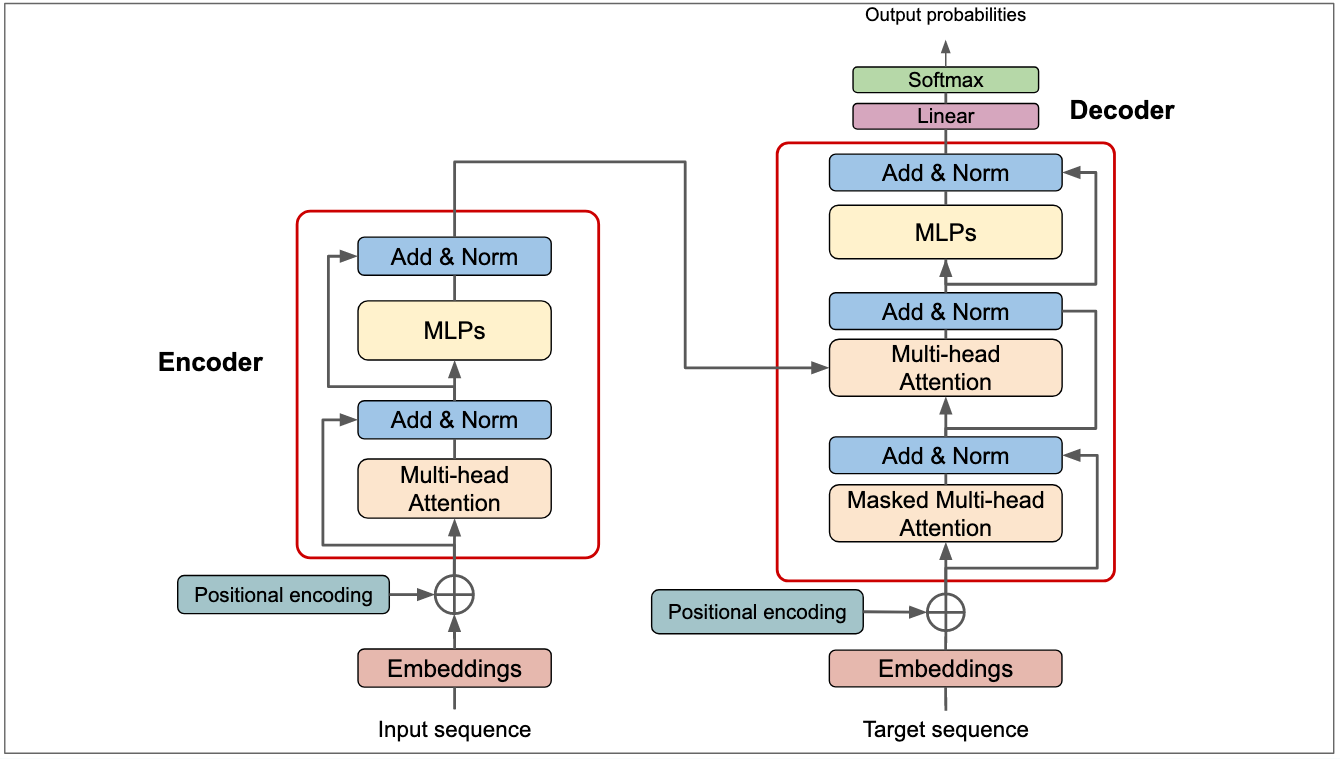

All modern LLMs are built with something called the Transformer architecture. This was the breakthrough that led to the most recent AI revolution. Basically, it processes entire sequences in parallel instead of word by word. The key is something called self-attention, where the model weighs the importance of different words in context. So it can understand complex relationships across really long passages of text.
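To make self-attention a bit more concrete, here is a minimal sketch in plain Python. It is a toy, not how any production model is implemented: real Transformers apply learned projection matrices to get queries, keys, and values, and run many attention heads in parallel. Here the raw token vectors are reused for all three roles, and the function names are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention for every position in the sequence."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # How relevant is each position to this one?
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors according to those weights.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs

# Three toy "token" vectors. Assumption: no learned projections, so the
# same vectors act as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Each output row is a weighted mix of every token in the sequence, which is the "weighing the importance of different words in context" part.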

But within that framework, there are some key variations you should know about.

The biggest one is Dense versus Mixture-of-Experts (or MoE for short).

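Dense models activate all of their parameters for every single input. Think of it like using your entire brain for every thought. Llama 3 is a well-known open example. GPT and Claude are often described as dense too, but OpenAI and Anthropic don’t publish their architectures, so treat that as unconfirmed.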

MoE models like Gemini, Mistral’s Mixtral family, and Llama 4 work differently. They selectively activate a few “expert” sub-networks for each token. So instead of waking up every neuron, a small gating network routes each token to the experts that score highest for it. This lets them scale to massive total parameter counts while keeping the actual compute per query much lower.
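Here is a rough sketch of the routing idea behind Mixture-of-Experts, assuming a simple top-k gate. The experts are stand-in functions and the gate weights are hand-picked for illustration. Real MoE layers use learned feed-forward experts and learned gates, and add extras such as load balancing that are skipped here.

```python
import math

def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Send input x through only the top_k highest-scoring experts."""
    # One gate score per expert (dot product of gate row and input).
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    chosen = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    # Renormalize over the chosen experts only.
    probs = softmax([logits[i] for i in chosen])
    output = [0.0] * len(x)
    for prob, idx in zip(probs, chosen):
        expert_out = experts[idx](x)  # the other experts never run
        output = [o + prob * e for o, e in zip(output, expert_out)]
    return output, chosen

# Hypothetical experts: each one just scales its input by a different factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_forward([1.0, 2.0], gate_weights, experts)
```

With four experts and `top_k=2`, only half of the expert compute runs for this input, even though all four experts count toward the model's total size.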

Then there’s GPT-5’s new approach. OpenAI introduced a router-based system that automatically switches between different models based on how complex your task is. So simple queries get handled by a fast model, and tough problems get routed to the deep reasoning model.
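OpenAI hasn’t published how its router decides, so the following is purely a hypothetical sketch of the general idea: score a prompt’s complexity, then pick a model tier. The keyword list, the scoring formula, the threshold, and the tier names are all assumptions made up for this example.

```python
# Hypothetical hints that a prompt needs multi-step reasoning.
REASONING_HINTS = ("prove", "step by step", "debug", "derive", "optimize")

def estimate_complexity(prompt: str) -> float:
    """Crude 0-to-1 complexity score from prompt length and keywords."""
    text = prompt.lower()
    length_score = min(len(text.split()) / 200, 1.0)
    hint_score = sum(hint in text for hint in REASONING_HINTS) / len(REASONING_HINTS)
    return 0.5 * length_score + 0.5 * hint_score

def route(prompt: str, threshold: float = 0.1) -> str:
    """Return a made-up tier name: 'fast-model' or 'reasoning-model'."""
    if estimate_complexity(prompt) >= threshold:
        return "reasoning-model"
    return "fast-model"

easy = route("What is the capital of France?")
hard = route("Prove step by step that the sum of two even numbers is even")
```

You can apply the same pattern in your own apps: send cheap, simple requests to a small model and save the expensive one for prompts that need it.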

DeepSeek has another approach to queries of different complexities. DeepSeek trains strong base models, then uses large-scale preference optimization to favor explicit multi-step reasoning. Most releases expose a “reasoning” endpoint (i.e., more steps for hard problems) and a “fast/lite” endpoint with lower latency for general chat.

Another big difference is context windows. This is basically how much text the model can “remember” at once. We’re seeing everything from 128,000 tokens on the low end, all the way up to 10 million tokens for Llama 4 Scout.
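A quick way to sanity-check whether a document fits a given context window is to estimate its token count. The sketch below assumes roughly 4 characters per token, which is a common rule of thumb for English text rather than an exact figure. For real numbers, use the tokenizer of the model you’re targeting. The function names and the reserved output budget are illustrative.

```python
import math

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate. Assumption: about 4 characters per token."""
    return math.ceil(len(text) / chars_per_token)

def fits_in_context(text: str, context_window: int, reserved_for_output: int = 1024) -> bool:
    """Check that the prompt plus room for the reply stays within the window."""
    return estimate_tokens(text) + reserved_for_output <= context_window

document = "word " * 200_000  # about 1,000,000 characters

fits_small = fits_in_context(document, context_window=128_000)
fits_large = fits_in_context(document, context_window=10_000_000)
```

Under that heuristic, this document comes out at about 250,000 tokens, so it overflows a 128,000-token window but fits easily in a 10-million-token one.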

Now, architecture tells you how the model processes information. But here’s what really determines how it thinks and what it knows…

2. Training data

This is probably the biggest differentiator in what models are good at.

For example, GPT-5 is trained on a massive, diverse mix of internet data, books, and academic papers. So it’s a good generalist. It can talk about pretty much anything.

Gemini, on the other hand, ingests trillions of text tokens, but also video frames and audio. That’s why it has such a strong native multimodal understanding.

Claude has a heavy focus on curated, high-quality code and structured documents. That’s part of why it’s so good at technical precision and following complex instructions.

Grok gets real-time access to the X platform data stream, so it’s pulling in current, unfiltered perspectives from what’s happening on X (formerly Twitter) right now.

Llama 4 was trained on text, images, and Meta’s social platforms, so you get this balanced capability across different modalities.

DeepSeek blends broad web text with heavy code, math, and bilingual (Chinese/English) sources. The mix makes it strong on symbolic manipulation and competitive on coding, while staying solid for general English use.

But even with the same training data, two models can behave completely differently. That’s because of what happens AFTER the initial training. This is the phase where models develop their actual personalities.

3. Alignment

Which leads us to the third factor: fine-tuning and alignment.

This is basically the specialization phase that happens after the initial training.

There are a few different processes here.

Supervised Fine-Tuning (or SFT) is where the model learns from curated instruction-response pairs. So you show examples like “Summarize this document,” followed by an ideal summary. This teaches the model how to follow instructions and handle specific tasks.
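As a sketch of what an SFT training example can look like, the snippet below builds one instruction-response pair and computes a loss only over the response tokens. Masking out the instruction is a common practice, not something every lab necessarily does. The whitespace “tokenizer” and the log-probabilities are placeholders, since a real setup would use the model’s own tokenizer and outputs.

```python
def build_sft_example(instruction: str, response: str):
    """Concatenate the pair and mark which tokens count toward the loss."""
    prompt_tokens = instruction.split()   # placeholder tokenizer
    response_tokens = response.split()
    tokens = prompt_tokens + response_tokens
    # 0 = ignore (instruction), 1 = train on (ideal response)
    loss_mask = [0] * len(prompt_tokens) + [1] * len(response_tokens)
    return tokens, loss_mask

def masked_nll(token_log_probs, loss_mask):
    """Average negative log-likelihood over the response tokens only."""
    picked = [-lp for lp, keep in zip(token_log_probs, loss_mask) if keep]
    return sum(picked) / len(picked)

tokens, loss_mask = build_sft_example(
    "Summarize this document:",
    "It covers model selection.",
)

# Made-up log-probabilities the model assigned to each token.
fake_log_probs = [-5.0, -5.0, -5.0, -1.0, -1.0, -1.0, -1.0]
loss = masked_nll(fake_log_probs, loss_mask)
```

The model is only graded on how well it reproduces the ideal summary, not on how well it predicts the instruction itself.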

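RLHF (Reinforcement Learning from Human Feedback) is where human reviewers rank multiple model outputs. Those rankings are typically used to train a separate reward model, and the LLM is then optimized with reinforcement learning to produce responses that the reward model scores highly. This is how you align the model’s behavior with human values and preferences.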
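In many RLHF pipelines, the human rankings first train a reward model, and one standard way to do that is a pairwise (Bradley-Terry style) loss. The sketch below shows only that loss, not the reinforcement learning step that follows. The reward scores are made-up numbers standing in for a reward model’s outputs.

```python
import math

def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """-log(sigmoid(r_chosen - r_rejected)), written as log(1 + exp(-margin))."""
    margin = reward_chosen - reward_rejected
    return math.log1p(math.exp(-margin))

# The reward model agrees with the human ranking: low loss.
agrees = pairwise_preference_loss(reward_chosen=2.0, reward_rejected=0.0)

# The reward model prefers the response humans rejected: high loss.
disagrees = pairwise_preference_loss(reward_chosen=0.0, reward_rejected=2.0)
```

Minimizing this loss pushes the reward model to score the human-preferred response higher than the rejected one.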

We also have DPO (Direct Preference Optimization), which is a newer, more stable alternative to RLHF. It optimizes directly on preference data without needing a separate reward model. It’s faster, uses less compute, and it’s being increasingly adopted in 2025.
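For comparison, here is a minimal sketch of the DPO loss for a single preference pair. Instead of a reward model, it compares how much the policy has shifted each response’s log-probability relative to a frozen reference model. The log-probabilities and the `beta` value below are illustrative numbers, not taken from any real training run.

```python
import math

def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair, given sequence log-probs from policy and reference."""
    chosen_shift = policy_chosen - ref_chosen        # how much more the policy likes the chosen answer
    rejected_shift = policy_rejected - ref_rejected  # same for the rejected answer
    margin = beta * (chosen_shift - rejected_shift)
    return math.log1p(math.exp(-margin))             # equals -log(sigmoid(margin))

# Policy identical to the reference: the loss sits at log(2).
baseline = dpo_loss(-10.0, -12.0, -10.0, -12.0)

# Policy now favors the chosen answer and disfavors the rejected one.
improved = dpo_loss(-8.0, -14.0, -10.0, -12.0)
```

The only inputs are log-probabilities from two copies of the LLM, which is why no separate reward model is needed.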

Different companies have very different alignment philosophies.

Anthropic uses something called Constitutional AI for Claude, which is where the model learns from a set of ethical principles. This makes Claude pretty cautious and safety-focused (sometimes overly so).

OpenAI’s approach with GPT-5 combines traditional RLHF with their new router system. The base models go through extensive human feedback loops focused on helpfulness and harmlessness, then the router layer adds another level of alignment by selecting the appropriate model complexity. This dual-layer approach aims to balance capability with safety across different task types.

On the other hand, xAI takes a different approach with Grok. Despite reportedly using about 10 times more reinforcement learning compute than its previous generation, xAI applies comparatively light content filtering. So you get a model that’s heavily shaped by compute-intensive RL training, but with fewer restrictions on what it will discuss. It’s more natural and unfiltered in its responses compared to Claude or GPT-5.

DeepSeek’s alignment stance targets correctness on math/logic and software tasks via preference-style optimization. It tends to be direct and not overly verbose.

The point is, these alignment choices have a huge impact on how the model actually responds to you. Claude might refuse something that GPT-5 would answer, and Grok might give you an unfiltered take that the others wouldn’t.

Now, before we look at specific models, there’s one more thing that matters even more than architecture, training data, or alignment when it comes to making practical decisions for your projects. And most beginners completely overlook it.

Open-source vs. Open-weight vs. Closed Models

Licensing. This is way more important than most people realize.

There’s this common misconception that “open” means the same thing across all models, but it’s actually way more confusing than that.

Let me break this down into three distinct categories.

First, closed API models. These are models where you call their cloud service, and the weights aren’t available to you at all. Model weights are basically the learned parameters — the numerical values that encode everything the model knows. With closed models, these stay locked up on the vendor’s servers. This includes big names like GPT-5, Claude, Gemini, and Grok. You’re basically renting access to the model through an API.
