Important LLM Papers for the Week From 30/06 to 05/07

Stay Updated with Recent Large Language Models Research

Large language models (LLMs) have advanced rapidly in recent years. As new generations of models are developed, researchers and engineers must stay informed about the latest progress.

This article summarizes some of the most important LLM papers published during the first week of July 2025. The papers cover various topics that shape the next generation of language models, including model optimization and scaling, reasoning, benchmarking, and performance enhancement.

Keeping up with novel LLM research across these domains will help guide continued progress toward models that are more capable, robust, and aligned with human values.

Table of Contents:

LLM Progress & Technical Reports

LLM Reasoning

Vision Language Models

Reinforcement Learning & Alignment

LLM Roadmap: From Beginner to Advanced - 2nd Edition

I am happy to announce that I have published the 2nd edition of the Complete LLM Roadmap: From Beginner to Advanced.

1. LLM Progress & Technical Reports

1.1. Kwai Keye-VL Technical Report

This paper introduces Kwai Keye-VL, an 8-billion-parameter multimodal foundation model from Kuaishou Group, designed specifically to excel at understanding short-form videos.

While current models handle static images well, they struggle with the dynamic, information-rich nature of videos. Keye-VL aims to bridge this gap, delivering state-of-the-art performance on video-related tasks while maintaining strong general-purpose vision-language capabilities.

The model’s success is built on two key pillars: a massive, high-quality video-centric dataset and an innovative, multi-stage training process.

Core Problem and Goal

The digital world is dominated by short-form videos (like those on Kuaishou or TikTok), but most Multimodal Large Language Models (MLLMs) are designed for images.

Understanding a video requires grasping a sequence of events, causality, and narrative, a much harder task than analyzing a single image. The goal of Keye-VL is to create a model that not only “sees” the dynamic world of video but also “thinks” about it, enabling more intelligent applications in content creation, recommendation, and e-commerce.

Key Innovations and Methodology

1. Massive Video-Centric Dataset: The model was trained on over 600 billion tokens, with a strong emphasis on high-quality video data. This data underwent a rigorous pipeline including filtering, re-captioning with advanced models, and frame-level annotation to ensure quality.

2. Four-Stage Pre-Training: This initial phase establishes a solid foundation for vision-language alignment, progressing from image-text matching to multi-task learning, and finally to an “annealing” phase with model merging to reduce bias.

3. Two-Phase Post-Training: This is where the model’s advanced reasoning is developed:

Phase 1: Foundational Performance: The model is fine-tuned for basic instruction following using supervised fine-tuning (SFT) and mixed preference optimization (MPO).

Phase 2: Reasoning Breakthrough: This is a core contribution. The model is trained on a unique “cold-start” data mixture with five components, four of which correspond to reasoning modes:

Thinking: For complex tasks requiring a step-by-step reasoning process (Chain-of-Thought).

Non-thinking: For simple questions requiring a direct answer.

Auto-think: Teaches the model to decide for itself whether a task requires deep reasoning or a quick response.

Think with image: An agentic mode where the model can write and execute code to manipulate or analyze images (e.g., cropping to count objects).

High-quality video data: To specialize in video understanding.

4. Reinforcement Learning (RL) and Alignment: After post-training, the model undergoes further RL to enhance its reasoning and correct undesirable behaviors like repetitive outputs.
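The annealing phase mentions model merging to reduce bias. A common way to merge checkpoints that share an architecture is to take a weighted average of their parameters (sometimes called a “model soup”). The sketch below assumes that form; the report’s exact merging recipe may differ, and parameters are plain Python lists standing in for real tensors.

```python
from typing import Dict, List, Optional

# Parameters modeled as name -> flat list of floats (a stand-in for real tensors).
Params = Dict[str, List[float]]


def merge_checkpoints(checkpoints: List[Params], weights: Optional[List[float]] = None) -> Params:
    """Weighted average of checkpoints that share parameter names and shapes."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    if weights is None:
        weights = [1.0 / len(checkpoints)] * len(checkpoints)  # uniform "soup"
    if len(weights) != len(checkpoints):
        raise ValueError("one weight per checkpoint")
    total = sum(weights)
    weights = [w / total for w in weights]  # normalize so weights sum to 1

    merged: Params = {}
    for name in checkpoints[0]:
        size = len(checkpoints[0][name])
        for ckpt in checkpoints:
            if len(ckpt[name]) != size:
                raise ValueError(f"shape mismatch for {name}")
        # Element-wise weighted sum across checkpoints.
        merged[name] = [
            sum(w * ckpt[name][i] for w, ckpt in zip(weights, checkpoints))
            for i in range(size)
        ]
    return merged


# Hypothetical checkpoints, e.g. from runs trained on different data mixtures.
a = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
b = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
print(merge_checkpoints([a, b]))  # {'layer.weight': [2.0, 3.0], 'layer.bias': [0.5]}
```

Uniform weights give a plain average; non-uniform weights let one checkpoint dominate, and the function normalizes them so they always sum to 1.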
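Mixed preference optimization (MPO), as commonly formulated, combines a preference loss, a quality loss, and a generation (SFT) loss. The sketch below is a simplified illustration that keeps only a DPO-style preference term and an SFT term and omits the quality term. The loss weights and `beta` are hypothetical, and the inputs are assumed to be summed token log-probabilities of each response under the policy and a frozen reference model.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(pi_chosen: float, pi_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """DPO preference loss from sequence log-probs under the policy (pi) and the reference."""
    margin = beta * ((pi_chosen - ref_chosen) - (pi_rejected - ref_rejected))
    return -log_sigmoid(margin)


def mixed_loss(pi_chosen: float, pi_rejected: float, ref_chosen: float, ref_rejected: float,
               chosen_len: int, w_pref: float = 0.8, w_gen: float = 1.0, beta: float = 0.1) -> float:
    """Hypothetical weighted mix: DPO term plus per-token NLL (SFT) on the chosen response.

    The quality term of full MPO is intentionally left out of this sketch.
    """
    pref = dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta)
    gen = -pi_chosen / chosen_len  # average negative log-likelihood per token
    return w_pref * pref + w_gen * gen


# Hypothetical log-probs: the policy has shifted toward the chosen response.
print(round(dpo_loss(-8.0, -12.0, -10.0, -12.0), 4))
```

When the policy matches the reference, the DPO term equals ln 2; it falls as the policy raises the chosen response’s likelihood, relative to the rejected one, beyond what the reference assigns.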

Performance and Evaluation

State-of-the-Art on Video Benchmarks: Keye-VL significantly outperforms other leading models of a similar scale on both public video benchmarks and a new internal benchmark, KC-MMBench, which is tailored to real-world short-video scenarios.

Competitive on Image Tasks: While its primary focus is video, the model remains highly competitive on general image-based understanding and reasoning tasks.

Superior User Experience: Human evaluations show that Keye-VL provides a better user experience than other top models.

Thinking vs. Auto-Think Modes: In Auto-Think mode, the model switches between deep “Thinking” and direct “Non-Thinking” responses, using reasoning only when necessary, which improves both efficiency and performance.

Conclusion and Future Work

Kwai Keye-VL is a powerful foundation model that pushes the state of the art in short-video understanding. Its success highlights the importance of high-quality, video-focused data and a sophisticated, multi-stage training regimen that explicitly teaches different reasoning styles.

While the model is highly capable, the paper identifies areas for improvement, including optimizing the video encoder, enhancing perceptual abilities, and developing more efficient reward models for alignment.


1.2. Ovis-U1 Technical Report

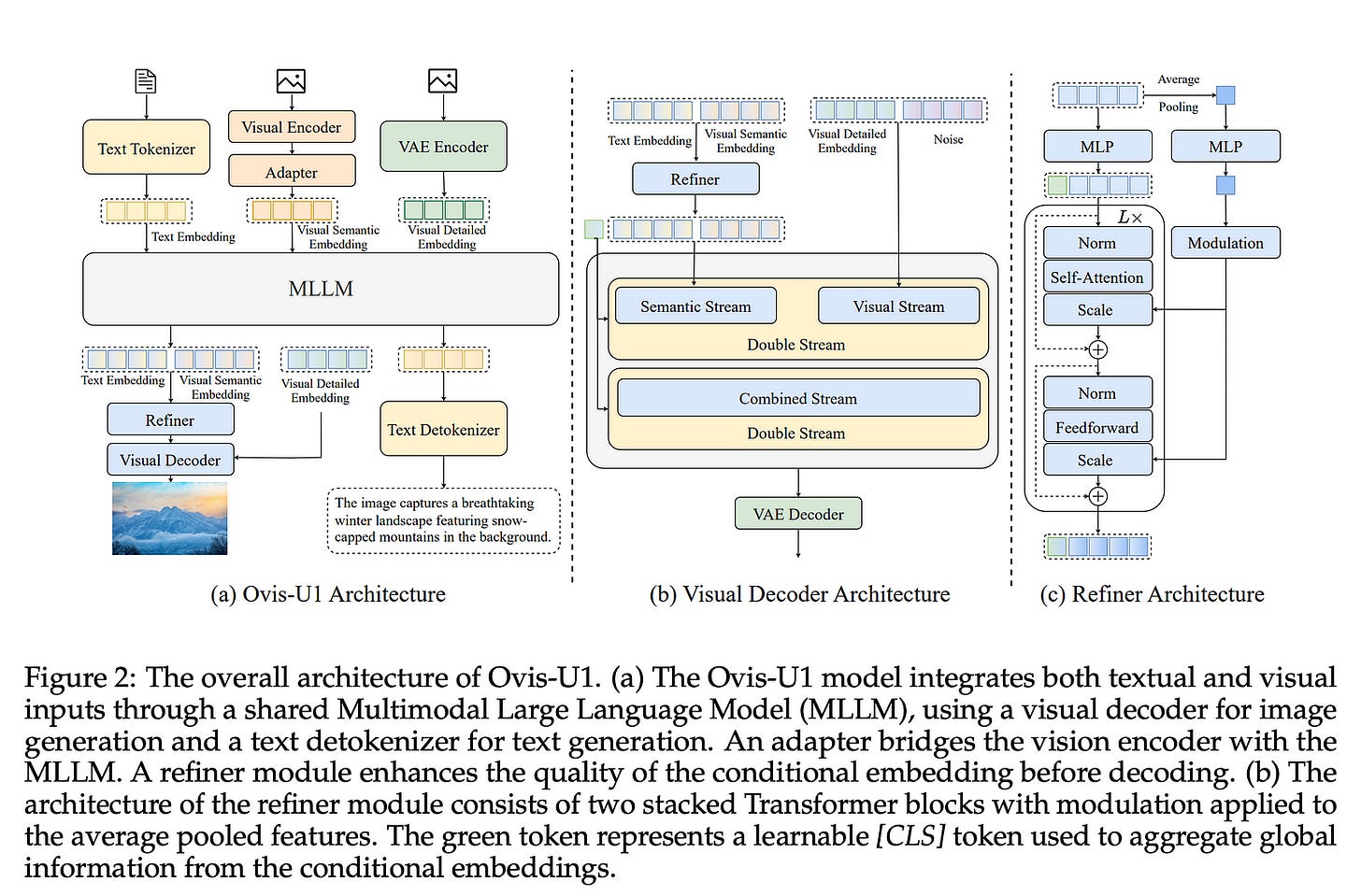

This technical report introduces Ovis-U1, a 3-billion-parameter, open-source multimodal AI model developed by Alibaba. Ovis-U1 is a “unified” model, meaning it can perform a wide range of tasks involving both text and images within a single framework.

It excels at multimodal understanding (analyzing images), text-to-image generation (creating images from text), and image editing (modifying images based on instructions). The report highlights that its strength comes from a novel architecture and a “unified training” approach, which collaboratively improves performance across all capabilities.

Core Capabilities

Ovis-U1 is designed to be a versatile, all-in-one vision-language tool. Its key functions, illustrated in the paper, include:

Image Understanding: The model can analyze an image and provide detailed descriptions, recognize and read text within it, and answer complex, context-based questions (e.g., answering riddles shown in an image).

Text-to-Image Generation: It can generate high-quality images from textual prompts, including creating scenes in specific artistic styles (e.g., “in the art style of Hayao Miyazaki”).

Image Editing: It can perform precise edits to an existing image based on natural language commands. This includes removing objects (e.g., “Remove the armchair”), replacing items (“Replace the yacht… with a hot air balloon”), or modifying specific text within an image (“Replace text ‘95’ with ‘123’”).

Key Technical Innovations

The paper attributes Ovis-U1’s strong performance to several key design choices:

Unified Architecture: Unlike models that bolt on separate components for different tasks, Ovis-U1 handles understanding, generation, and editing within one model. It uses a diffusion-based visual decoder for high-quality image generation and a novel bidirectional token refiner. This refiner enhances the interaction between text and image information, leading to more accurate and coherent outputs.

Unified Training: A core contribution is the training strategy. Instead of starting with a frozen, pre-trained multimodal model, Ovis-U1 begins with a language model and trains it jointly on a diverse mix of understanding, generation, and editing data. The authors show this collaborative approach leads to better performance than training on these tasks in isolation.

Progressive Training Pipeline: The model is trained through a carefully designed six-stage pipeline. This process progressively builds its capabilities, starting with basic image generation, then aligning the visual and text components, and finally fine-tuning for all tasks simultaneously.
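The bidirectional token refiner is described only at a high level here. Assuming “bidirectional” means non-causal self-attention, where every token can attend to every other token rather than only to earlier ones, the toy single-head attention below (identity projections, no learned weights) shows the difference. It illustrates the attention pattern only and is not Ovis-U1’s actual module.

```python
import math
from typing import List

Vector = List[float]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector], causal: bool) -> List[Vector]:
    """Single-head dot-product self-attention with identity projections (illustrative)."""
    d = len(tokens[0])
    outputs = []
    for i, q in enumerate(tokens):
        # Causal: token i sees tokens 0..i. Bidirectional: token i sees every token.
        visible = tokens[: i + 1] if causal else tokens
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in visible]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, visible)) for j in range(d)])
    return outputs


# Toy text-condition embeddings (hypothetical values).
text = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
causal_out = self_attention(text, causal=True)
bidir_out = self_attention(text, causal=False)
# Under causal attention the first token only sees itself, so it is unchanged;
# under bidirectional attention it also mixes in information from later tokens.
print(causal_out[0], bidir_out[0])
```

The last token sees the whole sequence under both masks, so its output is identical in the two cases; only earlier tokens gain extra context from bidirectional attention.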
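Unified training means understanding, generation, and editing examples are interleaved during training rather than learned in separate runs. A minimal sketch of such a mixed-task sampler follows; the task names mirror the text, but the sampling weights and example strings are hypothetical, since the report’s actual data ratios are not given here.

```python
import random
from collections import Counter
from typing import Dict, Iterator, List, Tuple


def mixed_task_stream(datasets: Dict[str, List[str]], weights: Dict[str, float],
                      seed: int = 0) -> Iterator[Tuple[str, str]]:
    """Endlessly yield (task, example) pairs, picking each step's task by its weight."""
    rng = random.Random(seed)
    tasks = list(datasets)
    probs = [weights[t] for t in tasks]
    cursors = {t: 0 for t in tasks}
    while True:
        task = rng.choices(tasks, weights=probs, k=1)[0]
        examples = datasets[task]
        yield task, examples[cursors[task] % len(examples)]  # cycle through each dataset
        cursors[task] += 1


# Hypothetical data and ratios for the three task families.
data = {
    "understanding": ["Describe this image.", "What does the sign say?"],
    "generation": ["A harbor at dusk in watercolor style."],
    "editing": ["Remove the armchair."],
}
stream = mixed_task_stream(data, {"understanding": 0.5, "generation": 0.3, "editing": 0.2})
counts = Counter(task for task, _ in (next(stream) for _ in range(1000)))
print(counts)
```

Sampling the task per step, instead of training on one task at a time, is what lets gradients from all three objectives shape the same shared weights throughout training.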

Performance and Benchmarks

Despite its relatively compact size (3B parameters), Ovis-U1 demonstrates state-of-the-art performance, surpassing many larger and more specialized models:

Understanding: It achieves a score of 69.6 on the OpenCompass Multi-modal Academic Benchmark, outperforming other models in the ~3B parameter range.

Generation: It scores 83.72 on DPG-Bench and 0.89 on GenEval, showing competitive text-to-image generation capabilities.

Editing: It achieves strong scores of 4.00 on ImgEdit-Bench and 6.42 on GEdit-Bench-EN.

Significance and Conclusion

Ovis-U1 represents a significant step forward in creating powerful, efficient, and versatile open-source multimodal models. Its success underscores the value of unified training, where different abilities are learned together to enhance each other. By open-sourcing the model, the Alibaba team aims to accelerate community research and development in this area.

Future plans include scaling the model to a larger size, curating better training data, and incorporating a reinforcement learning stage to further align it with human preferences.


1.3. SciArena: An Open Evaluation Platform for Foundation Models in Scientific Literature Tasks

This paper introduces SciArena, an open and collaborative platform for evaluating how well large language models (foundation models) perform on complex scientific literature tasks.

Inspired by the popular Chatbot Arena, SciArena allows real researchers to submit questions, compare two anonymous model-generated answers side-by-side, and vote for the better one.

The platform uses this community-driven feedback to rank models, providing a dynamic and realistic benchmark for a domain that requires deep expertise and literature-grounded responses.

The authors also release SciArena-Eval, a new benchmark dataset derived from these human votes, designed to test how well automated “LLM-as-a-judge” systems can replicate human expert preferences.

What is SciArena?

SciArena is a system with three main parts:

The Platform: Researchers ask real-world scientific questions (e.g., “What are the latest advancements in quantum computing?”). The system retrieves relevant scientific papers using a sophisticated RAG (Retrieval-Augmented Generation) pipeline and provides them to two randomly selected AI models. Each model generates a long-form, citation-backed answer. The researcher then votes for the better response (“A”, “B”, “Tie”, or “Both Bad”).

The Leaderboard: The platform collects votes (over 13,000 from 102 trusted researchers in the initial phase) and uses an Elo rating system to rank the 23 participating foundation models. This provides a continuously updated ranking of model performance on scientific tasks.

SciArena-Eval (A Meta-Benchmark): Using the collected human preference data, the authors created a benchmark to evaluate other evaluators. The task is to see if an AI judge can correctly predict which of two responses a human researcher preferred.
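The leaderboard is described as Elo-based. The sketch below shows the classic online Elo update applied to arena-style votes. How SciArena actually computes its ratings may differ (some arena leaderboards fit a Bradley-Terry model over all votes instead of updating online), and treating “Both Bad” as a tie is an assumption made here.

```python
from typing import Dict, List, Tuple

# Score for model A under each vote; mapping "Both Bad" to a tie is an assumption.
VOTE_SCORE = {"A": 1.0, "B": 0.0, "Tie": 0.5, "Both Bad": 0.5}


def expected_score(r_a: float, r_b: float) -> float:
    """Probability that A beats B under the Elo logistic model (base 10, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400))


def update_elo(ratings: Dict[str, float], model_a: str, model_b: str, vote: str,
               k: float = 32.0) -> None:
    """Apply one pairwise vote to the ratings in place (zero-sum update)."""
    e_a = expected_score(ratings[model_a], ratings[model_b])
    s_a = VOTE_SCORE[vote]
    ratings[model_a] += k * (s_a - e_a)
    ratings[model_b] += k * ((1.0 - s_a) - (1.0 - e_a))


def leaderboard(battles: List[Tuple[str, str, str]],
                initial: float = 1000.0) -> List[Tuple[str, float]]:
    """Replay (model_a, model_b, vote) records in order and return sorted ratings."""
    ratings: Dict[str, float] = {}
    for a, b, vote in battles:
        ratings.setdefault(a, initial)
        ratings.setdefault(b, initial)
        update_elo(ratings, a, b, vote)
    return sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical votes; model names are placeholders.
battles = [("model-x", "model-y", "A"), ("model-y", "model-z", "Tie"), ("model-x", "model-z", "A")]
print(leaderboard(battles))
```

Because the online update depends on vote order, batch fits such as Bradley-Terry are often preferred when the full vote log is available.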

Key Contributions and Findings

1. A Realistic, Community-Driven Benchmark: Unlike static benchmarks with predefined questions, SciArena captures the diverse and evolving needs of real researchers across fields like Natural Science, Healthcare, Engineering, and Humanities.

2. High-Quality Human Data: The paper demonstrates that the collected votes are reliable, with high inter-annotator agreement (experts agree with each other) and self-consistency (experts are consistent in their own judgments over time).

3. Model Performance Leaderboard:

The top-performing proprietary models are OpenAI’s o3 and Anthropic’s Claude-4-Opus.

The highest-ranking open-source model is DeepSeek-R1-0528.

Model performance varies significantly across different scientific domains and question types (e.g., conceptual explanation vs. methodology inquiry).

4. Analysis of User Preferences: Researchers on SciArena value substance over style. They show a clear preference for responses with correctly attributed and relevant citations, and are less influenced by superficial features like response length or a high number of citations, a key difference from general-purpose evaluation platforms.

5. Automated Evaluation Is Still a Major Challenge: The SciArena-Eval benchmark reveals the difficulty of this task. The best model-based judge (o3) achieved only 65.1% accuracy in predicting human preferences, significantly lower than what similar judges achieve on general-purpose benchmarks. This highlights the need for more robust automated evaluation methods for specialized, knowledge-intensive domains.
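SciArena-Eval scores a judge by how often its pick matches the researcher’s vote. A minimal sketch of that metric follows, assuming only decisive human votes (“A” or “B”) are scored and ties or “Both Bad” votes are skipped; the benchmark’s actual filtering may differ.

```python
from typing import List, Optional


def judge_accuracy(human_votes: List[str], judge_picks: List[str]) -> Optional[float]:
    """Fraction of decisive human votes ("A" or "B") that the judge reproduces."""
    if len(human_votes) != len(judge_picks):
        raise ValueError("one judge pick per human vote")
    decisive = [(h, j) for h, j in zip(human_votes, judge_picks) if h in ("A", "B")]
    if not decisive:
        return None  # nothing to score
    return sum(h == j for h, j in decisive) / len(decisive)


humans = ["A", "B", "Tie", "A", "B"]
judge = ["A", "A", "A", "A", "B"]
print(judge_accuracy(humans, judge))  # 0.75
```

With two options per pair, a judge guessing at random scores about 50% on decisive pairs, which puts the reported 65.1% in context.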
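The reliability claim in the list above rests on inter-annotator agreement. One standard statistic for this is Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. The paper’s exact statistic is not stated here, so this sketch illustrates one common choice rather than the authors’ procedure.

```python
from collections import Counter
from typing import List


def cohens_kappa(rater1: List[str], rater2: List[str]) -> float:
    """Cohen's kappa for two raters labeling the same items."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    c1, c2 = Counter(rater1), Counter(rater2)
    # Chance agreement: probability both raters pick the same label independently.
    expected = sum(c1[label] * c2[label] for label in set(c1) | set(c2)) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


r1 = ["A", "A", "B", "B"]
r2 = ["A", "A", "B", "A"]
print(cohens_kappa(r1, r2))  # 0.5
```

Here raw agreement is 75%, but half of all pairs would agree by chance given each rater’s label frequencies, so kappa comes out at 0.5.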

Significance and Impact

The SciArena project makes several important contributions to the field:

It provides a much-needed, dynamic, and open evaluation tool for the critical task of scientific literature synthesis.

By open-sourcing the collected human preference data and the SciArena-Eval benchmark, it enables further research into building foundation models that are better aligned with the needs of scientists.

It clearly demonstrates that evaluating models in expert domains is uniquely challenging and that current automated evaluation methods are not yet reliable enough to replace human expert judgment.