Important LLM Papers for the Week From 12/05 to 18/05

Stay Updated with Recent Large Language Models Research

Large language models (LLMs) have advanced rapidly in recent years. As new generations of models are developed, researchers and engineers need to stay informed on the latest progress.

This article summarizes some of the most important LLM papers published during the second week of May 2025. The papers cover topics shaping the next generation of language models, from model optimization and scaling to reasoning, benchmarking, and performance improvements.

Keeping up with novel LLM research across these domains will help guide continued progress toward models that are more capable, robust, and aligned with human values.

Table of Contents:

LLM Progress & Technical Reports

LLM Reasoning

LLM Training & Fine-Tuning

Vision Language Models

LLM Alignment & Post Training

LLM Evaluation

AI Agents

My New E-Book: LLM Roadmap from Beginner to Advanced Level

I am pleased to announce that I have published my new ebook LLM Roadmap from Beginner to Advanced Level. This ebook will provide all the resources you need to start your journey towards mastering LLMs.

1. LLM Progress & Technical Reports

1.1. Seed1.5-VL Technical Report

This technical report introduces Seed1.5-VL, a vision-language foundation model from ByteDance Seed, designed for general-purpose multimodal understanding and reasoning.

Key Features and Architecture:

1. Architecture: Composed of a 532M-parameter vision encoder (Seed-ViT) and a Mixture-of-Experts (MoE) LLM with 20B active parameters.

2. Seed-ViT (Vision Encoder):

Specifically designed for native-resolution feature extraction, supporting dynamic image resolutions and using 2D RoPE for positional encoding.

Undergoes a three-stage pre-training:

- Masked Image Modeling (MIM) with 2D RoPE.

- Native-Resolution Contrastive Learning (SigLIP + SuperClass loss).

- Omni-modal Pre-training (aligning video frames, audio, and captions using the MiCo framework).

3. Video Encoding: Employs “Dynamic Frame-Resolution Sampling,” which adjusts sampling frame rate and spatial resolution based on content complexity and task, prepending timestamp tokens to frames.

4. LLM Component: An internal pre-trained MoE LLM with ~20B active parameters, trained on trillions of text tokens.

Pre-training (VLM Stage):

1. Data:

3 trillion diverse, high-quality source tokens, categorized by target capabilities (generic image-text, OCR, visual grounding & counting, 3D spatial understanding, video, STEM, GUI).

Extensive data synthesis pipelines for OCR, charts, tables, 3D data, video captions/QA, STEM problems, and GUI interactions.

Strategies to handle long-tail distributions in generic image-text data (e.g., Max1k-46M).

2. Training Recipe (3 Stages):

Stage 0: Align the vision encoder with LLM by training only the MLP adapter.

Stage 1: All parameters trainable; focus on knowledge accumulation, visual grounding, and OCR using 3T tokens. Includes a small amount of text-only and instruction data.

Stage 2: More balanced data mixture, adding video, coding, 3D data. Increased sequence length (to 131,072). All parameters trainable.

3. Scaling Laws: Observes power-law relationship for training loss vs. tokens and log-linear relationship for training loss vs. downstream metrics in local neighborhoods.
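To make the power-law claim concrete, here is a minimal sketch of how such a relationship is typically fitted: a straight line in log-log space. The data points, the coefficient 5.0, and the exponent 0.1 are made up for illustration and do not come from the report; `fit_power_law` is a hypothetical helper name.

```python
import math

def fit_power_law(tokens, losses):
    """Least-squares fit of log(loss) = log(a) - b * log(tokens).

    Returns (a, b) such that loss is approximately a * tokens ** (-b).
    """
    xs = [math.log(t) for t in tokens]
    ys = [math.log(l) for l in losses]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Ordinary least-squares slope and intercept in log-log space.
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope

# Synthetic points that follow loss = 5 * tokens ** -0.1 exactly (illustrative only).
tokens = [1e9, 1e10, 1e11, 1e12]
losses = [5.0 * t ** -0.1 for t in tokens]
a, b = fit_power_law(tokens, losses)
```

On real training curves the fit would only hold approximately, and the report restricts the loss-to-metric relationship to local neighborhoods, so extrapolating far outside the fitted range is not supported by this sketch.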

Post-training:

An iterative process combining Supervised Fine-tuning (SFT) and Reinforcement Learning (RL).

1. SFT:

Dataset: ~50k high-quality multimodal samples + in-house text-only SFT data + LongCoT SFT data.

Data Construction: Crowdsourced initial data, augmented with community-sourced data, filtered by proprietary embedding models and LLM-as-a-judge, and refined using self-instruct and rejection sampling.

2. RLHF (Reinforcement Learning from Human Feedback):

Preference Data: List-wise multimodal preferences from human annotation (5-scale rating) and heuristic synthesis (for diverse prompts with clear ground-truths).

Reward Model: An instruction-tuned VLM prompted as a generative classifier to output preference, considering both orderings to mitigate positional bias. Iterative learning strategy for consistency.

3. RLVR (Reinforcement Learning with Verifiable Rewards):

For tasks with verifiable answers (Visual STEM, visual puzzles, grounding, perception games like “Spot the Differences”).

STEM verifier uses sympy; other tasks use rule-based or string-matching verifiers.

4. Hybrid Reinforcement Learning:

Combines RLHF and RLVR using a PPO variant.

Uses a shared critic, format rewards, and distinct KL coefficients for general vs. verifiable prompts.

5. Iterative Update by Rejection Sampling Fine-tuning:

The SFT model is improved iteratively by collecting correct responses from the latest RL model on challenging prompts (rejection sampling), which then forms the data for the next SFT iteration.
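The rejection-sampling step above can be sketched in a few lines. This is a toy illustration, not the report's pipeline: `toy_policy` stands in for the latest RL model, the verifier is a plain string match (the report also mentions sympy-based and other rule-based verifiers), and all function names are hypothetical.

```python
def string_match_verifier(response, reference):
    """Rule-based check: case-insensitive exact match on the final answer."""
    return response.strip().lower() == reference.strip().lower()

def collect_rft_data(prompts, sample_fn, num_samples=4):
    """Keep one verified (prompt, response) pair per prompt, if any sample passes."""
    kept = []
    for prompt, reference in prompts:
        for attempt in range(num_samples):
            response = sample_fn(prompt, attempt)
            if string_match_verifier(response, reference):
                kept.append((prompt, response))
                break  # one correct response per prompt is enough for this sketch
    return kept

def toy_policy(prompt, attempt):
    """Stand-in for sampling from the latest RL model (hard-coded answers)."""
    canned = {
        "2+2=?": ["5", "4"],
        "capital of France?": ["Lyon", "Rome", "Berlin", "Madrid"],
    }
    answers = canned[prompt]
    return answers[attempt % len(answers)]

prompts = [("2+2=?", "4"), ("capital of France?", "Paris")]
sft_batch = collect_rft_data(prompts, toy_policy)
```

Only the first prompt yields a verified response here, so only that pair would enter the next SFT round; prompts the model never solves are simply dropped.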

Training Infrastructure:

Large-Scale Pre-training: Hybrid parallelism (ZeRO for ViT/adapter, 4D parallelism for LLM), workload balancing for vision data, parallelism-aware data loading, fault tolerance (MegaScale, ByteCheckpoint).

Post-Training: Verl-based framework with a single controller for inter-RL-role dataflow and multi-controllers for intra-RL-role parallelism.

Evaluation:

1. Public Benchmarks (60 total):

Achieves SOTA on 38/60 benchmarks (21/34 vision-language, 14/19 video, 3/7 GUI agent).

Strong performance in multimodal reasoning (MathVista, V*, VLMs are Blind), general VQA (RealWorldQA), document/chart understanding (TextVQA, DocVQA), grounding/counting (BLINK, CountBench, FSC-147), and 3D spatial understanding.

Competitive in video understanding across short, long, and streaming video tasks.

2. Multimodal Agent Tasks: Outperforms OpenAI CUA and Claude 3.7 in GUI control (ScreenSpot, OSWorld, WebVoyager, AndroidWorld) and gameplay (14 Poki games).

3. Internal Benchmarks: A hierarchical benchmark with >100 tasks and >12k samples, focusing on core capabilities and mitigating overfitting. Seed1.5-VL ranks second overall against leading industry models, excelling in OOD, Agent, and Atomic Instruction Following.

Limitations:

Struggles with fine-grained visual perception (e.g., counting in complex, occluded scenes), complex spatial relationships, and some higher-level reasoning tasks (e.g., Klotski puzzles, combinatorial search).

Temporal reasoning across multiple images remains weak, and hallucination persists.

Seed1.5-VL demonstrates strong multimodal understanding and reasoning. Future work includes increasing model/data scale, improving 3D spatial reasoning, mitigating hallucination, and incorporating image generation capabilities (potentially enabling visual CoT) and tool-use.

Important Resources:

1.2. Bielik v3 Small: Technical Report

This paper introduces Bielik v3, a series of parameter-efficient generative text models (1.5B and 4.5B parameters) specifically optimized for the Polish language. The goal is to demonstrate that smaller, well-optimized models can achieve performance comparable to much larger models while using significantly fewer computational resources, making high-quality Polish AI more accessible.

Key Innovations and Techniques:

1. Model Adaptation and Scaling:

Models are adapted from Qwen2.5 1.5B and 3B architectures.

Depth Up-Scaling: Used to scale the models (e.g., the 4.5B model from the 3B Qwen2.5).

Custom Polish Tokenizer (APT4): Replaced the original Qwen tokenizer. APT4 significantly improves token efficiency for Polish. The FOCUS method was used to initialize the new token embeddings. The outermost layers were duplicated and trained first, with the rest of the model frozen, to adapt to the new embeddings.

Adaptive Learning Rate (ALR): Dynamically adjusts the learning rate based on training progress and context length.

2. Pre-training:

Data Corpus: Meticulously curated corpus of 292 billion tokens spanning 303 million documents, primarily Polish texts from SpeakLeash, supplemented with English texts (SlimPajama) and instruction data.

Data Cleanup & Quality Evaluation: Heuristics for removing corrupted/irrelevant content, anonymizing data, and resolving encoding issues. A quality classifier was developed (95% accuracy, 0.85 macro F1) using an expanded stylometric feature set (200 features) and XGBoost, categorizing documents into HIGH, MEDIUM, and LOW quality.

Category Classification: A text category classifier (LinearSVC with TF-IDF) was developed to assign documents to one of 120 predefined categories, ensuring thematic diversity.

3. Synthetic Data Generation for Instruction Tuning:

Uses high-quality, thematically balanced documents (P(HIGH) > 0.9) to generate QA instructions.

Data Recycling: Medium/borderline quality texts are refined using Bielik v2.3 before inclusion.

4. Post-training (Supervised Fine-Tuning & Preference Learning):

1. SFT:

Uses dialogue-style datasets with masked user instructions and control tokens (loss applied only to content).

Employs ALR.

Dataset of over 19 million Polish instructions (13B+ tokens), cleaned and augmented from Bielik 11B v2 release.

2. Preference Learning:

Evaluated DPO, DPO-P, ORPO, and Simple Preference Optimization (SimPO).

Found DPO-P to be most effective for Polish benchmarks.

Expanded Polish preference instruction dataset to 126,000 instructions, including function/tool calling and more reasoning/math.

3. Reinforcement Learning (RL):

Used Group Relative Policy Optimization (GRPO) on the Bielik-4.5B-v3 model with 12,000 Polish mathematical problems (RLVR).

Employed Volcano Engine Reinforcement Learning (VERL) framework.

4. Model Merging: Linear merging was the primary technique for improving model quality after SFT and RLHF stages.
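For readers unfamiliar with GRPO, the core idea used in the RL stage above is that each sampled answer is scored relative to the other answers for the same prompt, so no learned critic is needed. The sketch below shows only that normalization step; the reward values are invented, and the use of the population standard deviation is an assumption of this example.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-6):
    """Normalize rewards within one prompt's group of sampled responses."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    # eps keeps the division finite when every response gets the same reward.
    return [(r - mean) / (std + eps) for r in rewards]

# Four sampled solutions to one math problem: 1.0 = verified correct, 0.0 = wrong.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Correct answers receive positive advantages and wrong ones negative; if all answers in a group are equally right or wrong, every advantage is zero and that prompt contributes no gradient signal.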

5. Model Architecture Details (based on Transformer):

Self-attention with causal masks.

Grouped-query attention (GQA).

SwiGLU activation function.

Rotary Positional Embeddings (RoPE).

Root Mean Square Layer Normalization (RMSNorm).

Pre-normalization.
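Two of the building blocks listed above, RMSNorm and the SwiGLU feed-forward layer, are compact enough to write out in plain Python. This is a didactic sketch using lists instead of tensors; the weight matrices are tiny identity matrices chosen for illustration, not anything from the Bielik models.

```python
import math

def rms_norm(x, gain, eps=1e-6):
    """Scale x by the inverse of its root mean square, then by a learned gain."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [g * v / rms for v, g in zip(x, gain)]

def silu(v):
    """SiLU (swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: down( silu(gate(x)) * up(x) )."""
    gate = [silu(v) for v in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)

identity = [[1.0, 0.0], [0.0, 1.0]]
normed = rms_norm([3.0, 4.0], gain=[1.0, 1.0])  # pre-normalization step
out = swiglu_ffn(normed, identity, identity, identity)
```

In a pre-normalization block, `rms_norm` is applied to the input of each sub-layer (as here) rather than to its output.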

Evaluation and Results:

The Bielik v3 models were evaluated on several Polish benchmarks:

1. Open PL LLM Leaderboard:

Bielik-4.5B-v3 (base) scores 54.94, competitive with larger models like Qwen2.5-7B (53.35) and EuroLLM-9B (50.03).

Bielik-1.5B-v3 (base) scores 31.48, comparable to Qwen2.5-1.5B (31.83).

Bielik-4.5B-v3.0-Instruct scores 56.13, outperforming Qwen2.5-7B-Instruct (54.93).

Bielik-1.5B-v3.0-Instruct scores 41.36, exceeding Qwen2.5-3B-Instruct (41.23) with half the parameters.

2. Polish EQ-Bench (Emotional Intelligence): Bielik-4.5B-v3.0-Instruct scores 53.58, outperforming larger models like PLLuM-12B-chat (52.26).

3. Complex Polish Text Understanding Benchmark (CPTUB): Bielik-4.5B-v3.0-Instruct achieves an overall score of 3.38, outperforming phi-4 (3.30) and all PLLuM models. Shows strong implicature (3.68) and phraseology (3.67) handling.

4. Polish Medical Leaderboard:

Bielik-4.5B-v3.0-Instruct scores 43.55%, close to Bielik-11B-v2.5-Instruct (44.85%).

Bielik-1.5B-v3.0-Instruct scores 34.63%, outperforming Qwen2.5-1.5B-Instruct (32.64%).

5. Polish Linguistic and Cultural Competency Benchmark (PLCC):

Bielik-4.5B-v3.0-Instruct achieves 42.33%, outperforming Qwen2.5-14B (26.67%).

Models also evaluated on Open LLM Leaderboard (English), MixEval, and Berkeley Function-Calling Leaderboard, showing competitive small-model performance.

Bielik v3 models demonstrate that smaller, well-optimized architectures, leveraging innovations like custom tokenizers and adaptive learning rates, can achieve strong performance in less-resourced languages like Polish, rivaling or exceeding much larger models. This work establishes new benchmarks for parameter-efficient Polish language modeling.

Important Resources:

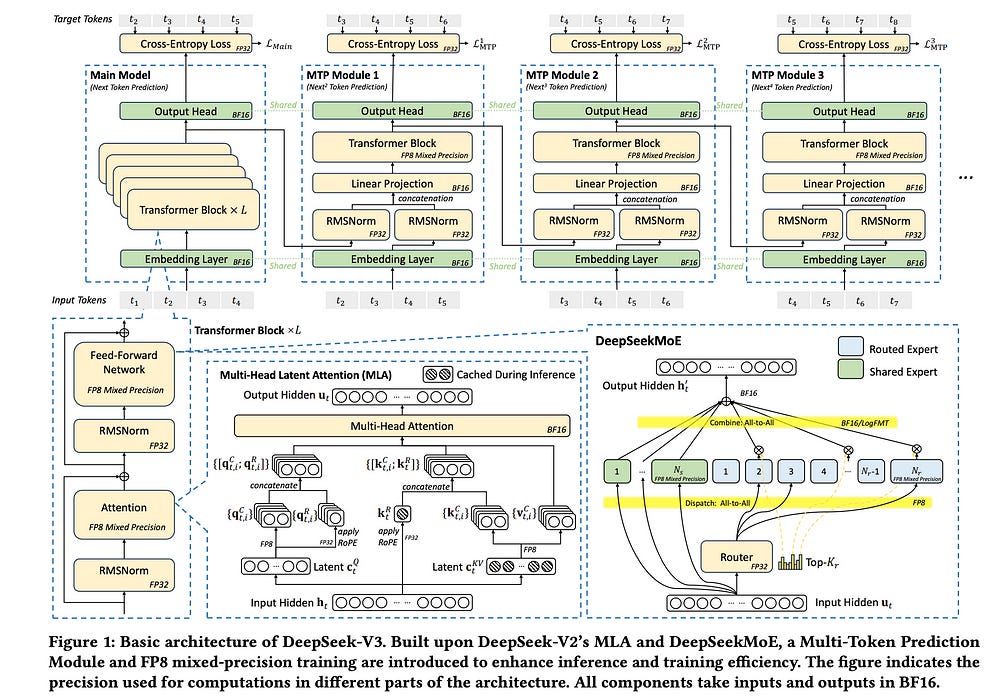

1.3. Insights into DeepSeek-V3: Scaling Challenges and Reflections on Hardware for AI Architectures

This paper, “Insights into DeepSeek-V3: Scaling Challenges and Reflections on Hardware for AI Architectures,” provides an in-depth analysis of the DeepSeek-V3/R1 large language model (LLM) and its AI infrastructure, focusing on how hardware-aware model co-design can address current limitations in hardware for AI. The authors share insights gained from training DeepSeek-V3 on 2,048 NVIDIA H800 GPUs.

Key Challenges in Current Hardware for LLMs:

Memory capacity constraints

Computational efficiency limitations

Interconnection bandwidth bottlenecks

DeepSeek-V3’s Hardware-Aware Co-Design Innovations:

1. Memory Efficiency:

Multi-head Latent Attention (MLA): Compresses Key-Value (KV) caches by projecting KV representations of all attention heads into a smaller latent vector. DeepSeek-V3 using MLA requires only 70 KB/token for KV cache, significantly less than GQA-based models like LLaMA-3.1 405B (516 KB) or Qwen-2.5 72B (327 KB).

Discusses other KV cache reduction techniques like GQA/MQA and Windowed KV.

Future directions: Linear-time attention (Mamba-2, Lightning Attention) and sparse attention.
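The per-token KV cache figures quoted above can be reproduced with simple arithmetic. The layer counts, head counts, and dimensions below are assumptions taken from the models' publicly released configurations, not from this summary, and the calculation assumes BF16 storage (2 bytes per value) with 1 KB = 1,000 bytes.

```python
def gqa_kv_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """GQA/MHA cache: one key and one value vector per KV head per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value

def mla_kv_bytes_per_token(num_layers, latent_dim, rope_dim, bytes_per_value=2):
    """MLA cache: one compressed latent plus a shared RoPE key per layer."""
    return num_layers * (latent_dim + rope_dim) * bytes_per_value

# Assumed configs: (layers, KV heads, head dim) for the GQA models;
# (layers, KV latent dim, RoPE key dim) for DeepSeek-V3.
llama_405b = gqa_kv_bytes_per_token(126, 8, 128)
qwen_72b = gqa_kv_bytes_per_token(80, 8, 128)
deepseek_v3 = mla_kv_bytes_per_token(61, 512, 64)

for name, size in [("LLaMA-3.1 405B", llama_405b),
                   ("Qwen-2.5 72B", qwen_72b),
                   ("DeepSeek-V3 (MLA)", deepseek_v3)]:
    print(f"{name}: {size / 1000:.1f} KB per token")
```

The saving comes from caching a single latent vector per layer instead of full keys and values for every KV head.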

2. Cost-Effectiveness & Computational Efficiency (Mixture of Experts, MoE):

DeepSeekMoE Architecture: Allows scaling total parameters (671B in V3) while activating only a subset per token (37B in V3), reducing training costs. Training DeepSeek-V3 costs ~250 GFLOPs per token (a count of operations, not a rate), much lower than comparable dense models.

Advantages for Personal/On-Premises Deployment: Reduced memory and compute demands enable running large MoE models on consumer hardware (e.g., DeepSeek-V3 on a server with one consumer GPU, achieving ~20 TPS).

Node-Limited Routing for TopK Expert Selection: In their H800 cluster (limited intra-node NVLink, more IB NICs), they co-designed expert routing to leverage higher intra-node bandwidth (NVLink) for some expert communication, reducing inter-node (IB) traffic and mitigating IB bottlenecks. Experts are grouped and deployed on single nodes, and tokens are routed to a limited number of nodes (up to 4).
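A minimal sketch of node-limited routing, under stated assumptions: experts are laid out contiguously by node, nodes are ranked by the sum of their best few expert scores, and the final top-k is taken only from the allowed nodes. The ranking rule and the toy sizes (4 nodes of 2 experts) are assumptions of this example, and `node_limited_topk` is a hypothetical name.

```python
def node_limited_topk(scores, experts_per_node, top_k, max_nodes):
    """Pick top_k experts for one token while touching at most max_nodes nodes."""
    num_nodes = len(scores) // experts_per_node
    per_node = max(1, top_k // max_nodes)  # experts per node used to rank nodes

    def node_range(n):
        return range(n * experts_per_node, (n + 1) * experts_per_node)

    # Score each node by the sum of its highest-affinity experts.
    node_scores = [
        sum(sorted((scores[e] for e in node_range(n)), reverse=True)[:per_node])
        for n in range(num_nodes)
    ]
    allowed = sorted(range(num_nodes), key=lambda n: node_scores[n], reverse=True)[:max_nodes]
    # Ordinary top-k, restricted to experts living on the allowed nodes.
    candidates = [e for n in allowed for e in node_range(n)]
    chosen = sorted(candidates, key=lambda e: scores[e], reverse=True)[:top_k]
    return sorted(chosen)

# Token-to-expert affinities for 8 experts on 4 nodes (made-up numbers).
scores = [0.9, 0.1, 0.5, 0.6, 0.2, 0.3, 0.8, 0.05]
restricted = node_limited_topk(scores, experts_per_node=2, top_k=2, max_nodes=1)
unrestricted = node_limited_topk(scores, experts_per_node=2, top_k=2, max_nodes=4)
```

Without the limit, the token's two best experts sit on two different nodes; with `max_nodes=1` both chosen experts share a node, so the token crosses the inter-node network only once.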

3. Inference Speed:

Overlapping Computation and Communication: Decoupling MLA and MoE computation into stages to overlap with all-to-all communication, maximizing throughput.

Multi-Token Prediction (MTP): Enables the model to generate and verify multiple candidate tokens in parallel, accelerating inference (e.g., 1.8x TPS increase with 80–90% acceptance for the second token).

High Inference Speed for Reasoning Models: Emphasizes the need for fast inference (high TPS) for test-time scaling in reasoning models (like DeepSeek-R1) and RL workflows.
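The MTP speed-up figure follows from a back-of-the-envelope expectation: if one extra token is drafted per step and accepted with probability p, each step emits 1 + p tokens on average. The sketch below generalizes this to several drafted tokens under the simplifying assumption that acceptances are independent; it ignores the extra compute of the MTP module, so it is an upper bound rather than a measured speed-up.

```python
def expected_tokens_per_step(acceptance_rate, num_draft_tokens=1):
    """Expected tokens emitted per decoding step.

    Draft token k only counts if all earlier drafts were accepted,
    which happens with probability acceptance_rate ** k.
    """
    return sum(acceptance_rate ** k for k in range(num_draft_tokens + 1))

low = expected_tokens_per_step(0.8)   # 80% acceptance of the second token
high = expected_tokens_per_step(0.9)  # 90% acceptance of the second token
```

With 80 to 90% acceptance this gives roughly 1.8 to 1.9 tokens per step, consistent with the reported 1.8x TPS gain.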

4. Low-Precision Driven Design:

FP8 Mixed-Precision Training: DeepSeek-V3 utilizes FP8, significantly reducing computational costs and memory. They developed an FP8-compatible training framework for MoE models and open-sourced DeepGEMM for fine-grained FP8 GEMM.

LogFMT (Logarithmic Floating-Point Formats): A custom data type tested for communication compression. While showing superior accuracy to FP8 at the same bit-width (e.g., LogFMT-8Bit vs. E4M3/E5M2), its practical deployment was hindered by GPU overhead for log/exp operations and register pressure during encode/decode.
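To see why fine-grained scaling matters for FP8, consider a crude simulation: values are scaled so the largest one in a group maps to the FP8 maximum, rounded, and scaled back. With one scale for the whole tensor, a few outliers push small values below the representable range. The rounding function here only approximates E4M3 (4 significant bits, subnormals down to 2^-9, saturation at 448), the data is invented, and the block size of 128 is an illustrative choice.

```python
import math

E4M3_MAX = 448.0                # largest finite E4M3 value
E4M3_MIN_SUBNORMAL = 2.0 ** -9  # smallest positive E4M3 value

def fake_e4m3(x):
    """Approximate E4M3 rounding; not bit-exact with real hardware."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    m, e = math.frexp(a)  # a = m * 2**e with 0.5 <= m < 1
    if e < -5:            # below the smallest normal value 2**-6
        q = round(a / E4M3_MIN_SUBNORMAL) * E4M3_MIN_SUBNORMAL
    else:
        q = math.ldexp(round(m * 16) / 16, e)  # keep 4 significant bits
    return sign * min(q, E4M3_MAX)

def quant_dequant(values, block_size):
    """Quantize with one scale per block, then map back to real values."""
    out = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block) or 1.0
        scale = E4M3_MAX / amax
        out.extend(fake_e4m3(v * scale) / scale for v in block)
    return out

def mean_rel_error(values, approx):
    return sum(abs(a - v) / abs(v) for v, a in zip(values, approx)) / len(values)

small = [0.001, -0.002, 0.0015, 0.003] * 32  # 128 small values
large = [1000.0, -750.0, 500.0, 250.0] * 32  # 128 large values (outliers)
data = small + large

per_tensor_err = mean_rel_error(data, quant_dequant(data, block_size=len(data)))
per_block_err = mean_rel_error(data, quant_dequant(data, block_size=128))
```

In this toy setup the per-tensor scale flushes most small values to zero, while per-block scales keep the error within a few percent.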

5. Network Co-Design:

Multi-Plane Fat-Tree (MPFT): Deployed an 8-plane, 2-layer fat-tree for their scale-out network, offering cost and latency advantages over 3-layer fat-trees and good scalability, robustness, and traffic isolation. Each GPU-NIC pair belongs to a distinct network plane.

Performance Analysis: MPFT shows similar all-to-all and EP communication performance to single-plane multi-rail fat-trees (MRFT) due to NCCL’s PXN. Training throughput for DeepSeek-V3 on 2048 GPUs was nearly identical between MPFT and MRFT.

Low Latency Networks: Explores IB vs. RoCE, finding IB has lower latency. Recommends RoCE improvements like specialized switches, optimized routing (e.g., Adaptive Routing), and better congestion control.

InfiniBand GPUDirect Async (IBGDA): Advocated for its use to reduce latency by allowing GPUs to directly manage RDMA control plane.
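The scalability argument for a multi-plane two-layer fat-tree can be checked with the standard fat-tree counting formulas. The 64-port switch radix below is an assumption of this sketch (it is not stated in the summary above), and the function names are hypothetical.

```python
def two_layer_fat_tree(ports):
    """Non-blocking leaf-spine: each leaf uses half its ports for endpoints."""
    endpoints = ports * ports // 2
    switches = ports + ports // 2  # `ports` leaves plus `ports / 2` spines
    return endpoints, switches

def three_layer_fat_tree(ports):
    """Classic k-ary fat-tree: k^3 / 4 endpoints, 5 k^2 / 4 switches."""
    return ports ** 3 // 4, 5 * ports * ports // 4

def multi_plane_fat_tree(ports, planes):
    """Independent two-layer planes, one per GPU-NIC pair in a node."""
    endpoints, switches = two_layer_fat_tree(ports)
    return endpoints * planes, switches * planes

ft2 = two_layer_fat_tree(64)
mpft = multi_plane_fat_tree(64, planes=8)
ft3 = three_layer_fat_tree(64)

for name, (endpoints, switches) in [("FT2", ft2), ("MPFT", mpft), ("FT3", ft3)]:
    print(f"{name}: {endpoints} endpoints, {switches} switches, "
          f"{switches / endpoints:.4f} switches per endpoint")
```

Under these assumptions, eight planes reach 16,384 endpoints with fewer switches per endpoint than a three-layer tree, and every path stays within two switch layers.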

Reflections and Future Hardware Directions:

FP8 Support: Need for increased accumulation precision (e.g., FP32) and native hardware support for fine-grained quantization in Tensor Cores.

Communication Compression: Native hardware support for LogFMT or other custom compression/decompression units.

Interconnect Convergence (Scale-up & Scale-out):

- Unified network adapters, dedicated communication co-processors (I/O dies), flexible forwarding/broadcast/reduce mechanisms.

- Hardware synchronization primitives for memory-semantic communication.

Bandwidth Contention & Latency: Dynamic NVLink/PCIe traffic prioritization, I/O die chiplet integration for NICs, CPU-GPU interconnects using NVLink-like fabrics.

CPU Bottlenecks: Need for direct CPU-GPU interconnects, high memory bandwidth for CPUs, high single-core CPU performance, and sufficient cores per GPU.

Intelligent Networks: Co-packaged optics, lossless networks with advanced congestion control, adaptive routing, efficient fault-tolerant protocols, dynamic resource management.

Memory-Semantic Communication: Hardware support for ordering guarantees (e.g., Region Acquire/Release mechanism).

In-Network Computation/Compression: For EP dispatch (multicast) and combine (reduction), native LogFMT support.

Memory-Centric Innovations: DRAM-stacked accelerators (e.g., SeDRAM), System-on-Wafer (SoW).

Overall, the paper argues that the co-design of hardware and LLM architectures, as exemplified by DeepSeek-V3, is crucial for achieving cost-efficient scaling and unlocking the full potential of AI. It provides a practical blueprint and valuable lessons for future innovations in AI systems.

Important Resources:

2. LLM Reasoning

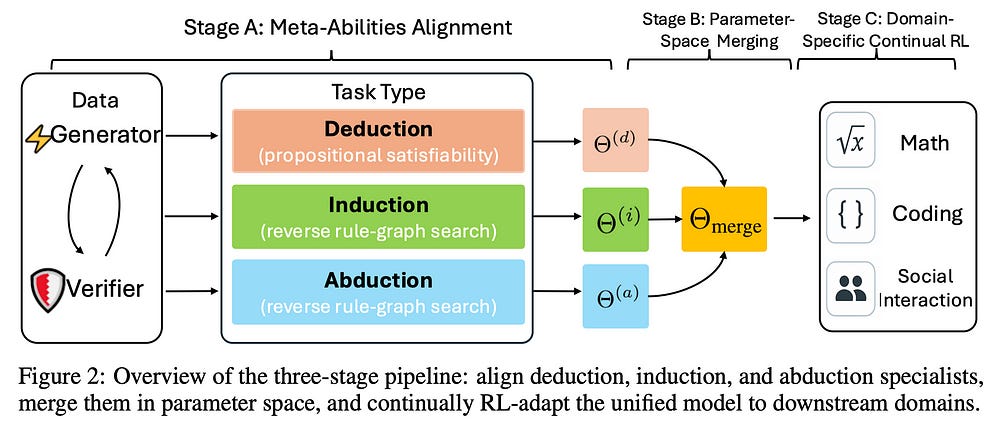

2.1. Beyond ‘Aha!’: Toward Systematic Meta-Abilities Alignment in Large Reasoning Models

This paper proposes a method to move beyond relying on unpredictable “aha moments” (emergent advanced reasoning like self-correction) in Large Reasoning Models (LRMs) and instead explicitly align them with three fundamental, domain-general reasoning meta-abilities: deduction, induction, and abduction. The authors argue that while outcome-based RL can incidentally elicit these behaviors, their inconsistency limits the scalability and reliability of LRMs.

The core idea is to instill these meta-abilities systematically using automatically generated, self-verifiable synthetic tasks, and then leverage this foundation for improved downstream reasoning.

The Three Meta-Abilities (based on Peirce’s inference triad):

Deduction (H + R → O): Inferring specific outcomes (O) from general rules (R) and hypotheses (H). Implemented as propositional satisfiability tasks.

Induction (H + O → R): Abstracting rules (R) from repeated observations (O) and hypotheses/partial inputs (H). Implemented as masked-sequence completion.

Abduction (O + R → H): Inferring the most plausible explanation/hypothesis (H) for surprising observations (O) given a set of rules (R). Implemented as a reverse rule-graph search.
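What makes these tasks "self-verifiable" is that a proposed answer can be checked mechanically. As a rough illustration for the deduction task, the sketch below checks a truth assignment against a propositional formula in conjunctive normal form and turns the result into a binary reward. The clause encoding (positive or negative integers for literals) and the function names are choices of this example, not the paper's task format.

```python
from itertools import product

def satisfies(clauses, assignment):
    """True if every clause has at least one literal made true.

    A literal 3 means x3, and -3 means NOT x3; assignment maps index -> bool.
    """
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )

def brute_force_solutions(clauses, num_vars):
    """Enumerate all satisfying assignments (fine for small generated tasks)."""
    solutions = []
    for bits in product([False, True], repeat=num_vars):
        assignment = {i + 1: bit for i, bit in enumerate(bits)}
        if satisfies(clauses, assignment):
            solutions.append(assignment)
    return solutions

def rule_based_reward(clauses, model_answer):
    """1.0 if the model's assignment satisfies the formula, else 0.0."""
    return 1.0 if satisfies(clauses, model_answer) else 0.0

# (x1 OR x2) AND (NOT x1 OR x3) AND (NOT x2 OR NOT x3)
clauses = [[1, 2], [-1, 3], [-2, -3]]
solutions = brute_force_solutions(clauses, num_vars=3)
good = rule_based_reward(clauses, {1: True, 2: False, 3: True})
bad = rule_based_reward(clauses, {1: True, 2: True, 3: True})
```

Because the checker needs no human labels, tasks of this kind can be generated and graded at scale, which is what the RL stage relies on.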

The Proposed Three-Stage Pipeline:

1. Stage A: Meta-Abilities Alignment (Individual Alignment):

Three separate specialist models are trained using RL (critic-free REINFORCE++ with rule-based rewards) on large-scale, automatically generated synthetic diagnostic tasks, each targeting one of the three meta-abilities (deduction, induction, abduction).

These tasks are designed to be out-of-distribution relative to common pretraining data to ensure genuine meta-ability acquisition.

2. Stage B: Parameter-Space Merging:

The parameters of the three specialist models are linearly interpolated (merged) to create a single unified model.

The authors find that merging improves performance over individual specialists and vanilla instruction-tuned baselines, indicating complementary strengths. Optimal merging weights are found empirically.

3. Stage C: Domain-Specific Reinforcement Learning:

The merged model from Stage B is used as a stronger starting point for domain-specific RL (e.g., on math tasks using GRPO, similar to SimpleRL-Zoo).

This stage aims to show that prior meta-ability alignment raises the performance ceiling for subsequent specialized learning.
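The merging step in Stage B amounts to a weighted sum of the specialists' parameters. A minimal sketch, with parameters stored as plain lists and made-up merging weights (the paper finds its weights empirically; the values below are purely illustrative):

```python
def merge_state_dicts(state_dicts, weights):
    """Weighted sum of same-shaped parameter dicts, one weight per model."""
    merged = {}
    for name in state_dicts[0]:
        size = len(state_dicts[0][name])
        merged[name] = [
            sum(w * sd[name][i] for sd, w in zip(state_dicts, weights))
            for i in range(size)
        ]
    return merged

# Toy "specialists" with a single two-element parameter each.
deduction = {"layer.weight": [1.0, 2.0]}
induction = {"layer.weight": [3.0, 0.0]}
abduction = {"layer.weight": [-1.0, 4.0]}

merged = merge_state_dicts(
    [deduction, induction, abduction],
    weights=[0.5, 0.25, 0.25],  # hypothetical weights for illustration
)
```

No gradient updates are involved in this step, which is why merging is cheap compared with training a single model on all three task types.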

Key Contributions and Findings:
