Important Computer Vision Papers for the Week from 09/09 to 15/09

Stay Updated with Recent Computer Vision Research

Every week, researchers from top research labs, companies, and universities publish exciting breakthroughs in various topics such as diffusion models, vision language models, image editing and generation, video processing and generation, and image recognition.

This article provides a comprehensive overview of the most significant papers published in the second week of September 2024, highlighting the latest research and advancements in computer vision.

Whether you’re a researcher, practitioner, or enthusiast, this article will provide valuable insights into the state-of-the-art techniques and tools in computer vision.

Table of Contents:

Diffusion Models

Vision Language Models (VLMs)

Video Understanding & Generation

Text to Image Generation

My New E-Book: Prompt Engineering Best Practices for Instruction-Tuned LLM

I am happy to announce that I have published a new e-book, Prompt Engineering Best Practices for Instruction-Tuned LLM. It is a comprehensive guide designed to equip readers with the essential knowledge and tools to master fine-tuning and prompt engineering for large language models (LLMs). The book covers everything from foundational concepts to advanced applications, making it an invaluable resource for anyone interested in leveraging the full potential of instruction-tuned models.

1. Diffusion Models

1.1. Hi3D: Pursuing High-Resolution Image-to-3D Generation with Video Diffusion Models

Despite tremendous progress in image-to-3D generation, existing methods still struggle to produce multi-view-consistent images with detailed, high-resolution textures, especially in the 2D diffusion paradigm, which lacks 3D awareness.

In this work, we present a high-resolution image-to-3D model (Hi3D), a new video diffusion-based paradigm that reformulates single-image-to-multi-view generation as 3D-aware sequential image generation (i.e., orbital video generation).

This approach exploits the temporal-consistency knowledge already present in the video diffusion model, which generalizes well to geometric consistency across multiple views in 3D generation.

Technically, Hi3D first equips the pre-trained video diffusion model with a 3D-aware prior (a camera pose condition), yielding multi-view images with low-resolution texture details.

A 3D-aware video-to-video refiner is then learned to further upscale the multi-view images and add high-resolution texture details. These high-resolution multi-view images are further augmented with novel views through 3D Gaussian Splatting, and the augmented set is finally used to obtain high-fidelity meshes via 3D reconstruction.
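To make the "orbital video" framing concrete: each generated frame corresponds to one camera position on a circle around the object. The snippet below is only a minimal sketch of that idea. The function name, the fixed radius and elevation, the y-up convention, and the even azimuth spacing are all assumptions made for illustration, not the camera parameterization used by Hi3D.

```python
import math

def orbital_camera_positions(num_views, radius=2.0, elevation_deg=10.0):
    """Return (x, y, z) camera positions evenly spaced on an orbit around the origin.

    Hypothetical setup: the object sits at the origin and y points up.
    """
    elev = math.radians(elevation_deg)
    positions = []
    for i in range(num_views):
        # Evenly spaced azimuth angles cover one full turn around the object.
        azim = 2.0 * math.pi * i / num_views
        x = radius * math.cos(elev) * math.sin(azim)
        y = radius * math.sin(elev)
        z = radius * math.cos(elev) * math.cos(azim)
        positions.append((x, y, z))
    return positions

# One position per frame of a 16-frame orbit (frame count chosen arbitrarily).
views = orbital_camera_positions(num_views=16)
```

Every position lies at the same distance from the object, so consecutive frames differ only by a small rotation, which is the kind of smooth change a video model is trained to handle.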

Extensive experiments on both novel view synthesis and single-view reconstruction demonstrate that Hi3D produces multi-view-consistent images with highly detailed textures and outperforms existing methods.

2. Vision Language Models (VLMs)

2.1. POINTS: Improving Your Vision-language Model with Affordable Strategies

In recent years, vision-language models have made significant strides, excelling in tasks like optical character recognition and geometric problem-solving. However, several critical issues remain:

Proprietary models often lack transparency about their architectures, while open-source models need more detailed ablations of their training strategies.

Pre-training data in open-source works is under-explored, with datasets added empirically, making the process cumbersome.

Fine-tuning often focuses on adding datasets, leading to diminishing returns.

To address these issues, we propose the following contributions:

We trained a robust baseline model using the latest advancements in vision-language models, introducing effective improvements and conducting comprehensive ablation and validation for each technique.

Inspired by recent work on large language models, we filtered pre-training data using perplexity, selecting the lowest perplexity data for training. This approach allowed us to train on a curated 1M dataset, achieving competitive performance.

During visual instruction tuning, we used model soup on different datasets when adding more datasets yielded marginal improvements.

These innovations resulted in a 9B parameter model that performs competitively with state-of-the-art models. Our strategies are efficient and lightweight, making them easily adoptable by the community.
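Two of the strategies above, perplexity-based data filtering and model soup, are simple enough to sketch in a few lines. The code below is a toy illustration under stated assumptions, not the POINTS implementation: the function names are hypothetical, the per-token log-probabilities are assumed to come from some reference language model that is not shown, the kept fraction is arbitrary, and the soup is the plain uniform weight average.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(-mean log-probability) over one sample's tokens."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

def keep_lowest_perplexity(samples, keep_fraction):
    """Return the ids of the samples with the lowest perplexity.

    `samples` maps a sample id to its per-token log-probabilities
    (assumed to be scored by a reference model elsewhere).
    """
    ranked = sorted(samples, key=lambda sid: perplexity(samples[sid]))
    keep = max(1, int(len(ranked) * keep_fraction))
    return ranked[:keep]

def uniform_soup(checkpoints):
    """Average parameters element-wise across checkpoints (uniform model soup).

    Each checkpoint is a dict mapping a parameter name to a list of floats.
    """
    n = len(checkpoints)
    return {
        name: [sum(vals) / n for vals in zip(*(ckpt[name] for ckpt in checkpoints))]
        for name in checkpoints[0]
    }

# Toy pre-training pool: higher (less negative) log-probs mean lower perplexity.
samples = {
    "a": [-0.1, -0.2, -0.1],
    "b": [-2.0, -1.5, -2.5],
    "c": [-0.5, -0.4, -0.6],
    "d": [-3.0, -3.5, -2.0],
}
kept = keep_lowest_perplexity(samples, keep_fraction=0.5)  # ["a", "c"]

# Toy checkpoints standing in for models fine-tuned on different datasets.
ckpt_1 = {"w": [1.0, 2.0], "b": [0.0]}
ckpt_2 = {"w": [3.0, 4.0], "b": [1.0]}
soup = uniform_soup([ckpt_1, ckpt_2])  # {"w": [2.0, 3.0], "b": [0.5]}
```

Averaging weights yields a single model with the same inference cost as any one checkpoint, which fits the "efficient and lightweight" framing above.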

3. Video Understanding & Generation

3.1. PiTe: Pixel-Temporal Alignment for Large Video-Language Model

Fueled by the Large Language Models (LLMs) wave, Large Visual-Language Models (LVLMs) have emerged as a pivotal advancement, bridging the gap between image and text.

However, video makes it challenging for LVLMs to perform adequately due to the complexity of the relationship between language and spatial-temporal data structure.

Recent Large Video-Language Models (LVidLMs) align the features of static visual data, such as images, with the latent space of language features through general multi-modal tasks, in order to make full use of the abilities of LLMs.

In this paper, we explore a fine-grained alignment approach that uses object trajectories to align the modalities across the spatial and temporal dimensions simultaneously. We therefore propose a novel LVidLM based on trajectory-guided Pixel-Temporal Alignment, dubbed PiTe, which exhibits promising properties for practical application.

To achieve fine-grained video-language alignment, we curate a multi-modal pre-training dataset, PiTe-143k. Built with our automatic annotation pipeline, the dataset provides pixel-level moving trajectories for every individual object that both appears in the video and is mentioned in the caption.

PiTe also demonstrates astounding capabilities on a wide range of video-related multi-modal tasks, beating state-of-the-art methods by a large margin.

4. Text to Image Generation

4.1. Open-MAGVIT2: An Open-Source Project Toward Democratizing Auto-regressive Visual Generation

We present Open-MAGVIT2, a family of auto-regressive image generation models ranging from 300M to 1.5B parameters. The Open-MAGVIT2 project produces an open-source replication of Google’s MAGVIT-v2 tokenizer, a tokenizer with a super-large codebook (i.e., 2^18 codes), and achieves state-of-the-art reconstruction performance (1.17 rFID) on ImageNet 256×256.

Furthermore, we explore its application in plain auto-regressive models and validate its scalability properties. To assist auto-regressive models in predicting with a super-large vocabulary, we factorize it into two sub-vocabularies of different sizes by asymmetric token factorization and further introduce “next sub-token prediction” to enhance sub-token interaction for better generation quality. We release all models and code to foster innovation and creativity in the field of auto-regressive visual generation.
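The factorization idea can be illustrated with plain integer arithmetic: one id from the 2^18-entry codebook is split into two smaller ids, so the model predicts over two small vocabularies instead of one huge one. This is a minimal sketch only. The 6-bit/12-bit split and the function names are assumptions for illustration; the summary above says only that the two sub-vocabularies differ in size.

```python
CODEBOOK_BITS = 18  # 2**18 codes, as stated above
LOW_BITS = 12       # assumed split: 6 high bits + 12 low bits (hypothetical)
HIGH_BITS = CODEBOOK_BITS - LOW_BITS

def factorize(token_id):
    """Split one token id into (high, low) sub-token ids of unequal vocab size."""
    return divmod(token_id, 1 << LOW_BITS)

def merge(high, low):
    """Recombine two sub-token ids into the original token id."""
    return (high << LOW_BITS) | low

token = 200_000
high, low = factorize(token)  # (48, 3392)
restored = merge(high, low)   # 200000

# Output sizes the model must predict over, before and after factorization.
full_vocab = 1 << CODEBOOK_BITS                 # 262144
sub_vocabs = (1 << HIGH_BITS, 1 << LOW_BITS)    # (64, 4096)
```

With this assumed split, the two prediction heads cover 64 and 4,096 classes rather than a single head over 262,144 classes, and the mapping is lossless because `merge` inverts `factorize`.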

4.2. IFAdapter: Instance Feature Control for Grounded Text-to-Image Generation

While Text-to-Image (T2I) diffusion models excel at generating visually appealing images of individual instances, they struggle to accurately position multiple instances and control the generation of their features.

The Layout-to-Image (L2I) task was introduced to address the positioning challenges by incorporating bounding boxes as spatial control signals, but it still falls short in generating precise instance features. In response, we propose the Instance Feature Generation (IFG) task, which aims to ensure both positional accuracy and feature fidelity in generated instances. To address the IFG task, we introduce the Instance Feature Adapter (IFAdapter).

The IFAdapter enhances feature depiction by incorporating additional appearance tokens and utilizing an Instance Semantic Map to align instance-level features with spatial locations. The IFAdapter guides the diffusion process as a plug-and-play module, making it adaptable to various community models.
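As a rough picture of what "bounding boxes as spatial control signals" means, the boxes can be rasterized into a grid that records which instance owns each spatial location. The sketch below shows only that generic layout step. It is not the IFAdapter's Instance Semantic Map, and the function name, the normalized box format, and the overwrite rule for overlapping boxes are assumptions for illustration.

```python
def rasterize_boxes(boxes, height, width):
    """Return a height x width grid of instance indices (-1 means background).

    `boxes` holds (x0, y0, x1, y1) tuples in normalized [0, 1] coordinates.
    Where boxes overlap, the later box overwrites the earlier one
    (a simplifying assumption).
    """
    grid = [[-1] * width for _ in range(height)]
    for idx, (x0, y0, x1, y1) in enumerate(boxes):
        c0, c1 = int(x0 * width), int(x1 * width)
        r0, r1 = int(y0 * height), int(y1 * height)
        for r in range(r0, r1):
            for c in range(c0, c1):
                grid[r][c] = idx
    return grid

# Two overlapping instances on an 8x8 latent-sized grid (sizes are arbitrary).
layout = rasterize_boxes(
    [(0.0, 0.0, 0.5, 0.5), (0.25, 0.25, 1.0, 1.0)],
    height=8,
    width=8,
)
```

A grid like this tells a model where each instance should go, but it carries no appearance information, which is the gap the instance-level feature control described above is meant to close.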

For evaluation, we contribute an IFG benchmark and develop a verification pipeline to objectively compare models’ abilities to generate instances with accurate positioning and features. Experimental results demonstrate that IFAdapter outperforms other models in both quantitative and qualitative evaluations.

4.3. TextBoost: Towards One-Shot Personalization of Text-to-Image Models via Fine-tuning Text Encoder

Recent breakthroughs in text-to-image models have opened up promising research avenues in personalized image generation, enabling users to create diverse images of a specific subject using natural language prompts. However, existing methods often suffer from performance degradation when given only a single reference image.

They tend to overfit the input, producing highly similar outputs regardless of the text prompt. This paper addresses the challenge of one-shot personalization by mitigating overfitting, enabling the creation of controllable images through text prompts.

Specifically, we propose a selective fine-tuning strategy that focuses on the text encoder. Furthermore, we introduce three key techniques to enhance personalization performance:

Augmentation tokens to encourage feature disentanglement and alleviate overfitting.

A knowledge-preservation loss to reduce language drift and promote generalizability across diverse prompts.

SNR-weighted sampling for efficient training.

Extensive experiments demonstrate that our approach efficiently generates high-quality, diverse images using only a single reference image while significantly reducing memory and storage requirements.
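The SNR-weighted sampling mentioned above can be pictured as drawing training timesteps non-uniformly, with probabilities tied to each step's signal-to-noise ratio. The sketch below assumes a DDPM-style linear beta schedule, for which SNR(t) = alpha_bar_t / (1 - alpha_bar_t). The schedule constants, the function names, and in particular the weighting 1 / (1 + SNR) that favors noisier steps are assumptions for illustration; the weighting TextBoost actually uses may differ.

```python
import random

def snr_per_timestep(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """SNR(t) = alpha_bar_t / (1 - alpha_bar_t) for a linear beta schedule."""
    snrs, alpha_bar = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        alpha_bar *= 1.0 - beta  # cumulative product of (1 - beta)
        snrs.append(alpha_bar / (1.0 - alpha_bar))
    return snrs

def sample_timesteps(snrs, batch_size, rng):
    """Draw timesteps with probability proportional to an SNR-based weight."""
    # Assumed weighting: 1 / (1 + SNR) equals 1 - alpha_bar, so noisier
    # (low-SNR) timesteps are drawn more often than clean ones.
    weights = [1.0 / (1.0 + s) for s in snrs]
    return rng.choices(range(len(snrs)), weights=weights, k=batch_size)

snrs = snr_per_timestep()
timesteps = sample_timesteps(snrs, batch_size=8, rng=random.Random(0))
```

Compared with uniform sampling, a scheme like this spends more of a small training budget on the timesteps the weighting considers most useful, which is one way a sampling rule can make training more efficient.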

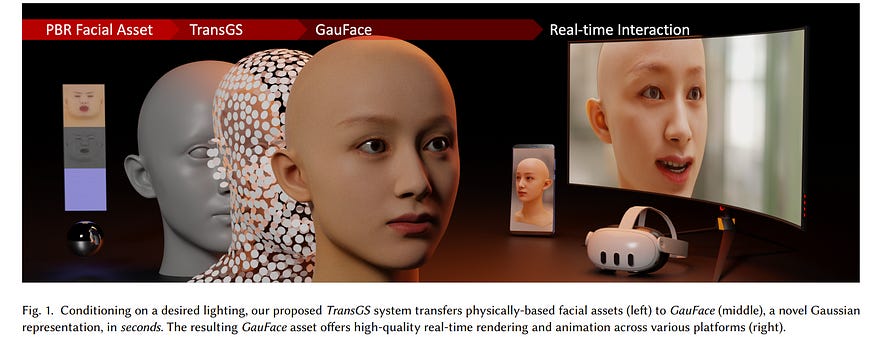

4.4. Instant Facial Gaussians Translator for Relightable and Interactable Facial Rendering

We propose GauFace, a novel Gaussian Splatting representation, tailored for efficient animation and rendering of physically-based facial assets. Leveraging strong geometric priors and constrained optimization, GauFace ensures a neat and structured Gaussian representation, delivering high fidelity and real-time facial interaction of 30fps@1440p on a Snapdragon 8 Gen 2 mobile platform.

Then, we introduce TransGS, a diffusion transformer that instantly translates physically-based facial assets into the corresponding GauFace representations. Specifically, we adopt a patch-based pipeline to handle the vast number of Gaussians effectively.

We also introduce a novel pixel-aligned sampling scheme with UV positional encoding to ensure the throughput and rendering quality of GauFace assets generated by our TransGS. Once trained, TransGS can instantly translate facial assets with lighting conditions into GauFace representations. With its rich conditioning modalities, it also enables editing and animation capabilities reminiscent of traditional CG pipelines.

We conduct extensive evaluations and user studies against traditional offline and online renderers, as well as recent neural rendering methods, and the results demonstrate the superior performance of our approach for facial asset rendering. We also showcase diverse immersive applications of facial assets using our TransGS approach and GauFace representation across various platforms such as PCs, phones, and even VR headsets.

Are you looking to start a career in data science and AI but do not know how? I offer data science mentoring sessions and long-term career mentoring:

Mentoring sessions: https://lnkd.in/dXeg3KPW

Long-term mentoring: https://lnkd.in/dtdUYBrM