I Gave the Same Task to Codex, Jules, and Devin. Who Is Better?

Testing the Top Coding Agents to See Which One Is Better

Recently, I gave the same real-world coding task to three of the most talked-about AI developer tools: OpenAI Codex, Google Jules, and Cognition’s Devin. The idea was simple: give them a moderately annoying migration task and see how they handle it. The results were interesting, sometimes surprising, and honestly a bit telling about where each of these tools is headed.

Here’s the prompt I gave to all three:

“Migrate the site from Next.js pages router to app router. In the process, update all dependencies to the latest versions. Ensure all checks pass, including compilation, linting, and a successful next build.”

To make things a little more interesting, I picked an old and slightly crusty Next.js repo: outdated dependencies, a bit of legacy code, and a structure not fully compatible with the newer app router. In other words, a very real-world task.
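To give a sense of what the migration involves at the file level, the renames can be sketched as a small path-mapping function. This is a hypothetical helper, not something any of the agents produced. It assumes pages live in a top-level pages/ directory and covers only common conventions: index routes, dynamic segments, API routes becoming route handlers, _app and _document merging into the root layout, and 404 becoming not-found. Moving files is the easy part; most of the real work is rewriting their contents, for example replacing getServerSideProps or getStaticProps with data fetching in server components.

```typescript
// Hypothetical helper: maps a Next.js pages-router file path to the
// app-router path it would typically move to. Only common conventions are
// handled; the code inside each file still has to be rewritten.

const PAGE_EXTENSIONS = [".tsx", ".ts", ".jsx", ".js"];

function splitExtension(file: string): { base: string; ext: string } {
  // Check longer extensions first so ".tsx" is not mistaken for ".ts".
  const ext = PAGE_EXTENSIONS.find((e) => file.endsWith(e));
  if (!ext) {
    throw new Error(`Not a page file: ${file}`);
  }
  return { base: file.slice(0, -ext.length), ext };
}

function toAppRouterPath(pagesPath: string): string {
  if (!pagesPath.startsWith("pages/")) {
    throw new Error(`Expected a path under pages/: ${pagesPath}`);
  }
  const { base, ext } = splitExtension(pagesPath.slice("pages/".length));
  // Route handlers contain no JSX, so they use .ts/.js.
  const isTs = ext === ".ts" || ext === ".tsx";
  const handlerExt = isTs ? ".ts" : ".js";

  // _app and _document are both replaced by a single root layout.
  if (base === "_app" || base === "_document") {
    return `app/layout${ext}`;
  }
  // The custom 404 page becomes the not-found file.
  if (base === "404") {
    return `app/not-found${ext}`;
  }
  // API routes become route handlers: pages/api/x.ts -> app/api/x/route.ts
  if (base.startsWith("api/")) {
    const route = base.replace(/\/index$/, "");
    return `app/${route}/route${handlerExt}`;
  }
  // Regular pages: pages/index -> app/page, pages/blog/index -> app/blog/page,
  // pages/blog/[slug] -> app/blog/[slug]/page
  const segment = base === "index" ? "" : base.replace(/\/index$/, "");
  return segment === "" ? `app/page${ext}` : `app/${segment}/page${ext}`;
}

// Usage: print where a few typical files would end up.
for (const f of [
  "pages/index.tsx",
  "pages/blog/[slug].tsx",
  "pages/api/hello.ts",
  "pages/_app.tsx",
]) {
  console.log(`${f} -> ${toAppRouterPath(f)}`);
}
// pages/index.tsx -> app/page.tsx
// pages/blog/[slug].tsx -> app/blog/[slug]/page.tsx
// pages/api/hello.ts -> app/api/hello/route.ts
// pages/_app.tsx -> app/layout.tsx
```

Note that two source files (_app and _document) collapse into one layout, which is one reason this migration cannot be done as a purely mechanical rename.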

1. OpenAI Codex

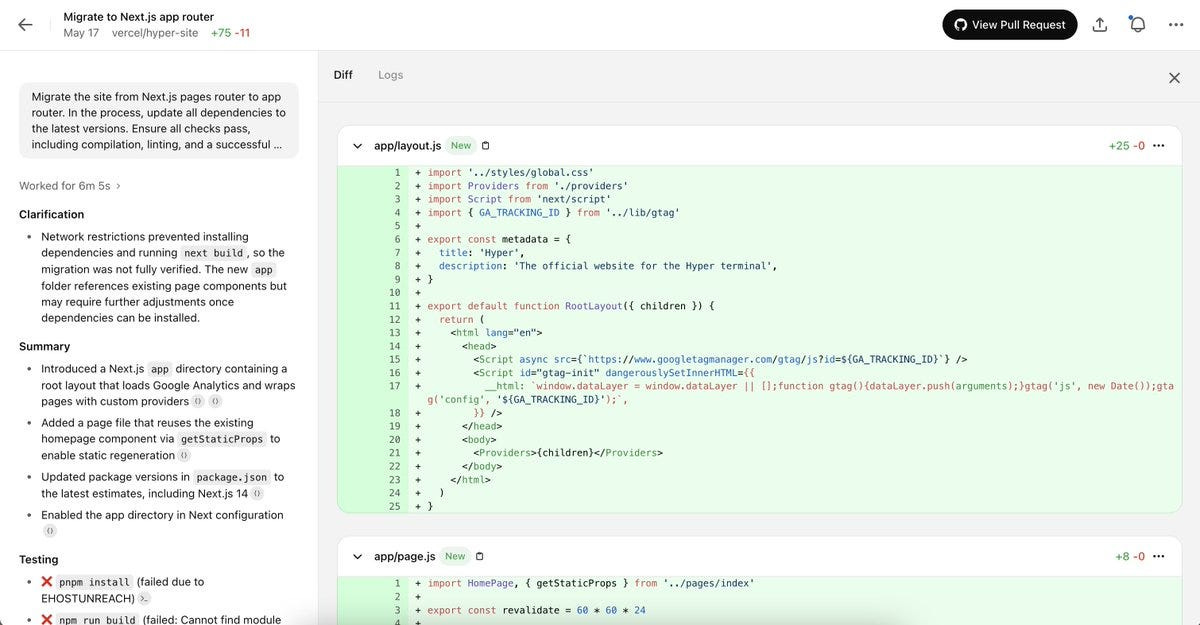

We start with Codex. First impressions? The best-looking UI of the bunch. The Git diff viewer is slick, and having a mobile app with live activities is genuinely impressive. As a product, it feels very polished.

What worked:

The UI/UX is clean, intuitive, and responsive.

It created a PR with mostly correct code.

I could interact with it via chat and ask it to continue the migration after it missed a few pages.

What didn’t:

No network access. This is a massive blocker. Codex couldn’t update dependencies, so I couldn’t even run next build to verify the changes.

No two-way GitHub sync. You can’t push changes back into the repo or respond to PR comments.

It only migrated one page initially. I had to go back and explicitly ask it to handle the rest.

Final Thoughts:

Codex has a solid foundation and an amazing UI, but its limitations (especially the lack of network access) make it feel a bit too early for serious engineering workflows.

2. Google Jules


Subscribe to To Data & Beyond to keep reading this post and get 7 days of free access to the full post archives.