Hands-On LangSmith Course [3/7]: Playground & Prompts Hub in LangSmith

Managing Prompts at Scale with Versioning and Collaboration

As your agent evolves, hardcoding prompts directly in your code becomes problematic. What happens when you want to experiment with different prompt variations? Or when multiple team members need to collaborate on prompt engineering? This is where LangSmith’s Prompt Hub and Playground shine.

The Playground provides an interactive environment to test and refine prompts in real-time, while the Prompt Hub allows you to version control your prompts separately from your codebase. You can experiment with different models, push prompts to LangSmith, iterate on them through the web interface, and pull specific versions into your application.

This separation of concerns means prompt engineers can refine instructions without touching production code, while developers can update application logic without worrying about breaking carefully crafted prompts.

Table of Contents:

Introduction to Prompt Hub & Playground in LangSmith

Prompt Versioning

Pulling Prompts from LangSmith

Prompt Canvas: Auto Optimize your Prompts

If you have ever tried to build an application on top of a Large Language Model (LLM), then you know the struggle. When things go wrong, it’s rarely a simple bug.

Instead, you’re left tracing a single user interaction through a complex run tree, or sifting through a sea of unstructured conversations, to understand why the model failed to reason correctly. Debugging its logic and figuring out how to consistently improve its performance can often feel more like an unpredictable art than a repeatable science.

This is where LangSmith comes in. It is designed to support the entire LLM development lifecycle, and working with it surfaces some fundamental principles that challenge common assumptions.

LangSmith offers essential capabilities such as observability, which includes features like automated tracing and a run tree view for debugging, identifying expensive steps, and analyzing latency bottlenecks.

Furthermore, the platform supports evaluation and experimentation, allowing users to define custom evaluators, conduct systematic testing with curated datasets, and compare experimental results side by side to assess the trade-offs of architectural changes.

This series will cover the following topics:

Playground & Prompts in LangSmith (You are here!)

Datasets & Evaluations in LangSmith

Annotation Queues with LangSmith

Automations & Online Evaluation with LangSmith

Dashboards in LangSmith


1. Introduction to Prompt Hub & Playground in LangSmith

LangSmith Prompt Hub and Playground work together to streamline prompt engineering workflows. The Hub is a centralized repository for versioning and sharing prompts across your organization, where you can push prompts with specific configurations (including model settings), track every change with commit hashes, and pull them into your code using simple API calls.

The Playground serves as an interactive testing environment where you can experiment with these prompts, compare different models side-by-side, adjust parameters in real-time, and see token usage and costs instantly. Once you’re satisfied with a prompt in the Playground, you can push it directly to the Hub, separating prompt engineering from code deployment and allowing teams to iterate on prompts without touching production code. Together, they transform prompt development from scattered trial-and-error into a systematic, observable, and collaborative process.
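To make the push and pull round trip concrete, here is a minimal sketch using the `langsmith` Python SDK's `Client.push_prompt` and `Client.pull_prompt`. The prompt name `research-assistant` and the message wording are hypothetical placeholders, and the sketch assumes `langsmith` and `langchain-core` are installed and that `LANGSMITH_API_KEY` is set in your environment.

```python
# Hypothetical prompt name and wording, used only for illustration.
PROMPT_NAME = "research-assistant"
MESSAGES = [
    ("system", "You are a helpful research assistant."),
    ("user", "{question}"),
]


def push_and_pull(name: str = PROMPT_NAME):
    """Push the prompt to the Hub, then pull the latest version back."""
    # Imported lazily so this file loads even without the packages installed.
    from langchain_core.prompts import ChatPromptTemplate
    from langsmith import Client

    client = Client()  # picks up LANGSMITH_API_KEY from the environment
    prompt = ChatPromptTemplate.from_messages(MESSAGES)
    url = client.push_prompt(name, object=prompt)  # returns the prompt's URL
    latest = client.pull_prompt(name)  # no commit given, so latest version
    return url, latest
```

Calling `push_and_pull()` should return the prompt's URL in LangSmith together with the pulled template, which you can then fill in with `latest.invoke({"question": "..."})`.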

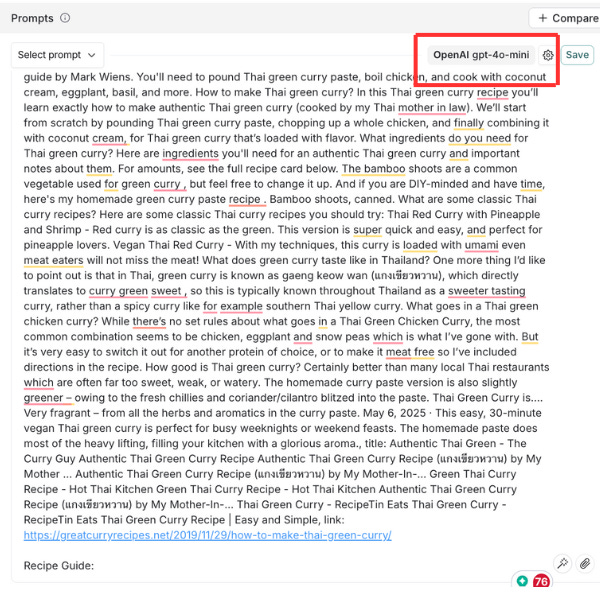

When you first open the Playground, you will see this window. You can edit the prompt and see how the change is reflected in the output. You can also test LLMs from different providers and compare their outputs: choose a model using the button shown in the figure below, then run the request.

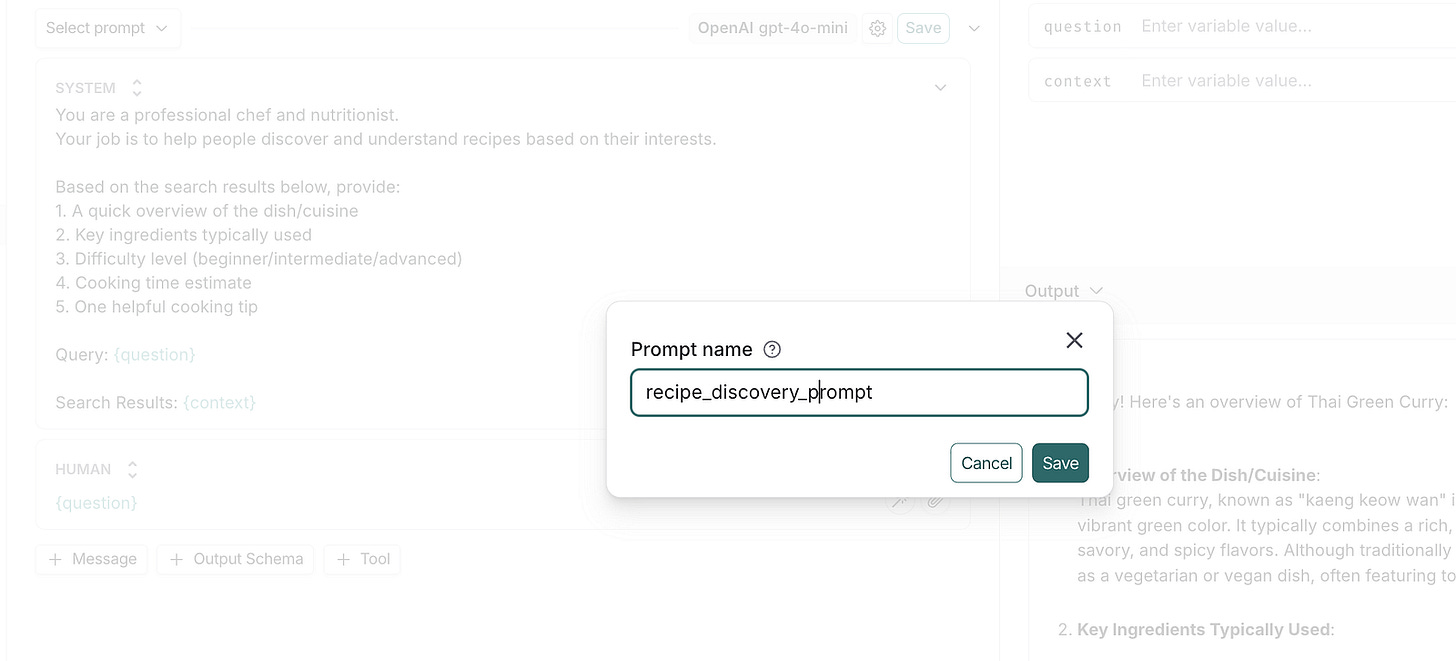

Here, we will take the query and the search results out of the prompt text and pass them in as input variables instead, leaving only the system prompt. This keeps the prompt tidy and lets us reuse it from our code later.

We will then save this prompt so we can use it later.
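If it helps to picture what "passing the query and search results as inputs" means, here is a small plain-Python sketch. The prompt wording and the variable names `query` and `search_results` are assumptions made for illustration (the exact text lives in the screenshots), and the `{variable}` placeholders are filled with ordinary `str.format` rather than any LangSmith API.

```python
# Assumed wording: the real system prompt is only shown in the screenshots.
SYSTEM_PROMPT = (
    "You are a helpful assistant. Answer the question using only the "
    "provided search results."
)

# Placeholders in curly braces are the prompt's input variables.
USER_TEMPLATE = "Question: {query}\n\nSearch results:\n{search_results}"


def render_messages(query: str, search_results: list[str]) -> list[dict]:
    """Fill the input variables and return chat-style messages."""
    formatted_results = "\n".join(f"- {result}" for result in search_results)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {
            "role": "user",
            "content": USER_TEMPLATE.format(
                query=query, search_results=formatted_results
            ),
        },
    ]


messages = render_messages(
    "What is LangSmith?",
    ["LangSmith is a platform for LLM observability and evaluation."],
)
```

The fixed instructions stay in one place, while the parts that change on every request arrive as inputs, which is what lets the same saved prompt be reused from code.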

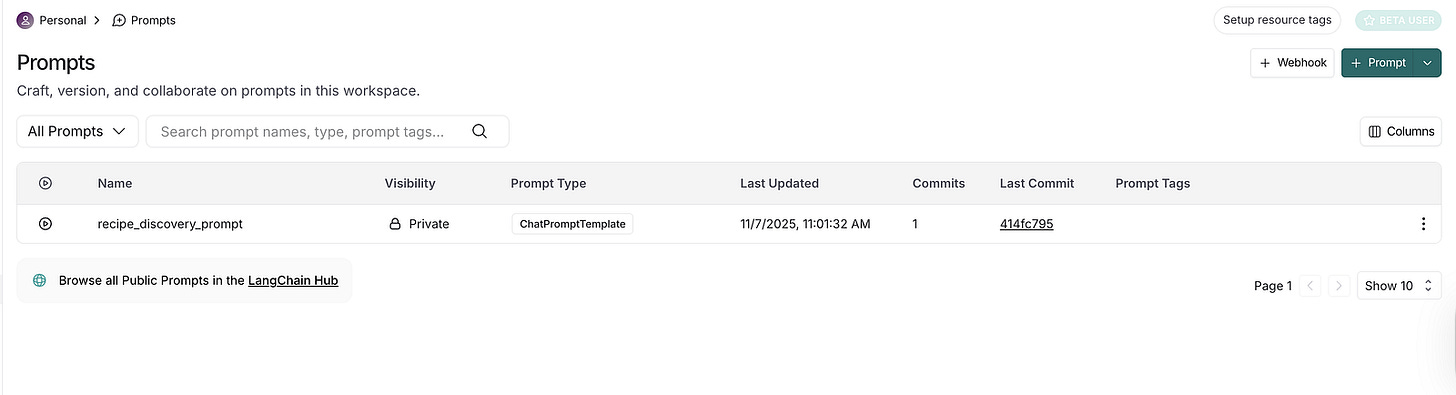

To view the saved prompt, go to the Prompt Hub, where all your prompts are listed.

Selecting the prompt shows its template and the details of the LLM it uses. To change it, edit it in the Playground and commit the changes, as we will see in the next section.

2. Prompt Versioning

One of LangSmith’s most powerful features is automatic prompt versioning. Every time you push a prompt to the Hub, LangSmith generates a unique commit hash that captures that exact version of your prompt, its variables, and any associated model configurations.

This means you can experiment freely, knowing that you can always roll back to a previous version if a change degrades performance. When debugging production issues, LangSmith traces show you exactly which prompt version was used for each execution, making it trivial to correlate output quality with specific prompt changes.
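As a rough sketch of how pinning and rolling back can look in code, the `langsmith` SDK accepts a prompt identifier of the form `name:commit_hash` in `Client.pull_prompt`. The helper names, the prompt name, and the hash `12344e88` below are hypothetical; the sketch assumes `langsmith` and `langchain-core` are installed and `LANGSMITH_API_KEY` is set.

```python
from typing import Optional


def prompt_ref(name: str, commit: Optional[str] = None) -> str:
    """Build a Hub identifier: 'name' for latest, 'name:commit' to pin."""
    return f"{name}:{commit}" if commit else name


def pull_pinned(name: str, commit: Optional[str] = None):
    """Pull a specific prompt version (or the latest if no commit is given)."""
    # Imported lazily so the helper above works without the SDK installed.
    from langsmith import Client

    return Client().pull_prompt(prompt_ref(name, commit))


# Hypothetical values: rolling back means pointing at an older commit hash.
PINNED = prompt_ref("research-assistant", "12344e88")
LATEST = prompt_ref("research-assistant")
```

Keeping the commit hash in configuration rather than in code is one way to make a rollback a one-line change: swap the hash and the application pulls the earlier version on its next start.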
