Google’s new open-source model family, Gemma 3, is quickly gaining attention for its remarkable performance, rivaling that of some of the latest proprietary models.

With advanced multimodal features, improved reasoning abilities, and support for over 140 languages, Gemma 3 stands out as a versatile tool for various AI applications.

In this tutorial, we will dive into the capabilities of Gemma 3 and demonstrate how to fine-tune it step by step using a financial reasoning question-answering dataset.

The goal of this fine-tuning is to improve the model's ability to understand, reason about, and answer complex financial questions with contextually relevant and precise answers.

Table of Contents:

Setting Up the Working Environment

Loading the Model and Tokenizer

Test the Model with Zero-Shot Inference

Processing the Dataset for Fine-Tuning

Setting up the Model Training Pipeline

Model Fine-Tuning with LoRA

Model Inference After Fine-Tuning

Saving the Model and Tokenizer to Hugging Face


1. Setting Up the Working Environment

We will start by installing and updating the essential libraries needed to fine-tune and run inference with Google's Gemma 3 model using Hugging Face tools. This ensures compatibility by updating transformers, datasets, accelerate, peft, trl, and bitsandbytes to their latest versions, which are required for efficient training and memory-optimized model loading.

Finally, we will install the v4.49.0-Gemma-3 preview release of transformers, which includes early support for Gemma 3, so the environment is ready for both fine-tuning with LoRA and generating responses from the model.

```python
%%capture
!pip install -U datasets
!pip install -U transformers
!pip install -U accelerate
!pip install -U peft
!pip install -U trl
!pip install -U bitsandbytes
!pip install git+https://github.com/huggingface/transformers@v4.49.0-Gemma-3
```

Next, we authenticate with the Hugging Face Hub to access pre-trained models and datasets. We retrieve the authentication token from Kaggle Secrets and log in using the huggingface_hub package:

```python
from huggingface_hub import login
from kaggle_secrets import UserSecretsClient

# Read the Hugging Face token stored as a Kaggle secret and log in with it
user_secrets = UserSecretsClient()
hf_token = user_secrets.get_secret("HUGGINGFACE_TOKEN")
login(hf_token)
```

This step gives the notebook access to Hugging Face resources, so we can download gated models and later push the fine-tuned model to the Hub. Now we are ready to download the model and tokenizer and try it with zero-shot inference.
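Because this tutorial depends on a fairly recent transformers build, it can help to confirm what actually got installed (after restarting the kernel) before loading the model. A minimal sketch using only the standard library's importlib.metadata; the helper name installed_versions is hypothetical, and it only reports versions, it does not check them against any minimum.

```python
from importlib.metadata import PackageNotFoundError, version

# Libraries installed in the cell above (PyPI distribution names).
PACKAGES = ["transformers", "datasets", "accelerate", "peft", "trl", "bitsandbytes"]


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return versions


for name, ver in installed_versions(PACKAGES).items():
    print(f"{name:>14}: {ver or 'NOT INSTALLED'}")
```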

2. Loading the Model and Tokenizer

To begin working with the Gemma 3 model for instruction tuning and reasoning tasks, we will first load the model and tokenizer. We're using the google/gemma-3-4b-it variant, the instruction-tuned 4B-parameter version of Gemma 3, which is suitable for tasks like question answering or fine-tuning for multi-step reasoning.

The model is instantiated using Gemma3ForConditionalGeneration.from_pretrained, specifying torch_dtype=torch.bfloat16 to optimize memory usage and inference efficiency without a significant loss in numerical precision.
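To see why bfloat16 matters at this size, a back-of-the-envelope estimate helps: each bfloat16 parameter takes 2 bytes versus 4 for float32, so the weights alone need roughly half the memory. This sketch assumes a round 4 billion parameters purely for illustration (the real checkpoint count differs somewhat) and ignores activations, the KV cache, and optimizer state.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, the KV cache, optimizer state and framework overhead.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}


def weight_memory_gb(num_params, dtype):
    """Approximate memory (in GB, 1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Assumption: a round 4 billion parameters, for illustration only.
for dtype in ("float32", "bfloat16"):
    print(f"{dtype:>8}: ~{weight_memory_gb(4e9, dtype):.0f} GB")
```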

Setting device_map="auto" lets the model's layers be placed automatically across the available devices (GPUs first, offloading to CPU only if they do not fit), which is essential for handling larger models like this one.

The attn_implementation="eager" argument selects the standard, non-fused PyTorch attention implementation instead of SDPA or FlashAttention; Hugging Face recommends eager attention when training Gemma models.
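"Eager" simply means attention is computed with ordinary tensor operations (matrix multiply, softmax, matrix multiply) rather than a fused kernel. As a conceptual illustration only, here is scaled dot-product attention written out step by step in plain Python; it is not the transformers implementation, which additionally handles attention masks, multiple heads, and batching.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def eager_attention(Q, K, V):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, computed step by step.

    Q: n_q x d, K: n_k x d, V: n_k x d_v (plain lists of lists). No masking.
    """
    d = len(Q[0])
    out = []
    for q in Q:
        # 1) raw scores: dot product of this query with every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in K]
        # 2) normalize the scores into attention weights that sum to 1
        weights = softmax(scores)
        # 3) weighted sum of the value vectors
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


# Two identical keys get equal weight, so the output is the mean of the values.
print(eager_attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[1.0, 2.0], [3.0, 4.0]]))
```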

Finally, the .eval() call puts the model in evaluation mode, which disables dropout and other training-specific behavior. The matching tokenizer is loaded with AutoTokenizer.from_pretrained so that input text is tokenized with the model's vocabulary and expected format.
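What .eval() changes is easiest to see with dropout. Below is a small, hypothetical plain-Python sketch of the inverted-dropout rule that PyTorch's nn.Dropout follows in training mode versus evaluation mode; it is an illustration, not PyTorch's code. Note that .eval() does not turn off gradient tracking; that is the job of torch.no_grad() or torch.inference_mode().

```python
import random


def dropout(xs, p, training, rng=None):
    """Inverted dropout, the same convention PyTorch's nn.Dropout uses.

    In training, each element is zeroed with probability p and survivors are
    scaled by 1 / (1 - p); in eval mode the input passes through unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(xs)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else x * scale for x in xs]


x = [1.0, 2.0, 3.0, 4.0]
print(dropout(x, 0.5, training=False))                       # eval: unchanged
print(dropout(x, 0.5, training=True, rng=random.Random(0)))  # train: random zeros, survivors doubled
```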

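```python
import torch
from transformers import AutoTokenizer, Gemma3ForConditionalGeneration

# Kaggle copy of google/gemma-3-4b-it (outside Kaggle, the Hub ID should work once the license is accepted)
GEMMA_PATH = "/kaggle/input/gemma-3/transformers/gemma-3-4b-it/1"

model = Gemma3ForConditionalGeneration.from_pretrained(
    GEMMA_PATH,
    torch_dtype=torch.bfloat16,   # half the memory of float32
    device_map="auto",            # place layers on the available GPU(s) automatically
    attn_implementation="eager",  # attention implementation recommended for Gemma training
).eval()

tokenizer = AutoTokenizer.from_pretrained(GEMMA_PATH)
```

Now that we have loaded the model, we will first test it without any fine-tuning.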

3. Test the Model with Zero-Shot Inference

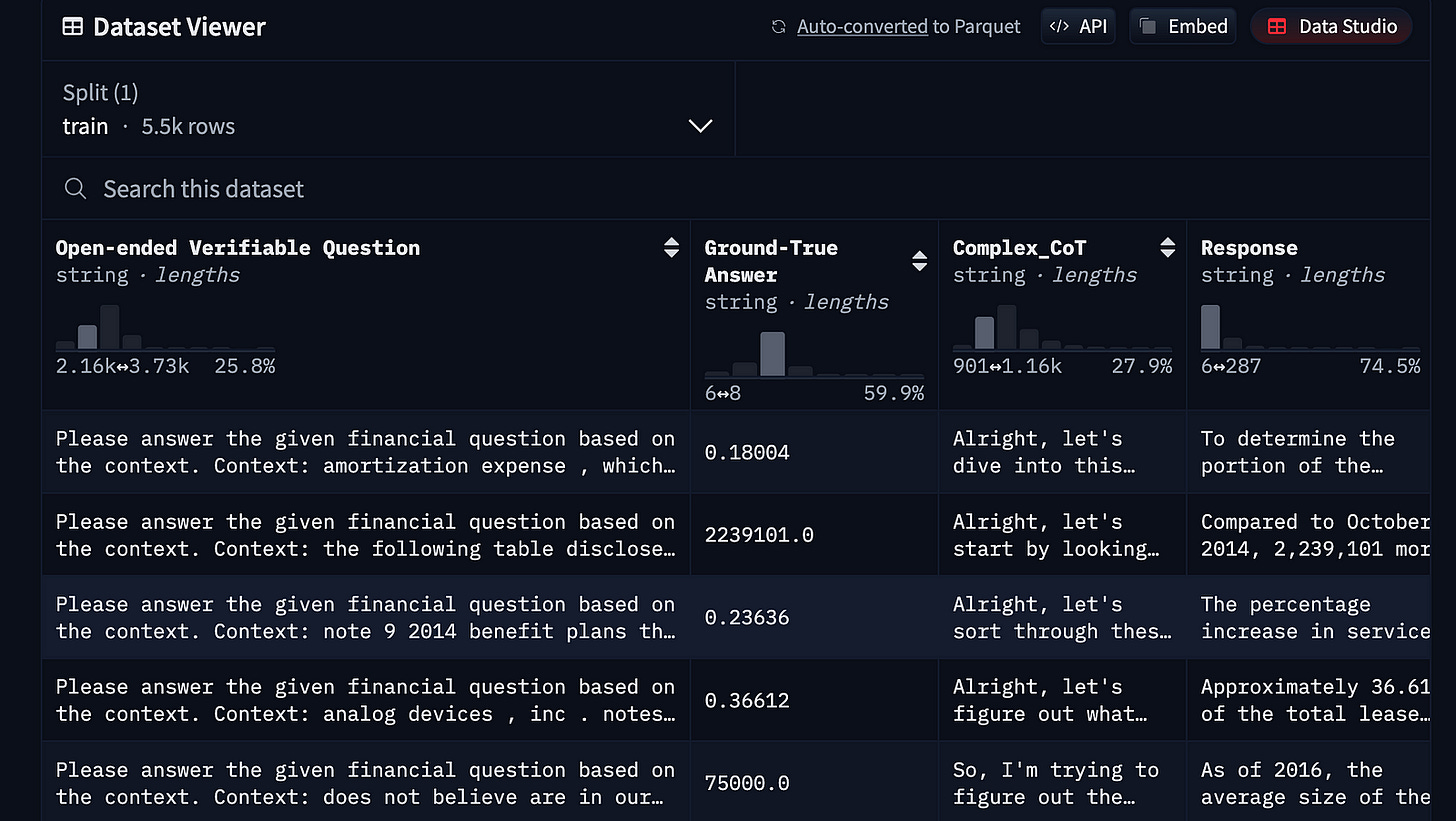

With the model and tokenizer ready, we can now run zero-shot inference on a financial reasoning question. First, we will load the TheFinAI/Fino1_Reasoning_Path_FinQA dataset, a financial reasoning dataset based on FinQA and enhanced with GPT-4o-generated reasoning paths for structured financial question answering.

```python
from datasets import load_dataset

# Load only the first 500 training examples to keep fine-tuning quick
dataset = load_dataset(
    "TheFinAI/Fino1_Reasoning_Path_FinQA",
    split="train[0:500]",
    trust_remote_code=True,
)
```

We will create the prompt style with two placeholders, one for the input question and the other for the generated answer:

```python
prompt_style = """Below is an instruction that describes a task, paired with an input that provides further context.
Write a response that appropriately completes the request.
Before answering, think carefully about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.

### Question:
{}

### Response:
<think>
{}
"""
```

Then, we apply the prompt style to the question, convert it into tokens, and pass it to the model. After generating, we decode the tokens back into text and print only the part that follows "### Response:".

```python
question = dataset[0]["Open-ended Verifiable Question"]

# Fill in the question and leave the reasoning placeholder empty so the model continues after <think>
inputs = tokenizer(
    [prompt_style.format(question, "") + tokenizer.eos_token],
    return_tensors="pt",
).to("cuda")

outputs = model.generate(
    input_ids=inputs.input_ids,
    attention_mask=inputs.attention_mask,
    max_new_tokens=1200,
    eos_token_id=tokenizer.eos_token_id,
    use_cache=True,
)

response = tokenizer.batch_decode(outputs, skip_special_tokens=True)
# The decoded text includes the prompt, so keep only what follows "### Response:"
print(response[0].split("### Response:")[1])
```

```
<think>
The question asks for the portion of the estimated amortization expense that will be recognized in 2017.
The provided table shows the estimated amortization expense for intangible assets for the years 2017, 2018, 2019, 2020, 2021, and 2022 and thereafter.
The amortization expense for 2017 is $10,509.
</think>
$10,509
```

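The response is short, contains almost no reasoning, and misses what the question asks for: it reports the raw 2017 figure instead of 2017's share of the total estimated amortization expense.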

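Here is the ground truth answer from the dataset: the 2017 expense divided by the total, $10,509 / $58,370 (in thousands) ≈ 0.18004, or roughly 18% of the total estimated amortization expense.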
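The gap is easy to see by redoing the arithmetic from the context table in the question: the 2017 figure divided by the total across all years. The values below are copied from the table in the dataset example (in thousands of dollars); summing them also reproduces the table's reported total.

```python
# Estimated amortization expense from the context table (in thousands of dollars).
amortization = {
    "2017": 10509,
    "2018": 9346,
    "2019": 9240,
    "2020": 7201,
    "2021": 5318,
    "2022 and thereafter": 16756,
}

total = sum(amortization.values())  # matches the table's reported total of 58,370
portion_2017 = amortization["2017"] / total

print(total)                  # 58370
print(f"{portion_2017:.5f}")  # 0.18004
print(f"{portion_2017:.1%}")  # 18.0%
```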

We will compare this answer with the one the model gives after fine-tuning to judge whether fine-tuning actually improved it.
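Since the prompt asks the model to wrap its reasoning in <think>...</think>, a small helper can separate the reasoning from the final answer, which makes before/after comparisons less manual. split_reasoning is a hypothetical helper, not part of any library, and it assumes the model emits at most one closing </think> tag.

```python
def split_reasoning(text):
    """Split a generated response into (reasoning, answer) around a </think> tag.

    If no closing tag is present, the whole text is treated as reasoning and the
    answer is empty, which usually means generation was cut off.
    """
    before, sep, after = text.partition("</think>")
    if not sep:
        return text.replace("<think>", "").strip(), ""
    reasoning = before.replace("<think>", "").strip()
    return reasoning, after.strip()


sample = "<think>\nThe amortization expense for 2017 is $10,509.\n</think>\n$10,509"
reasoning, answer = split_reasoning(sample)
print(answer)  # $10,509
```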

4. Processing the Dataset for Fine-Tuning

When fine-tuning a language model for reasoning, formatting each training example as a structured reasoning response is an important step before training.

This section covers how we define a structured prompt format, preprocess dataset entries, and apply transformations before feeding data into the model.

To encourage the model to generate structured financial responses, we define a prompt template that includes an instruction, a financial question, and a structured reasoning process. The template follows this format:

```python
train_prompt_style = """
Below is an instruction that describes a task, paired with an input that provides further context.
Write a response that appropriately completes the request.
Before answering, think carefully about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.

### Question:
{}

### Response:
<think>
{}
</think>
{}
"""
```

This template structures the model's response by placing a chain of thought (CoT) inside <think></think> tags. The three {} placeholders are filled with the financial question, the reasoning process, and the final response.

Once the prompt format is defined, we create a function to transform dataset entries into structured prompts. The function extracts the question, reasoning (CoT), and response from the dataset and formats them using the template:

```python
def formatting_prompts_func(examples):
    inputs = examples["Open-ended Verifiable Question"]
    complex_cots = examples["Complex_CoT"]
    outputs = examples["Response"]
    texts = []
    for question, cot, response in zip(inputs, complex_cots, outputs):
        # Append the EOS token to the response if it's not already there
        if not response.endswith(tokenizer.eos_token):
            response += tokenizer.eos_token
        text = train_prompt_style.format(question, cot, response)
        texts.append(text)
    return {"text": texts}
```

The function loops through each entry, formats it with train_prompt_style, and appends the end-of-sequence token (tokenizer.eos_token) to the response. This teaches the model where a response ends during training.
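To check the EOS logic without loading the real tokenizer, the same formatting rule can be exercised on a toy batch. The sketch below re-implements it as a hypothetical format_batch function that takes the EOS string as a parameter instead of reading the global tokenizer, and uses a shortened template, so it runs in isolation and does not overwrite the notebook's tokenizer. The "<eos>" string matches the end of the formatted training example shown in this section.

```python
# Shortened stand-in for train_prompt_style: question, chain of thought, response.
TEMPLATE = "### Question:\n{}\n\n### Response:\n<think>\n{}\n</think>\n{}\n"


def format_batch(examples, template, eos_token):
    """Same logic as formatting_prompts_func, with the EOS string passed in explicitly."""
    texts = []
    for question, cot, response in zip(
        examples["Open-ended Verifiable Question"],
        examples["Complex_CoT"],
        examples["Response"],
    ):
        # Append EOS only once, so the model learns where an answer stops.
        if not response.endswith(eos_token):
            response += eos_token
        texts.append(template.format(question, cot, response))
    return {"text": texts}


toy_batch = {
    "Open-ended Verifiable Question": ["What is 2 + 2?", "What is 3 + 3?"],
    "Complex_CoT": ["Add the numbers.", "Add the numbers."],
    "Response": ["4", "6<eos>"],  # the second one already ends with EOS
}
result = format_batch(toy_batch, TEMPLATE, eos_token="<eos>")
print(result["text"][0])
```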

Now that we have the formatting function, we apply it to the 500-example subset loaded earlier. In the code below, we will:

Apply the formatting_prompts_func transformation to structure each sample.

Print the first formatted entry to verify the changes.

This ensures the dataset is properly structured for fine-tuning, so the model learns from well-defined financial reasoning patterns.

```python
dataset = dataset.map(formatting_prompts_func, batched=True)
dataset["text"][0]
```

```
"\nBelow is an instruction that describes a task, paired with an input that provides further context. \nWrite a response that appropriately completes the request. \nBefore answering, think carefully about the question and create a step-by-step chain of thoughts to ensure a logical and accurate response.\n\n### Question:\nPlease answer the given financial question based on the context.\nContext: amortization expense , which is included in selling , general and administrative expenses , was $ 13.0 million , $ 13.9 million and $ 8.5 million for the years ended december 31 , 2016 , 2015 and 2014 , respectively . the following is the estimated amortization expense for the company 2019s intangible assets as of december 31 , 2016 : ( in thousands ) .\n|2017|$ 10509|\n|2018|9346|\n|2019|9240|\n|2020|7201|\n|2021|5318|\n|2022 and thereafter|16756|\n|amortization expense of intangible assets|$ 58370|\nat december 31 , 2016 , 2015 and 2014 , the company determined that its goodwill and indefinite- lived intangible assets were not impaired . 5 . credit facility and other long term debt credit facility the company is party to a credit agreement that provides revolving commitments for up to $ 1.25 billion of borrowings , as well as term loan commitments , in each case maturing in january 2021 . as of december 31 , 2016 there was no outstanding balance under the revolving credit facility and $ 186.3 million of term loan borrowings remained outstanding . at the company 2019s request and the lender 2019s consent , revolving and or term loan borrowings may be increased by up to $ 300.0 million in aggregate , subject to certain conditions as set forth in the credit agreement , as amended . incremental borrowings are uncommitted and the availability thereof , will depend on market conditions at the time the company seeks to incur such borrowings . the borrowings under the revolving credit facility have maturities of less than one year . up to $ 50.0 million of the facility may be used for the issuance of letters of credit . there were $ 2.6 million of letters of credit outstanding as of december 31 , 2016 . the credit agreement contains negative covenants that , subject to significant exceptions , limit the ability of the company and its subsidiaries to , among other things , incur additional indebtedness , make restricted payments , pledge their assets as security , make investments , loans , advances , guarantees and acquisitions , undergo fundamental changes and enter into transactions with affiliates . the company is also required to maintain a ratio of consolidated ebitda , as defined in the credit agreement , to consolidated interest expense of not less than 3.50 to 1.00 and is not permitted to allow the ratio of consolidated total indebtedness to consolidated ebitda to be greater than 3.25 to 1.00 ( 201cconsolidated leverage ratio 201d ) . as of december 31 , 2016 , the company was in compliance with these ratios . in addition , the credit agreement contains events of default that are customary for a facility of this nature , and includes a cross default provision whereby an event of default under other material indebtedness , as defined in the credit agreement , will be considered an event of default under the credit agreement . borrowings under the credit agreement bear interest at a rate per annum equal to , at the company 2019s option , either ( a ) an alternate base rate , or ( b ) a rate based on the rates applicable for deposits in the interbank market for u.s . dollars or the applicable currency in which the loans are made ( 201cadjusted libor 201d ) , plus in each case an applicable margin . the applicable margin for loans will .\nQuestion: what portion of the estimated amortization expense will be recognized in 2017?\nAnswer:\n\n### Response:\n<think>\nAlright, let’s dive into this question about amortization expenses. First, I need to identify the table that illustrates the estimated amortization expenses from 2017 onwards. It looks like this table is super important in figuring out the expense for 2017. \n\nOkay, found it! The table clearly states that the estimated amortization expense for the year 2017 is $10,509, but it notes that this is in thousands. \n\nLet me think for a second. If the numbers are in thousands, that means I need to convert that $10,509 into actual dollars, which becomes $10,509,000. Easy enough, I just multiply by a thousand. \n\nI need to make sure this is consistent throughout. Let me double-check if there’s any other information that throws a wrench in this conversion… Nope, everything seems straightforward here. \n\nSo, what’s the conclusion? The amortization expense for 2017 is a clear-cut $10,509,000. Hmm, that makes sense based on the conversion from thousands of dollars, and it looks like there aren’t any surprise twists or exceptions here. \n\nBut hold up, let me consider the perspective of portions. The context refers to percentages or parts, and for that, I should be thinking about the total amortization expense. The whole amount listed is $58,370,000 for all the intangible assets. \n\nTo find out what part of the total this 2017 expense represents, I’ll calculate a ratio. So, I divide $10,509,000 by the grand total, $58,370,000. Crunching these numbers gives me around 0.18004.\n\nSo, in conclusion, the portion of the total estimated amortization expense that is specifically for 2017 is approximately 0.18004 of the total. I’m confident that’s correct since that ratio aligns perfectly with the expectation of finding a portion or part. \n\nPhew, that should do it!\n</think>\nTo determine the portion of the estimated amortization expense that will be recognized in 2017, we first examine the table provided. The estimated amortization expense for 2017 is $10,509,000 (noting that the figures are in thousands, hence $10,509 becomes $10,509,000). \n\nThe total estimated amortization expense for all the listed years is $58,370,000. To find out what portion of the total this represents for 2017, we take the 2017 expense and divide it by the total:\n\n\\[ \\text{Portion for 2017} = \\frac{\\text{2017 expense}}{\\text{Total expense}} = \\frac{10,509,000}{58,370,000} \\approx 0.18004 \\]\n\nTherefore, approximately 18.004% of the total estimated amortization expense will be recognized in 2017.<eos>\n"
```
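For readers unfamiliar with batched=True: Dataset.map then calls the function on slices of rows (1,000 by default), passing a dict that maps each column name to a list of values, and adds the returned keys as new columns. That is why formatting_prompts_func zips lists together. The sketch below is a simplified, hypothetical emulation in plain Python, not the datasets implementation (which also caches results and writes Arrow tables).

```python
def batched_map(columns, fn, batch_size=1000):
    """Rough emulation of Dataset.map(fn, batched=True) on a dict of columns.

    fn receives a dict {column_name: list_of_values} for one slice of rows and
    returns a dict of new columns, which are merged into the existing ones.
    """
    n_rows = len(next(iter(columns.values())))
    new_columns = {}
    for start in range(0, n_rows, batch_size):
        batch = {name: values[start:start + batch_size] for name, values in columns.items()}
        for name, values in fn(batch).items():
            new_columns.setdefault(name, []).extend(values)
    return {**columns, **new_columns}


table = {"Response": ["a", "b", "c"]}
out = batched_map(table, lambda b: {"text": [r.upper() for r in b["Response"]]}, batch_size=2)
print(out)  # {'Response': ['a', 'b', 'c'], 'text': ['A', 'B', 'C']}
```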

Finally, we create a data collator from the tokenizer and pass it to the trainer later, since recent versions of TRL's SFTTrainer no longer accept a tokenizer argument (it has been replaced by processing_class).

```python
from transformers import DataCollatorForLanguageModeling

data_collator = DataCollatorForLanguageModeling(
    tokenizer=tokenizer,
    mlm=False,  # we're doing causal LM, not masked LM
)
```
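With mlm=False, the collator pads each batch and copies input_ids into labels, setting padding positions to -100 so the loss ignores them; the model then shifts the labels internally to predict the next token. The sketch below is a simplified, hypothetical plain-Python version that assumes right padding and uses made-up token ids. One difference from the real collator: it masks padding by position, whereas DataCollatorForLanguageModeling masks every label equal to the pad token id, which matters only when pad and EOS share an id (Gemma's tokenizer uses separate <pad> and <eos> tokens).

```python
def causal_lm_collate(batch_ids, pad_token_id):
    """Simplified sketch of causal-LM collation (mlm=False).

    Right-pads each sequence to the batch maximum, builds an attention mask,
    and copies input_ids into labels with padding positions set to -100 so the
    loss ignores them.
    """
    max_len = max(len(ids) for ids in batch_ids)
    input_ids, attention_mask, labels = [], [], []
    for ids in batch_ids:
        n_pad = max_len - len(ids)
        input_ids.append(ids + [pad_token_id] * n_pad)
        attention_mask.append([1] * len(ids) + [0] * n_pad)
        labels.append(ids + [-100] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask, "labels": labels}


# Made-up token ids for illustration; 0 plays the role of the pad id.
batch = causal_lm_collate([[2, 10, 11, 1], [2, 12, 1]], pad_token_id=0)
print(batch["labels"])  # [[2, 10, 11, 1], [2, 12, 1, -100]]
```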
