Efficient Python for Data Scientists Course [6/14]: How to Use Caching to Speed Up Your Python Code & LLM Application

Boost Your Application Performance with Code Caching

A seamless user experience is crucial to the success of any user-facing application. Developers therefore work to minimize application latency, and slow data access is typically the main culprit.

By caching data, developers can drastically reduce these delays, resulting in faster load times and happier users. This principle applies to web scraping as well, where large-scale projects can see significant speed improvements.

But what exactly is caching, and how can you implement it? This article explores what caching is, why it helps, and how to use it to speed up your Python code and to make your LLM calls faster and cheaper.

Table of Contents:

What is a cache in programming?

Why is Caching Helpful?

Common Uses for Caching

Common Caching Strategies

Python caching using a manual decorator

Python caching using LRU cache decorator

Function Calls Timing Comparison

Use Caching to Speed up Your LLM Application

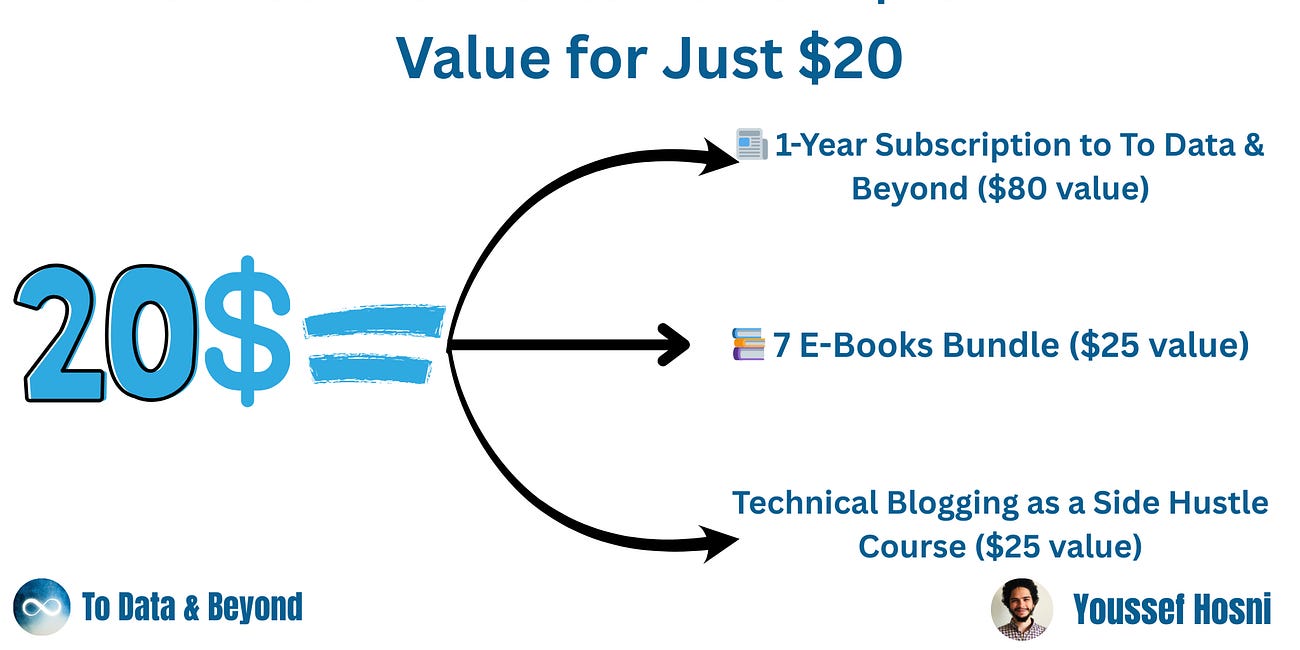


To Data & Beyond just reached a big milestone — 20,000 subscribers!

1. What is a cache in programming?

Caching is a technique for improving application performance. Technically, caching means storing data in a cache so that future requests for it can be served faster.

A cache is a fast (usually temporary) storage layer that holds frequently accessed data to reduce access times and improve system performance. For example, a CPU cache is a small but fast memory (usually SRAM) that sits between the CPU and the main memory (usually DRAM).

When the CPU needs data, it checks the cache first. If the data is there (a cache hit), it is read from the cache instead of from the slower main memory. This reduces access time and improves performance. If the data is not there (a cache miss), it is fetched from main memory and typically copied into the cache for subsequent accesses.
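Software caches follow the same hit/miss logic. Below is a minimal sketch in which a Python dictionary plays the role of the cache. It assumes a hypothetical `slow_read` function that stands in for main memory, a disk, or any other slow backing store.

```python
import time

# Hypothetical slow backing store (stands in for main memory, a disk, or a database)
BACKING_STORE = {"a": 1, "b": 2, "c": 3}

def slow_read(key):
    time.sleep(0.01)  # simulate a slow access
    return BACKING_STORE[key]

cache = {}
stats = {"hits": 0, "misses": 0}

def read(key):
    """Check the cache first; fall back to the slow store on a miss."""
    if key in cache:
        stats["hits"] += 1  # cache hit: fast path, no slow read
        return cache[key]
    stats["misses"] += 1  # cache miss: go to the slow store...
    value = slow_read(key)
    cache[key] = value  # ...and keep a copy for next time
    return value

for key in ["a", "b", "a", "a", "c", "b"]:
    read(key)

print(stats)  # {'hits': 3, 'misses': 3}
```

Only the three misses reach the slow store. This is also why caching reduces the load on the underlying data source.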

2. Why is Caching Helpful?

Caching can improve the performance of applications and systems in several ways. Here are the primary reasons to use caching:

2.1. Reduced Access Time

The primary goal of caching is to accelerate access to frequently used data. By storing this data in a temporary, easily accessible storage area, caching dramatically decreases access time. This leads to a notable improvement in the overall performance of applications and systems.

2.2. Reduced System Load

Caching also alleviates system load by minimizing the number of requests sent to external data sources, such as databases. By storing frequently accessed data in cache storage, applications can retrieve this data directly from the cache instead of repeatedly querying the data source. This reduces the load on the external data source and enhances system performance.

2.3. Improved User Experience

Caching ensures rapid data retrieval, enabling more seamless interactions with applications and systems. This is especially crucial for real-time systems and web applications, where users expect instant responses. Consequently, caching plays a vital role in enhancing the overall user experience.

3. Common Uses for Caching

Caching is a general concept with many prominent use cases. It pays off whenever data access follows a pattern: the same data is requested repeatedly, or you can predict which data will be requested next. In the second case, you can even prefetch that data into the cache ahead of time to improve application performance.
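To illustrate prefetching, here is a sketch for sequential access: whenever page `i` is read, the cache also loads page `i + 1`, betting that it will be requested next. The `load_page` function and the page contents are hypothetical stand-ins for a slow data source.

```python
# Hypothetical slow data source: each call counts as one expensive load
load_count = 0

def load_page(i):
    global load_count
    load_count += 1
    return f"page-{i}"

class PrefetchingCache:
    """Caches pages and prefetches page i + 1 whenever page i is read."""

    def __init__(self, loader):
        self.loader = loader
        self.store = {}
        self.hits = 0
        self.misses = 0

    def get(self, i):
        if i in self.store:
            self.hits += 1
        else:
            self.misses += 1
            self.store[i] = self.loader(i)
        # Prefetch the predicted next page if it is not cached yet
        if i + 1 not in self.store:
            self.store[i + 1] = self.loader(i + 1)
        return self.store[i]

cache = PrefetchingCache(load_page)
pages = [cache.get(i) for i in range(6)]  # sequential reads of pages 0..5
print(cache.hits, cache.misses, load_count)  # 5 1 7
```

Only the first read misses, but the cache performs one wasted load (page 6 is prefetched and never used). That is the usual prefetching trade-off when a prediction is wrong. A real system would typically prefetch in the background; this sketch does it synchronously for simplicity.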

Web Content: Frequently accessed web pages, images, and other static content are often cached to reduce load times and server requests.

Database Queries: Caching the results of common database queries can drastically reduce the load on the database and speed up application responses.

API Responses: External API call responses are cached to avoid repeated network requests and to provide faster data access.

Session Data: User session data is cached to quickly retrieve user-specific information without querying the database each time.

Machine Learning Models: Intermediate results and frequently used datasets are cached to speed up machine learning workflows and inference times.

Configuration Settings: Application configuration data is cached to avoid repeated reading from slower storage systems.
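As an example of caching database queries, here is a minimal sketch that puts `functools.lru_cache` in front of an in-memory SQLite database. The `users` table and the `get_user_name` function are hypothetical.

```python
import sqlite3
from functools import lru_cache

# In-memory database with a small example table (hypothetical schema)
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])

@lru_cache(maxsize=1024)
def get_user_name(user_id):
    """Runs the query only on a cache miss; repeated lookups come from the cache."""
    row = conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
    return row[0] if row else None

for user_id in [1, 2, 1, 1, 2]:
    get_user_name(user_id)

print(get_user_name.cache_info())
# CacheInfo(hits=3, misses=2, maxsize=1024, currsize=2)
```

Five lookups hit the database only twice. One caveat: `lru_cache` has no notion of staleness. If a row changes, the old value stays cached until you call `get_user_name.cache_clear()`. Strategies such as TTL expiration, described next, address this.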

4. Common Caching Strategies

Different caching strategies can be devised based on specific spatial or temporal data access patterns.

Cache-Aside (Lazy Loading): Data is loaded into the cache only when it is requested. If the data is not found in the cache (a cache miss), it is fetched from the source, stored in the cache, and then returned to the requester.

Write-Through: Every time data is written to the database, it is simultaneously written to the cache. This ensures that the cache always has the most up-to-date data but may introduce additional write latency.

Write-Back (Write-Behind): Data is written to the cache and acknowledged to the requester immediately, with the cache asynchronously writing the data to the database. This improves write performance but risks data loss if the cache fails before the write to the database completes.

Read-Through: The application interacts only with the cache, and the cache is responsible for loading data from the source if it is not already cached.

Time-to-Live (TTL): Cached data is assigned an expiration time, after which it is invalidated and removed from the cache. This helps to ensure that stale data is not used indefinitely.

Cache Eviction Policies: Strategies to determine which data to remove from the cache when it reaches its storage limit. Common policies include:

Last-In, First-Out (LIFO): The most recently added data is the first to be removed when the cache needs to free up space. This strategy assumes that the oldest data will most likely be required again soon.

Least Recently Used (LRU): The least recently accessed data is the first to be removed. This strategy works well when the most recently accessed data is more likely to be reaccessed.

Most Recently Used (MRU): The most recently accessed data is the first to be removed. This can be useful in scenarios where the most recent data is likely to be used only once and not needed again.

Least Frequently Used (LFU): The data that is accessed the least number of times is the first to be removed. This strategy helps in keeping the most frequently accessed data in the cache longer.
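Several of these strategies combine naturally. The following minimal sketch shows cache-aside loading together with TTL expiration. It makes three assumptions: `fetch_price` is a hypothetical slow data source, a fake clock replaces `time.monotonic` so the expiry is easy to follow, and the source never returns `None`. It is an illustration, not a production cache; for example, it has no size limit or eviction policy.

```python
import time

class TTLCache:
    """A dictionary-backed cache whose entries expire after `ttl` seconds."""

    def __init__(self, ttl, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock  # injectable clock so expiry can be tested deterministically
        self._entries = {}  # key -> (value, expires_at)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # stale: drop it and report a miss
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (value, self.clock() + self.ttl)


# Hypothetical slow data source; returns a new value each time it is really called
source_calls = []

def fetch_price(symbol):
    source_calls.append(symbol)
    return 100 + len(source_calls)


fake_now = [0.0]
prices = TTLCache(ttl=60, clock=lambda: fake_now[0])

def get_price(symbol):
    """Cache-aside: the application checks the cache and fills it on a miss."""
    value = prices.get(symbol)  # assumes the source never returns None
    if value is None:
        value = fetch_price(symbol)
        prices.set(symbol, value)
    return value

print(get_price("ABC"))  # 101: miss, loaded from the source
fake_now[0] = 30.0
print(get_price("ABC"))  # 101: hit, entry is still fresh
fake_now[0] = 61.0
print(get_price("ABC"))  # 102: entry expired after 60 s, reloaded
```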

5. Python caching using a manual decorator

A decorator in Python is a function that takes another function as an argument and returns a new function. Decorators let us change the behavior of the original function without modifying its source code.

One common use case for decorators is caching. The decorator keeps a dictionary that maps each set of arguments to the function’s result, so later calls with the same arguments return the stored value instead of recomputing it.
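Here is a minimal sketch of such a hand-written caching decorator. The names `memoize` and `square` are illustrative. The sketch supports only hashable positional arguments and never evicts entries.

```python
from functools import wraps

def memoize(func):
    """Cache func's results in a dictionary keyed by its positional arguments."""
    results = {}

    @wraps(func)  # keep the original function's name and docstring
    def wrapper(*args):
        if args not in results:
            results[args] = func(*args)  # compute once, on a cache miss
        return results[args]

    wrapper.cache = results  # expose the dictionary for inspection
    return wrapper


@memoize
def square(x):
    print(f"computing {x}**2")
    return x * x

square(4)  # prints "computing 4**2" and returns 16
square(4)  # returns 16 from the cache without printing
```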

Let’s code a function that computes the n-th Fibonacci number. Here’s the recursive implementation of the Fibonacci sequence:

```python
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)
```

Without caching, the recursive calls result in redundant computations. For example, fibonacci(n-2) is computed once directly and again inside fibonacci(n-1). If the values are cached, it is much more efficient to look them up. For this, you can use the @cache decorator.
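To see how much redundant work the naive recursion does, this sketch adds a global call counter (an illustration-only addition) to the plain version and computes fibonacci(20):

```python
calls = 0

def fibonacci(n):
    global calls
    calls += 1  # count every invocation, including repeated ones
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)

fibonacci(20)
print(calls)  # 21891
```

Only 21 distinct values (fibonacci(0) through fibonacci(20)) are ever needed, yet the naive version makes 21,891 calls. The call count grows exponentially with n.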

The @cache decorator from the functools module (available in Python 3.9+) caches the results of a function. It stores the result of each call, keyed by the call’s arguments, and returns the stored result when the function is called again with the same arguments. @cache places no limit on the cache size; it is equivalent to @lru_cache(maxsize=None). Now, let’s wrap the function with the @cache decorator:

```python
from functools import cache

@cache
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)
```

We’ll get to the performance comparison later. Now let’s see another way to cache return values from functions using the LRU cache decorator.

6. Python caching using LRU cache decorator

Another way to implement caching in Python is the built-in @lru_cache decorator from functools. This decorator uses the least recently used (LRU) caching strategy. The LRU cache has a fixed maximum size: once it is full, it discards the entries that have gone unused the longest.

In LRU caching, when the cache is full and a new item needs to be added, the least recently used item is removed to make room for it. This keeps recently used items in the cache, on the assumption that they are the ones most likely to be needed again soon.
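To make the mechanism concrete, here is a minimal LRU cache built on `collections.OrderedDict`. It is an illustrative sketch of the eviction logic, not the standard library’s implementation of `functools.lru_cache`.

```python
from collections import OrderedDict

class LRUCache:
    """A fixed-size cache that evicts the least recently used key when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._data = OrderedDict()  # ordered from least to most recently used

    def get(self, key, default=None):
        if key not in self._data:
            return default
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key, value):
        if key in self._data:
            self._data.move_to_end(key)
        self._data[key] = value
        if len(self._data) > self.capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def keys(self):
        return list(self._data)

cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")      # "a" becomes the most recently used key
cache.put("c", 3)   # cache is full, so "b" (least recently used) is evicted
print(cache.keys())  # ['a', 'c']
```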

The @lru_cache decorator is similar to @cache, but it lets you set the maximum cache size through the maxsize argument (the default is 128). Once the cache reaches this size, the least recently used items are discarded. This is useful when you want to limit memory usage.

Here, the fibonacci function caches up to the 7 most recently used results:

```python
from functools import lru_cache

@lru_cache(maxsize=7)  # Cache up to 7 most recent results
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)

fibonacci(5)  # Computes fibonacci(5) and caches the intermediate results
fibonacci(3)  # Retrieves fibonacci(3) from the cache
```

Here, the fibonacci function is decorated with @lru_cache(maxsize=7), so it keeps at most the 7 most recently used results. Calling fibonacci(5) computes and caches the results for fibonacci(5), fibonacci(4), fibonacci(3), fibonacci(2), fibonacci(1), and fibonacci(0), which is six entries in total.

When fibonacci(3) is called afterward, its result is retrieved from the cache. Only six entries are stored, so nothing has been evicted yet and no recomputation is needed.
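You can check this with the `cache_info()` and `cache_clear()` methods that functools attaches to every function decorated with `@lru_cache` or `@cache`. A short sketch repeating the example above:

```python
from functools import lru_cache

@lru_cache(maxsize=7)
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n - 1) + fibonacci(n - 2)

fibonacci(5)
print(fibonacci.cache_info())  # CacheInfo(hits=3, misses=6, maxsize=7, currsize=6)
fibonacci(3)
print(fibonacci.cache_info())  # CacheInfo(hits=4, misses=6, maxsize=7, currsize=6)
fibonacci.cache_clear()        # empty the cache and reset the statistics
```

The six misses are the first computations of fibonacci(0) through fibonacci(5). The three hits during fibonacci(5) are reused subresults, and the final call to fibonacci(3) adds a fourth hit.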

7. Function Calls Timing Comparison

Now let’s compare the execution times of the functions with and without caching. For this example, we don’t pass an explicit maxsize to @lru_cache, so it uses the default value of 128:

```python
from functools import cache, lru_cache
import timeit

# Without caching
def fibonacci_no_cache(n):
    if n <= 1:
        return n
    return fibonacci_no_cache(n - 1) + fibonacci_no_cache(n - 2)

# With @cache (unbounded)
@cache
def fibonacci_cache(n):
    if n <= 1:
        return n
    return fibonacci_cache(n - 1) + fibonacci_cache(n - 2)

# With @lru_cache (maxsize defaults to 128)
@lru_cache
def fibonacci_lru_cache(n):
    if n <= 1:
        return n
    return fibonacci_lru_cache(n - 1) + fibonacci_lru_cache(n - 2)
```

To compare the execution times, we’ll use the timeit function from the timeit module:

```python
# Compute the n-th Fibonacci number
n = 35

# number=1 times a single call, so each cached version starts from an empty cache
no_cache_time = timeit.timeit(lambda: fibonacci_no_cache(n), number=1)
cache_time = timeit.timeit(lambda: fibonacci_cache(n), number=1)
lru_cache_time = timeit.timeit(lambda: fibonacci_lru_cache(n), number=1)

print(f"Time without cache: {no_cache_time:.6f} seconds")
print(f"Time with cache: {cache_time:.6f} seconds")
print(f"Time with LRU cache: {lru_cache_time:.6f} seconds")
```

```
Time without cache: 7.562178 seconds
Time with cache: 0.000062 seconds
Time with LRU cache: 0.000020 seconds
```

We see a significant difference in the execution times. The uncached call takes several seconds, while both cached versions (@cache and @lru_cache) finish in a fraction of a millisecond, with comparable times. The gap widens quickly as n grows. The naive recursion makes an exponential number of calls, whereas the cached versions compute each value from fibonacci(0) to fibonacci(n) only once.
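A single run (number=1) is a fair cold-cache measurement, because each cached function starts with an empty cache. Single timings are noisy, though, so the small gap between @cache and @lru_cache above is likely just noise. A sketch of a more careful measurement follows. It uses `timeit.repeat` with `cache_clear` as the setup so every repetition starts cold, plus a separate warm-cache timing for comparison. The repetition counts are arbitrary choices.

```python
import timeit
from functools import cache

@cache
def fibonacci_cache(n):
    if n <= 1:
        return n
    return fibonacci_cache(n - 1) + fibonacci_cache(n - 2)

n = 35
# setup runs before each of the 5 repetitions, so every measurement starts with an
# empty cache; number=1 ensures no call within a repetition benefits from a prior one
times = timeit.repeat(lambda: fibonacci_cache(n), setup=fibonacci_cache.cache_clear,
                      repeat=5, number=1)
print(f"Best cold-cache time: {min(times):.6f} seconds")

# Without clearing, each call is a pure cache lookup
warm = timeit.timeit(lambda: fibonacci_cache(n), number=1000)
print(f"Average warm-cache time: {warm / 1000:.9f} seconds")
```

Taking the minimum of several repetitions is a common way to reduce the influence of background activity on the measurement.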

8. Use Caching to Speed up Your LLM Application

The same idea can speed up your LLM application. As your application grows in popularity and handles more traffic, the cost of LLM API calls can become substantial. LLM services can also be slow to respond, especially under a high volume of requests.

To tackle this challenge, you can use the GPTCache package, which builds a semantic cache for storing LLM responses. GPTCache first embeds the input into a vector and then runs an approximate vector search over the cache storage. It evaluates the similarity between the query and the retrieved results, and it returns a cached response when the similarity reaches the configured threshold.
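The lookup flow described above can be sketched in a few lines. This is a toy illustration, not GPTCache’s API or implementation. It makes three simplifying assumptions: a bag-of-words count vector replaces a real embedding model, a linear scan replaces approximate nearest-neighbor search, and the threshold of 0.8 is arbitrary.

```python
import math
import re
from collections import Counter

def embed(text):
    """Toy 'embedding': word counts (a real semantic cache uses a neural embedding model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class SemanticCache:
    def __init__(self, threshold=0.8):
        self.threshold = threshold
        self.entries = []  # list of (embedding, response)

    def lookup(self, query):
        q = embed(query)
        # Linear scan stands in for the approximate vector search of a real vector store
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        # Similarity evaluation: only return a cached answer above the threshold
        return best_response if best_score >= self.threshold else None

    def store(self, query, response):
        self.entries.append((embed(query), response))

cache = SemanticCache(threshold=0.8)
cache.store("what is github", "GitHub is a code hosting platform.")
print(cache.lookup("What is GitHub?"))         # hit: identical words after normalization
print(cache.lookup("what is github exactly"))  # hit: similarity is about 0.87
print(cache.lookup("what is gitlab"))          # None: similarity is about 0.67
```

On a miss, the application would call the LLM and `store` the new question and answer.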

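To use it, initialize the cache and import openai from gptcache.adapter rather than from the openai package. With this default setup, GPTCache uses a map data manager that matches only exact questions. Note that this example requires the legacy openai package, version 0.28.1 (pip install openai==0.28.1), because it relies on the pre-1.0 openai.ChatCompletion interface.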

After that, if you ask ChatGPT the exact same question twice, the second answer is served from the cache without sending another request to ChatGPT.

```python
import time

def response_text(openai_resp):
    return openai_resp['choices'][0]['message']['content']

print("Cache loading.....")

# To use GPTCache, that's all you need
# -------------------------------------------------
from gptcache import cache
from gptcache.adapter import openai

cache.init()
cache.set_openai_key()  # reads the API key from the OPENAI_API_KEY environment variable
# -------------------------------------------------

question = "what's github"
for _ in range(2):
    start_time = time.time()
    response = openai.ChatCompletion.create(
        model='gpt-3.5-turbo',
        messages=[
            {
                'role': 'user',
                'content': question
            }
        ],
    )
    print(f'Question: {question}')
    print("Time consuming: {:.2f}s".format(time.time() - start_time))
    print(f'Answer: {response_text(response)}\n')
```

```
Cache loading.....
Question: what's github
Time consuming: 6.04s
Answer: GitHub is an online platform where developers can share and collaborate on software development projects. It is used as a hub for code repositories and includes features such as issue tracking, code review, and project management tools. GitHub can be used for open source projects, as well as for private projects within organizations. GitHub has become an essential tool within the software development industry and has over 40 million registered users as of 2021.

Question: what's github
Time consuming: 0.00s
Answer: GitHub is an online platform where developers can share and collaborate on software development projects. It is used as a hub for code repositories and includes features such as issue tracking, code review, and project management tools. GitHub can be used for open source projects, as well as for private projects within organizations. GitHub has become an essential tool within the software development industry and has over 40 million registered users as of 2021.
```

The first request took 6.04 seconds, a typical round trip to a hosted LLM API. The second, identical request was answered from the cache in well under 0.01 seconds. Because it never reached the API, it also incurred no API cost.

