Depth estimation is the process of determining the distance of objects from a viewpoint, which is crucial for various applications in computer vision, including autonomous driving, augmented reality, and 3D reconstruction.

This article provides a practical guide to building a zero-shot depth estimation application using the Dense Prediction Transformer (DPT) model and Gradio.

It begins with an introduction to the concepts and importance of depth estimation, followed by a step-by-step setup of the working environment. The article then delves into implementing depth estimation using the DPT model and concludes with instructions on creating an interactive demo using Gradio.

This guide is particularly beneficial for developers, researchers, and machine learning enthusiasts who seek to develop depth estimation applications without requiring extensive datasets or deep technical expertise.

Table of Contents:

Introduction to Depth Estimation

Setting up the Working Environment

Depth Estimation using DPT

Building a Demo Using Gradio

1. Introduction to Depth Estimation

Depth estimation is a computer vision technique used to determine the distance of objects in a scene relative to the camera. This process essentially involves predicting the depth (distance from the camera) for each pixel in an image, thereby enabling the creation of a 3D representation from 2D data. Depth estimation is fundamental to various applications, including autonomous driving, robotics, augmented reality, and 3D scene reconstruction.
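To make the step from per-pixel depth to a 3D representation concrete, here is a minimal sketch that back-projects one pixel into a 3D point using the standard pinhole camera model. The function name and the intrinsics (fx, fy, cx, cy) are hypothetical values chosen for illustration, and the sketch assumes the depth value is metric; a model that predicts relative depth would need to be rescaled first.

```python
def backproject_pixel(u, v, depth, fx, fy, cx, cy):
    """Map pixel (u, v) with depth Z to a point (X, Y, Z) in the camera frame.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Hypothetical intrinsics for a 640x480 image (illustration only).
point = backproject_pixel(u=420, v=240, depth=2.0,
                          fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point)
```

Applying the same mapping to every pixel of a depth map yields a point cloud of the scene.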

There are six main types of depth estimation, each with its own applications and pros and cons. Let's explore each of them briefly:

1. Monocular Depth Estimation:

Input: A single image.

Challenge: This is the hardest form of depth estimation because a single image carries no explicit geometric information about the scene, such as the parallax between two views. Instead, depth has to be inferred from features learned on a dataset where depth information is available.

Methods: Typically involves deep learning models such as Convolutional Neural Networks (CNNs) or Transformers that have been trained on large datasets with depth labels.

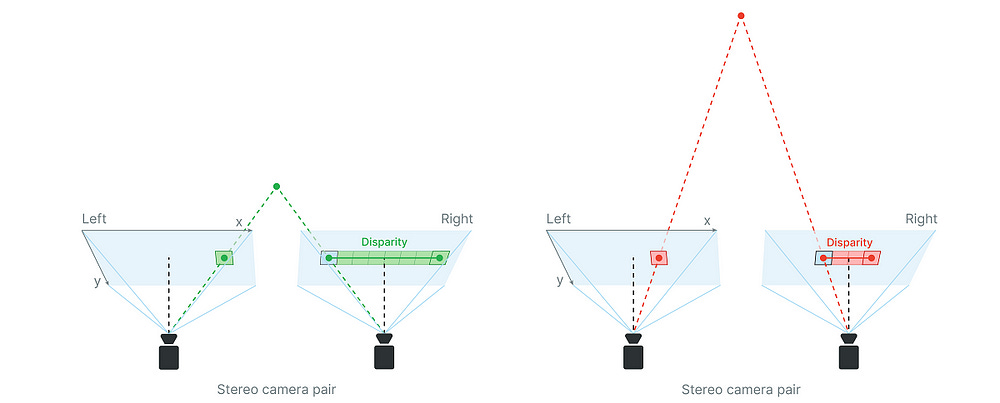

2. Stereo Depth Estimation:

Input: A pair of images taken from slightly different perspectives (similar to human binocular vision).

Method: The process involves matching corresponding points between the two images (stereo matching) and using triangulation to estimate the distance to these points.

Challenge: The main challenge is finding accurate correspondences between the images, especially in textureless or repetitive areas.

3. Multi-View Stereo (MVS):

Input: Multiple images taken from different viewpoints.

Method: MVS involves combining depth information from several images to produce a dense 3D reconstruction. It leverages multiple correspondences between images to refine depth estimates.

Applications: Used in photogrammetry, 3D scanning, and structure-from-motion techniques.

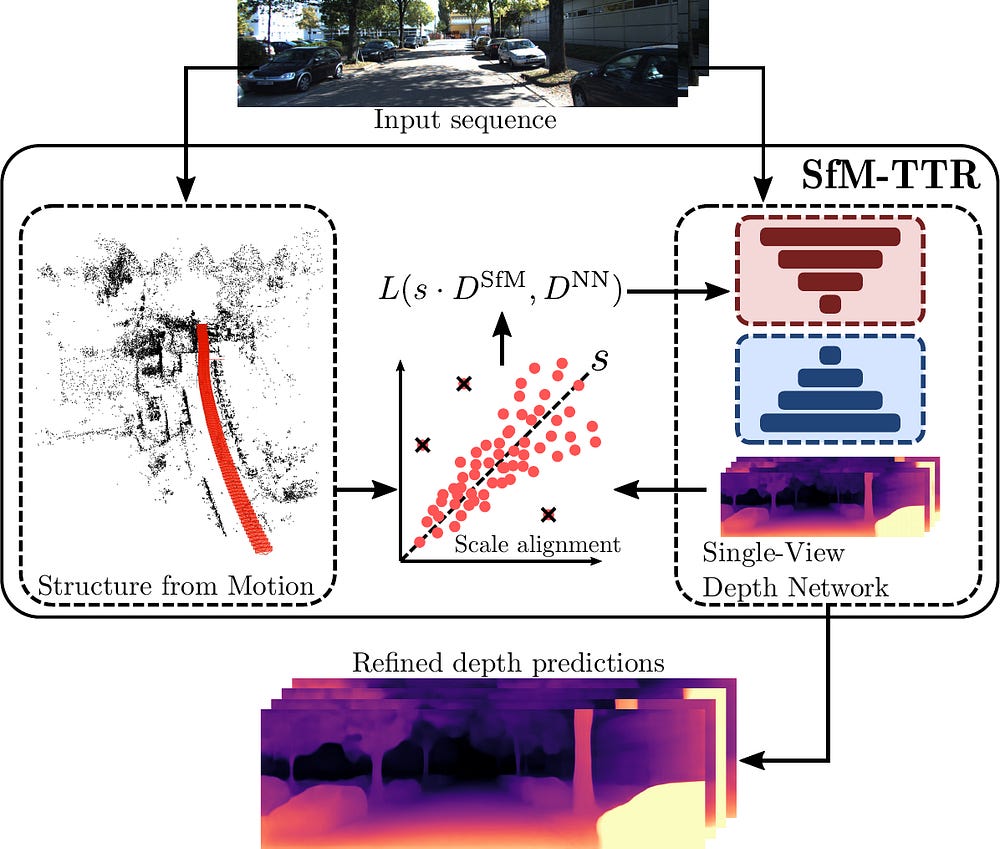

4. Depth from Motion (Structure-from-Motion, SfM):

Input: A sequence of images from a moving camera.

Method: This technique uses the relative motion between frames to estimate depth. It typically requires estimating both the camera’s motion and the 3D structure simultaneously.

Application: Commonly used in robotics and drone navigation.

5. Time-of-Flight (ToF) Sensors:

Input: Depth measurements captured directly by the sensor, which times how long light (usually infrared) takes to travel to an object and back.

Advantages: Provides accurate depth information in real-time.

Disadvantages: It can be expensive and may have issues with reflective or transparent surfaces.

6. Lidar (Light Detection and Ranging):

Input: Similar to ToF, Lidar sensors emit laser pulses and measure the time they take to reflect back.

Application: Widely used in autonomous vehicles for precise distance measurement of surrounding objects.
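Two of the approaches above reduce to short formulas, sketched here with hypothetical function names and example numbers. For a rectified stereo pair, triangulation gives depth = focal length × baseline / disparity. For time-of-flight and Lidar sensors, distance = speed of light × round-trip time / 2. This is a simplified illustration that ignores calibration error and sensor noise.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth(focal_length_px, baseline_m, disparity_px):
    """Depth in meters from a rectified stereo pair via triangulation.

    focal_length_px: focal length in pixels
    baseline_m: distance between the two camera centers in meters
    disparity_px: horizontal shift of the matched point between the images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px


def time_of_flight_distance(round_trip_s):
    """Distance in meters from the round-trip travel time of a light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels to the object and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


# Hypothetical example values (illustration only).
print(stereo_depth(focal_length_px=700.0, baseline_m=0.12, disparity_px=42.0))
print(time_of_flight_distance(round_trip_s=20e-9))
```

Note how depth is inversely proportional to disparity: distant points shift very little between the two views, so small matching errors there translate into large depth errors.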

Depth estimation has practical applications across many domains. Here are some of the most common:

Autonomous Driving: Understanding the environment in 3D helps in object detection, path planning, and obstacle avoidance.

Augmented Reality (AR): Depth estimation enables the placement of virtual objects in the real world, maintaining their position relative to the user’s viewpoint.

Robotics: Robots use depth estimation to navigate environments, avoid obstacles, and interact with objects.

3D Reconstruction: Creating 3D models from 2D images for virtual reality (VR), gaming, and heritage preservation.

Medical Imaging: Depth information is crucial for reconstructing 3D models of anatomical structures from 2D scans.

Depth estimation also comes with several challenges. Here are some of the most important:

Occlusions: Handling areas where one object blocks another from view.

Textureless Regions: Difficulties arise in regions with little to no texture, making it hard to match corresponding points in stereo or multi-view systems.

Computational Complexity: High-resolution depth estimation can be computationally expensive, requiring powerful hardware, especially in real-time applications.

Generalization: Models trained on one type of scene may not generalize well to other types without retraining or fine-tuning.

2. Setting up the Working Environment

Let’s start by setting up the working environment. First, we will install the packages used in this article: the Transformers package, Gradio for deploying the demo of the application, and timm and torchvision, which install PyTorch as a dependency.

!pip install transformers

!pip install gradio

!pip install timm

!pip install torchvision
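After installation, a quick check can confirm that the packages are importable before moving on. This is a small sketch using only the Python standard library; the helper name check_packages and the REQUIRED tuple are illustrative and not part of any of the installed libraries.

```python
import importlib.util

# Top-level import names of the packages installed above.
REQUIRED = ("transformers", "gradio", "timm", "torch", "torchvision")


def check_packages(names=REQUIRED):
    """Return a dict mapping each package name to True if it can be imported."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


for name, available in check_packages().items():
    print(f"{name}: {'installed' if available else 'missing'}")
```

If any package is reported as missing, rerun the corresponding install command and restart the notebook runtime.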