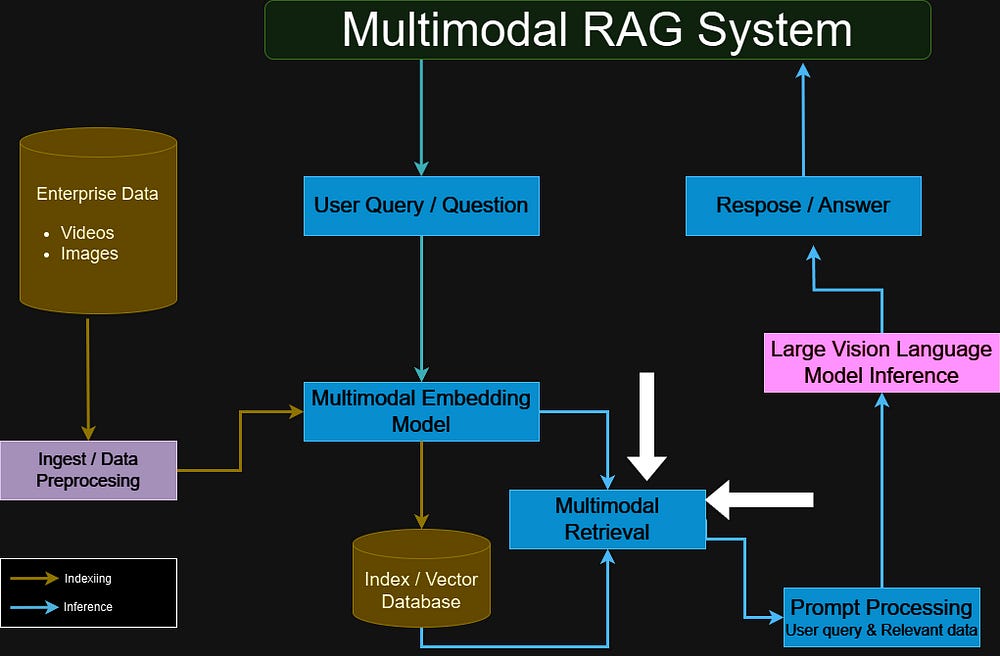

Multimodal RAG combines textual and visual data to enrich the retrieval process, enhancing large language models’ ability to generate more contextually accurate and detailed responses by accessing multiple data types.

This article, the fifth in an ongoing series on building Multimodal Retrieval-Augmented Generation (RAG) applications, dives into the essentials of setting up multimodal retrieval using vector stores.

Starting with environment setup, this guide covers installing and configuring the LanceDB vector database, a robust solution for managing and querying multimodal data. Next, it demonstrates how to ingest both text and image data into LanceDB using LangChain, a popular framework for managing LLM workflows.

The article concludes with a practical walkthrough of performing multimodal retrieval, enabling efficient searches across both text and image data, which can significantly enhance RAG applications by leveraging rich, diverse information sources.

This article is the fifth in the ongoing series on Building Multimodal RAG Applications:

Introduction to Multimodal RAG Applications (Published)

Multimodal Embeddings (Published)

Multimodal RAG Application Architecture (Published)

Processing Videos for Multimodal RAG (Published)

Multimodal Retrieval from Vector Stores (You are here!)

Large Vision Language Models (LVLMs) (Coming soon!)

Multimodal RAG with Multimodal LangChain (Coming soon!)

Putting it All Together! Building Multimodal RAG Application (Coming soon!)

You can find the code and datasets used in this series in this GitHub Repo

Table of Contents:

Setting Up the Working Environment

Setting Up the LanceDB Vector Database

Ingesting Data into LanceDB Using LangChain

Multimodal Retrieval Using LangChain

My New E-Book: LLM Roadmap from Beginner to Advanced Level

I am pleased to announce that I have published my new ebook LLM Roadmap from Beginner to Advanced Level. This ebook will provide all the resources you need to start your journey towards mastering LLMs.

1. Setting Up the Working Environment

The first step is to set up the working environment for the rest of this tutorial. We will import the packages we need, define two helper functions, and finally wrap the multimodal embedding code from earlier in the series in a single class so that it is easy to reuse.

Let's start by importing these four packages:

LanceDB: The Database for Multimodal AI

json: To load JSON files

os: Miscellaneous operating system interfaces to define file paths

PIL: To load images easily

```python
import json
import os

import lancedb
from PIL import Image
```

Next, we will define the first helper function, load_json_file, which takes the path to a JSON file and returns the parsed JSON data.

```python
def load_json_file(file_path):
    # Open the JSON file in read mode and parse its contents
    with open(file_path, 'r') as file:
        data = json.load(file)
    return data
```

The second helper function is display_retrieved_results, which we will use to display the results retrieved from the vector database. For each result, it prints the caption of the retrieved video frame together with its rank (useful when there are multiple results) and then shows the corresponding video frame.
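display_retrieved_results reads each frame path from res.metadata['metadata']['extracted_frame_path'], which suggests that every retrieved document carries the original frame metadata nested under a 'metadata' key. To make that shape concrete, the sketch below uses a hypothetical FakeDoc class in place of LangChain's Document, made-up captions and frame paths, and a hypothetical text-only variant, describe_retrieved_results, that returns lines instead of rendering images, so it runs outside a notebook.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class FakeDoc:
    """Hypothetical stand-in for a retrieved LangChain Document."""
    page_content: str
    metadata: Dict[str, Any] = field(default_factory=dict)


def describe_retrieved_results(results: List[FakeDoc]) -> List[str]:
    """Text-only variant of display_retrieved_results: one line per result."""
    lines = [f"There is/are {len(results)} retrieved result(s)"]
    for i, res in enumerate(results, start=1):
        # Same nested lookup as display_retrieved_results
        frame_path = res.metadata["metadata"]["extracted_frame_path"]
        lines.append(f'{i}. "{res.page_content}" -> {frame_path}')
    return lines


# Made-up example data in the nested shape the helper expects
docs = [
    FakeDoc("an astronaut waves at the camera",
            {"metadata": {"extracted_frame_path": "frames/frame_7.jpg"}}),
    FakeDoc("the earth seen from orbit",
            {"metadata": {"extracted_frame_path": "frames/frame_12.jpg"}}),
]
for line in describe_retrieved_results(docs):
    print(line)
```

If a retrieved document lacks the nested key, this lookup (like the original helper) raises a KeyError, which is a quick way to spot a mismatch between how the data was ingested and how it is read back.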

```python
from IPython.display import display  # available by default in Jupyter notebooks

def display_retrieved_results(results):
    print(f'There is/are {len(results)} retrieved result(s)')
    print()
    for i, res in enumerate(results):
        print(f'The caption of the {i+1}-th retrieved result is:\n"{res.page_content}"')
        print()
        # The frame path is stored in the nested metadata of each retrieved document
        display(Image.open(res.metadata['metadata']['extracted_frame_path']))
        print("------------------------------------------------------------")
```

The final step is to wrap the multimodal embedding function from earlier in the series in a BridgeTowerEmbeddings class. We will use this class to create multimodal embeddings for our video frames and their corresponding captions before storing them in a multimodal vector database.

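```python
import base64
from io import BytesIO
from typing import List

from langchain_core.embeddings import Embeddings
# Note: newer langchain-core releases deprecate pydantic_v1; there, import BaseModel from pydantic instead
from langchain_core.pydantic_v1 import BaseModel
from PIL import Image
from tqdm import tqdm

# _getPredictionGuardClient and isBase64 are not defined in this article;
# they are assumed to come from the utility module in the series' GitHub repo.


def bt_embedding_from_prediction_guard(prompt, base64_image):
    # Get the PredictionGuard client
    client = _getPredictionGuardClient()
    message = {"text": prompt}
    if base64_image is not None and base64_image != "":
        if not isBase64(base64_image):
            raise TypeError("image input must be in base64 encoding!")
        message['image'] = base64_image
    # Request a BridgeTower embedding for the text (and the optional image)
    response = client.embeddings.create(
        model="bridgetower-large-itm-mlm-itc",
        input=[message],
    )
    return response['data'][0]['embedding']
```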

```python
# Encode an image, given either a file path or a PIL Image, as a base64 string
def encode_image(image_path_or_PIL_img):
    if isinstance(image_path_or_PIL_img, Image.Image):
        # This is a PIL image: serialize it to JPEG in memory first
        buffered = BytesIO()
        image_path_or_PIL_img.save(buffered, format="JPEG")
        return base64.b64encode(buffered.getvalue()).decode('utf-8')
    else:
        # This is an image path: read the raw file bytes
        with open(image_path_or_PIL_img, "rb") as image_file:
            return base64.b64encode(image_file.read()).decode('utf-8')
```
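bt_embedding_from_prediction_guard rejects any image string for which isBase64 returns False, but isBase64 itself is not shown in this article. Below is a minimal sketch of such a check, assuming strict validation is what we want; is_base64 is a hypothetical name, not the series' own helper. It decodes with base64.b64decode(..., validate=True), which rejects characters outside the base64 alphabet, and then requires that re-encoding reproduces the input exactly.

```python
import base64
import binascii


def is_base64(s: str) -> bool:
    """Hypothetical strict base64 check (not the series' isBase64 helper)."""
    try:
        # validate=True raises on characters outside the base64 alphabet
        decoded = base64.b64decode(s, validate=True)
    except (binascii.Error, ValueError):
        # Bad characters, bad padding, or non-ASCII input
        return False
    # Round-trip check: re-encoding must give back exactly the same string
    return base64.b64encode(decoded).decode("ascii") == s


encoded = base64.b64encode(b"fake jpeg bytes").decode("utf-8")
print(is_base64(encoded))        # True
print(is_base64("not base64!"))  # False
```

Strings produced by encode_image pass this check because they come straight from base64.b64encode. The round-trip requirement is deliberately strict: it also rejects base64 with embedded line breaks, which a looser check might accept.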

```python
class BridgeTowerEmbeddings(BaseModel, Embeddings):
    """BridgeTower embedding model."""

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        """Embed a list of documents using BridgeTower.

        Args:
            texts: The list of texts to embed.

        Returns:
            List of embeddings, one for each text.
        """
        embeddings = []
        for text in texts:
            # Text-only embedding: pass an empty image
            embedding = bt_embedding_from_prediction_guard(text, "")
            embeddings.append(embedding)
        return embeddings

    def embed_query(self, text: str) -> List[float]:
        """Embed a query using BridgeTower.

        Args:
            text: The text to embed.

        Returns:
            Embeddings for the text.
        """
        return self.embed_documents([text])[0]

    def embed_image_text_pairs(self, texts: List[str], images: List[str], batch_size=2) -> List[List[float]]:
        """Embed a list of image-text pairs using BridgeTower.

        Args:
            texts: The list of texts to embed.
            images: The list of paths to the images to embed.
            batch_size: The batch size to process, default to 2
                (not used by this loop, which sends one pair per request).

        Returns:
            List of embeddings, one for each image-text pair.
        """
        # The number of texts must equal the number of images
        assert len(texts) == len(images), "the len of captions should be equal to the len of images"
        embeddings = []
        for path_to_img, text in tqdm(zip(images, texts), total=len(texts)):
            embedding = bt_embedding_from_prediction_guard(text, encode_image(path_to_img))
            embeddings.append(embedding)
        return embeddings
```

Now that our project environment is ready, we can move on to the next step and set up the core of this component of our multimodal RAG application: the vector store, or vector database.
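Before bringing in LanceDB, it may help to see what a vector store does with embeddings like these. The sketch below is a toy, in-memory illustration, not LanceDB's or LangChain's API. KeywordEmbeddings is a hypothetical offline embedder that mimics the embed_documents/embed_query method names of BridgeTowerEmbeddings but uses keyword counts instead of BridgeTower, and ToyVectorStore (also hypothetical) ranks stored captions by cosine similarity to the query embedding. The captions and frame paths are made up. In the real pipeline, BridgeTower places captions and frames in one shared space, which is what lets a text query retrieve video frames.

```python
import math
from typing import Dict, List, Tuple


class KeywordEmbeddings:
    """Hypothetical offline embedder with the same method names as BridgeTowerEmbeddings.

    Each vector counts occurrences of a fixed keyword list (a toy bag of words).
    """

    def __init__(self, vocabulary: List[str]):
        self.vocabulary = vocabulary

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        vectors = []
        for text in texts:
            tokens = text.lower().split()
            vectors.append([float(tokens.count(word)) for word in self.vocabulary])
        return vectors

    def embed_query(self, text: str) -> List[float]:
        # Same pattern as BridgeTowerEmbeddings: a query is a one-element batch
        return self.embed_documents([text])[0]


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Brute-force store: embeds texts on insert, ranks rows by cosine similarity on search."""

    def __init__(self, embedder: KeywordEmbeddings):
        self.embedder = embedder
        self.rows: List[Tuple[List[float], str, Dict[str, str]]] = []

    def add_texts(self, texts: List[str], metadatas: List[Dict[str, str]]) -> None:
        vectors = self.embedder.embed_documents(texts)
        for vector, text, meta in zip(vectors, texts, metadatas):
            self.rows.append((vector, text, meta))

    def similarity_search(self, query: str, k: int = 1) -> List[Tuple[str, Dict[str, str]]]:
        query_vector = self.embedder.embed_query(query)
        ranked = sorted(self.rows, key=lambda row: cosine_similarity(query_vector, row[0]), reverse=True)
        return [(text, meta) for _, text, meta in ranked[:k]]


# Made-up captions and frame paths, for illustration only
store = ToyVectorStore(KeywordEmbeddings(["astronaut", "space", "station", "dog", "beach"]))
store.add_texts(
    ["an astronaut floating inside the space station", "a dog running on the beach"],
    [{"extracted_frame_path": "frames/frame_0.jpg"}, {"extracted_frame_path": "frames/frame_1.jpg"}],
)
print(store.similarity_search("astronaut in the space station", k=1))
# [('an astronaut floating inside the space station', {'extracted_frame_path': 'frames/frame_0.jpg'})]
```

The brute-force sort compares the query against every stored row. A vector database such as LanceDB persists the vectors in tables and can build approximate nearest-neighbor indexes, so search stays fast as the collection grows.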
