Building an MCP-Powered Agentic RAG Application: Step-by-Step Guide (1/2)

While many tutorials show how to build Retrieval-Augmented Generation (RAG) systems by connecting a language model to a single database, this approach is often too limited.

This guide goes a step further with Agentic RAG. Instead of building a system that only retrieves information, you’ll build one that intelligently decides where to look. It’s the difference between an AI that can only read the one book it was assigned and an AI that knows exactly which book to pull from the library.

To achieve this, we will use the Model Context Protocol (MCP), an open standard for connecting AI applications to external tools and data sources, which makes it well suited to building multi-tool agents. In this step-by-step tutorial, we will walk you through the entire process:

Setting up a complete MCP server.

Defining custom tools for both private data and live web searches.

Constructing a full RAG pipeline that puts the agent in control.

By the end, you’ll have a working application that can handle complex queries by dynamically choosing where to source its context, so that its answers are grounded in the most relevant information available.

Table of Contents:

Introduction to Model Context Protocol (MCP)

MCP-Powered Agentic RAG Architecture Overview

Setting Up the Working Environment

Setting Up an MCP Server Instance

Defining MCP Tools

Building the RAG Pipeline

Putting Everything Together and Testing the System


1. Introduction to Model Context Protocol (MCP)

MCP, or Model Context Protocol, is an open standard that enables large language models (LLMs) to securely access and utilize external data, tools, and services.

Think of it as a universal connector, like USB-C for AI, allowing different AI applications to interact with various data sources and functionalities. It essentially standardizes how AI models receive and use context, such as files, prompts, or callable functions, to perform tasks more effectively.

Standardized Connection: MCP provides a consistent way for AI models to interact with external systems, regardless of the specific AI model or the underlying technology.

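Secure Access: MCP defines clear boundaries for how AI models interact with external data and tools. A server exposes only the capabilities it chooses to, and the specification calls for hosts to obtain user consent before invoking tools; actual enforcement depends on how each client and server is implemented.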

Two-Way Communication: MCP facilitates both sending information to the AI model (context) and receiving results or actions back from the model, enabling dynamic and interactive AI applications.

Ecosystem of Tools: MCP is designed to foster a vibrant ecosystem of “MCP servers,” which are applications or services that expose their functionality or data through the MCP standard, and “MCP clients,” which are the AI applications that connect to these servers.
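To make "standardized connection" and "two-way communication" more concrete: MCP messages are JSON-RPC 2.0 objects. The sketch below builds the two requests a client typically sends to work with tools, `tools/list` (discovery) and `tools/call` (invocation), plus an illustrative result coming back. It is a simplified sketch: a real session begins with an `initialize` handshake, and the transport (stdio or HTTP) is normally handled by an MCP SDK rather than by hand. The tool name `web_search`, its `query` argument, and the response text are hypothetical.

```python
import json
from typing import Any, Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the message format MCP uses."""
    message: dict[str, Any] = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def extract_text(response: dict) -> str:
    """Join the text items from a tools/call result's content list."""
    items = response["result"]["content"]
    return "\n".join(item["text"] for item in items if item.get("type") == "text")


# Client -> server: ask which tools the server exposes.
list_request = make_request(1, "tools/list")

# Client -> server: invoke one tool by name with JSON arguments.
# "web_search" and its "query" argument are hypothetical names.
call_request = make_request(
    2, "tools/call", {"name": "web_search", "arguments": {"query": "Model Context Protocol"}}
)

# Server -> client: an illustrative (made-up) tools/call result.
call_response = {
    "jsonrpc": "2.0",
    "id": 2,
    "result": {"content": [{"type": "text", "text": "Example search snippet"}], "isError": False},
}

print(json.dumps(call_request, indent=2))
print(extract_text(call_response))  # Example search snippet
```

Because every server speaks this same message shape, a client can discover and call tools on any MCP server without server-specific glue code.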

2. MCP-Powered Agentic RAG Architecture Overview

Before we roll up our sleeves and dive into the code, it’s crucial to understand the high-level architecture of our MCP-powered Agentic RAG application. This system is designed to be intelligent, resourceful, and responsive.

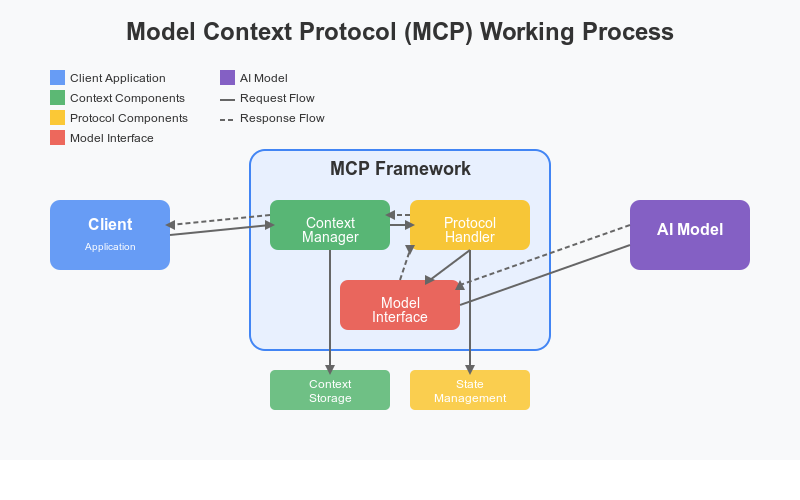

The diagram above illustrates the complete workflow, from the user’s initial question to the final, context-enriched answer.

The architecture is composed of several key components that work in concert:

The User: The starting point of the entire process. A user has a question and interacts with the system via a front-end application (e.g., a web interface on their laptop).

MCP Client: This is the user-facing part of our application. It’s responsible for receiving the user’s Query, communicating with the backend server, and ultimately presenting the final Response back to the user.

MCP Server (The Agent): This is the core of our system — the “brain” of the operation. When the MCP Server receives a request, it doesn’t just try to answer from its own pre-trained knowledge. Instead, it acts as an agent, intelligently deciding if it needs more information to provide a high-quality answer.

Tools: These are external resources the MCP Server can use to find information. Our architecture features two powerful tools:

Vector DB search MCP tool: This tool connects to a specialized vector database. This database is ideal for storing and searching through your private documents, internal knowledge bases, or any custom text corpus. It excels at finding semantically similar information (the “Retrieval” in RAG).

Web search MCP tool: When the answer isn’t in our private data, this tool allows the agent to search the live internet for the most current and relevant public information.
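Conceptually, the Vector DB search tool turns the query into a vector, compares it with the stored document vectors, and returns the closest matches. The dependency-free sketch below imitates that loop with bag-of-words vectors and cosine similarity. This is an assumption made purely for illustration: bag-of-words scoring is lexical matching, not semantic search, and a real setup would use an embedding model and the vector database running in Docker. `InMemoryVectorStore`, the stopword list, and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter

# Tiny stopword list so filler words don't dominate the toy scores.
STOPWORDS = {"a", "an", "the", "is", "what", "of", "to", "our", "in", "on"}


def embed(text: str) -> Counter:
    """Toy 'embedding': counts of lowercase, non-stopword tokens."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(t for t in tokens if t not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database collection."""

    def __init__(self) -> None:
        self._docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._docs.append((text, embed(text)))

    def search(self, query: str, top_k: int = 3) -> list[tuple[str, float]]:
        """Return the top_k (document, score) pairs, best match first."""
        q = embed(query)
        scored = [(text, cosine(q, vec)) for text, vec in self._docs]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


store = InMemoryVectorStore()
store.add("Our refund policy allows returns within 30 days of purchase.")
store.add("The engineering team runs the vector database locally with Docker.")
store.add("Quarterly revenue grew in the third quarter.")

for text, score in store.search("What is the refund policy?", top_k=2):
    print(f"{score:.2f}  {text}")
```

Swapping `embed` for a real embedding model and the list for a vector database changes the quality and scale of retrieval, but not the shape of the tool: text in, ranked snippets out.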

Now, let’s trace the path of a request through the system:

1. Query Submission: The user types a question (“Query”) into the application.

2. Client to Server Communication: The MCP Client receives the query and forwards it to the MCP Server for processing.

3. The Agentic Decision (Tool Call): This is where the magic happens. The MCP Server analyzes the query and determines the best course of action. It makes a “Tool call,” deciding whether it should:

Query the Vector DB for internal, proprietary knowledge.

Perform a Web search for real-time, public information.

Use a combination of both, or none at all if the question is general enough.

4. Information Retrieval (The “R” in RAG): The selected tool(s) execute the search and retrieve relevant documents, articles, or data snippets. This retrieved information is the “context.”

5. Context Augmentation: The retrieved context is sent back and used to augment the original request. The system now has both the user’s original query and a rich set of relevant information.

6. Response Generation: The MCP Client, now armed with this powerful context, generates a comprehensive and accurate answer. This process is known as “Retrieval-Augmented Generation” (RAG) because the final response is generated based on the retrieved information.

7. Final Answer: The final, context-aware Response is sent back to the user, providing an answer that is not just plausible, but well-supported by real data.
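The flow from the agentic decision through the final answer can be sketched end to end as below. It is a minimal sketch under stated assumptions: the keyword-based `choose_tools` router is a hypothetical stand-in for the model's own tool-calling decision (in a real MCP setup the LLM picks tools from their names and descriptions), both tools return canned data, and `generate` is any callable that sends a prompt to an LLM. None of these names come from the article's own code.

```python
import re
from typing import Callable

# Hypothetical cue words standing in for the LLM's tool-selection judgment.
PRIVATE_CUES = {"our", "internal", "policy", "company", "handbook"}
FRESH_CUES = {"latest", "today", "current", "news", "recent"}


def choose_tools(query: str) -> list[str]:
    """Agentic decision: pick zero, one, or both tools for this query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    tools = []
    if words & PRIVATE_CUES:
        tools.append("vector_db_search")
    if words & FRESH_CUES:
        tools.append("web_search")
    return tools  # an empty list means "answer without retrieval"


# Information retrieval: stand-in tools returning canned snippets (hypothetical data).
def vector_db_search(query: str) -> list[dict]:
    return [{"source": "vector_db", "text": "Refunds are accepted within 30 days."}]


def web_search(query: str) -> list[dict]:
    return [{"source": "web", "text": "Placeholder web result for: " + query}]


TOOLS = {"vector_db_search": vector_db_search, "web_search": web_search}


def build_augmented_prompt(query: str, contexts: list[dict]) -> str:
    """Context augmentation: merge the query with retrieved snippets into one prompt."""
    if not contexts:
        return f"Question: {query}"
    numbered = "\n".join(
        f"[{i}] ({c['source']}) {c['text']}" for i, c in enumerate(contexts, start=1)
    )
    return (
        "Answer using only the context below and cite sources by [number].\n\n"
        f"Context:\n{numbered}\n\nQuestion: {query}"
    )


def answer(query: str, generate: Callable[[str], str]) -> str:
    """Route, retrieve, augment, then hand the prompt to an LLM callable."""
    contexts: list[dict] = []
    for name in choose_tools(query):
        contexts.extend(TOOLS[name](query))
    return generate(build_augmented_prompt(query, contexts))


if __name__ == "__main__":
    # Echo "LLM" so the augmented prompt is visible; swap in a real model call.
    print(answer("What is our refund policy?", generate=lambda prompt: prompt))
```

Numbering the snippets in the prompt lets the model cite which source backs each claim, which is what makes the final answer "well-supported" rather than merely plausible.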

By orchestrating these components, we create a system that can answer complex questions by intelligently seeking out and synthesizing information from multiple sources. Now that we understand the “what” and “why,” let’s move on to the “how.”

3. Setting Up the Working Environment

With the architecture mapped out, it’s time to get our hands dirty and set up the foundation for our application. In this section, we’ll configure our project, install the necessary libraries, and launch the external services our agent will rely on.

Before you begin, make sure you have the following installed on your system:

Python 3.10+ (the official MCP Python SDK requires 3.10 or newer) and pip

Docker (for running our vector database)
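If you want to confirm both prerequisites before continuing, a small check from Python works. This is a hypothetical helper, not one of the project files; the default minimum of Python 3.10 is an assumption based on the official MCP Python SDK (`mcp` package) requiring 3.10 or newer, so adjust it if your setup differs. It runs `docker info` rather than only looking for the CLI, because that command fails when the Docker daemon isn't running.

```python
import shutil
import subprocess
import sys


def python_ok(minimum: tuple[int, int] = (3, 10)) -> bool:
    """True if the running interpreter is at least `minimum` (major, minor)."""
    return sys.version_info[:2] >= minimum


def docker_available() -> bool:
    """True if a `docker` executable is on PATH and the daemon answers `docker info`."""
    docker = shutil.which("docker")
    if docker is None:
        return False
    try:
        result = subprocess.run([docker, "info"], capture_output=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0


if __name__ == "__main__":
    print(f"Python {sys.version.split()[0]}: {'OK' if python_ok() else 'too old'}")
    print(f"Docker: {'OK' if docker_available() else 'not found or daemon not running'}")
```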

Let’s start by organizing our files. A clean structure makes the project easier to manage. Create a root folder for your project (e.g., mcp-agentic-rag) and create the following files inside it. We’ll populate them as we go.

/mcp-agentic-rag

|-- .env

|-- mcp_server.py # Our main application and server logic

|-- rag_app.py # Our RAG-specific code and data

Now, open your mcp_server.py file and add the following code. This script will handle imports, load environment variables, and define the core configuration constants for our service.


Subscribe to To Data & Beyond to keep reading this post and get 7 days of free access to the full post archives.