Building a Multimodal Agentic System with CrewAI: Step-by-Step Guide [1/3]

How to Build a Multimodal Agentic Workflow from Scratch?

In this hands-on tutorial, you’ll learn how to build a powerful multimodal agentic workflow from the ground up. Instead of depending on a single-function AI, we’ll create a system where specialized agents work together to tackle complex tasks across text, images, speech, and web search.

You’ll design an architecture that includes a Task Router Agent to assign tasks smartly, and a Task Executor Agent equipped with tools like text-to-image, text-to-speech, image-to-text, and web search.

Using frameworks like CrewAI and fast, reliable APIs such as Groq, Replicate, and Tavily, this guide walks you through each step needed to build the next generation of intelligent, interactive AI applications.

Table of Contents:

Introduction to Multimodal Agentic Workflows

Multimodal Agent Application Architecture

Setting Up the Working Environment

Define Project Structure

Defining Agents and Their Tasks

Define the Tools of the Agents

Assembling and Running the Workflow

Testing the Workflow with Real-World Queries

Conclusion: Recap and What’s Next?

1. Introduction to Multimodal Agentic Workflows

Multimodal agentic workflows refer to systems where AI agents can autonomously process, reason over, and take actions using multiple data modalities — such as text, images, audio, video, or structured data — within a goal-driven workflow.

Think of them as AI assistants capable of understanding and working across different types of input/output (like a document, a screenshot, and a voice command) while coordinating steps toward a specific task — like summarizing a video, answering questions about a chart, or creating a report from a mix of image and text data.

At its core, a multimodal AI system can handle diverse data inputs, much like how humans use multiple senses to understand the world.[1] When this capability is combined with an “agentic workflow,” where autonomous AI agents collaborate to perform tasks, the result is a powerful and flexible system.[2][3]

These workflows can tackle more complex problems than single-modal systems and can unlock applications that were once in the realm of science fiction.[1]

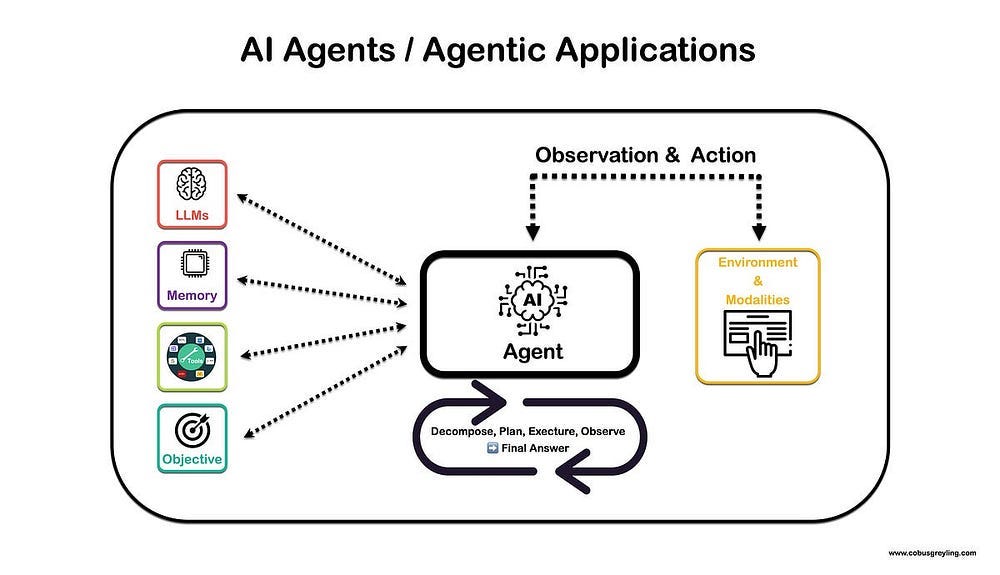

2. Multimodal Agent Application Architecture

The architecture of this application is designed to be a modular and scalable solution for handling complex user queries.[4] Let’s break down each component to understand its role in this intelligent system.

1. The “Brains”: Task Router Agent

When a user submits a query, it first goes to the Task Router Agent. Think of this agent as the project manager or the “brains” of the operation. Its primary responsibility is to analyze the user’s request and determine the best tool for the job.[5] For example, if the user asks for an image to be created, the Task Router selects the text-to-image tool. This routing step is what keeps the workflow efficient and accurate.[6][7]

2. The “Doer”: Task Executor Agent

Once the Task Router Agent has selected the appropriate tool, it passes the task to the Task Executor Agent. This agent is the “doer” in the system; its job is to take the instructions from the router and use the selected tool to perform the action.[8] This separation of concerns, where the router decides what to do and the executor does it, creates a more organized and effective workflow.[5]
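The router/executor split described above can be sketched in plain Python. This is only an illustration of the separation of concerns, not the CrewAI implementation built later in the guide: the function names `route` and `execute`, the stub tools, and the keyword rule (which stands in for an LLM's decision) are all assumptions made for the example.

```python
from typing import Callable, Dict


# Hypothetical stand-in tools; real tools would call external models or APIs.
def text_to_image(prompt: str) -> str:
    return f"[image generated for: {prompt}]"


def web_search(query: str) -> str:
    return f"[search results for: {query}]"


TOOLS: Dict[str, Callable[[str], str]] = {
    "text_to_image": text_to_image,
    "web_search": web_search,
}


def route(query: str) -> str:
    """Router: decide WHICH tool fits the query.

    A keyword rule stands in here for the LLM-based decision.
    """
    lowered = query.lower()
    if "image" in lowered or "draw" in lowered:
        return "text_to_image"
    return "web_search"


def execute(tool_name: str, query: str) -> str:
    """Executor: run the tool the router selected, without re-deciding."""
    return TOOLS[tool_name](query)


if __name__ == "__main__":
    user_query = "Create an image of a red fox"
    print(execute(route(user_query), user_query))
```

Keeping `route` free of side effects and `execute` free of decisions means either half can be swapped out (for example, replacing the keyword rule with a model call) without touching the other.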

3. A Versatile Toolbox

The power of this agentic workflow lies in the variety of tools the Task Executor Agent has at its disposal. In our architecture, these include:

Text-to-image tool: Creates an image from a text description.

Text-to-speech tool: Converts written text into spoken words.

Image-to-text tool: Analyzes an image and provides a text description of its content.

Web search tool: Searches the Internet for information.

This collection of tools allows the system to handle a wide range of multimodal tasks.
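One way to picture the toolbox is as a small registry that records what each tool consumes and produces. The sketch below is hypothetical: the `Tool` dataclass, the `TOOLBOX` name, the stub behaviour, and the input/output labels (including treating web search as text in, text out) are assumptions for illustration, not part of CrewAI or the code developed later in this series.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Tool:
    name: str
    input_kind: str   # modality the tool consumes (assumed label)
    output_kind: str  # modality the tool produces (assumed label)
    run: Callable[[str], str]


def _stub(label: str) -> Callable[[str], str]:
    # Placeholder behaviour: a real tool would call an external model or API.
    return lambda payload: f"<{label} output for {payload!r}>"


# The four tools listed above, keyed by name.
TOOLBOX: Dict[str, Tool] = {
    tool.name: tool
    for tool in [
        Tool("text_to_image", "text", "image", _stub("text_to_image")),
        Tool("text_to_speech", "text", "audio", _stub("text_to_speech")),
        Tool("image_to_text", "image", "text", _stub("image_to_text")),
        Tool("web_search", "text", "text", _stub("web_search")),
    ]
}


def tools_producing(kind: str) -> List[str]:
    """Return the names of tools whose output is of the given kind, sorted."""
    return sorted(name for name, tool in TOOLBOX.items() if tool.output_kind == kind)
```

Recording modalities alongside each tool makes it easy to ask questions such as "which tools can return text?" when deciding how to present a result to the user.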

After the Task Executor Agent has completed its task using one of the tools, the output is formulated into a response and sent back to the user. This completes the workflow, from the initial query to the final, value-added result.

3. Setting Up the Working Environment

Before we can start building our sophisticated multimodal agent, we need to prepare our digital workspace. A clean and well-organized environment is fundamental to any successful software project. It ensures that our code runs consistently and that we can manage our dependencies and secret keys securely.

In this section, we’ll walk through creating a virtual environment, installing the necessary Python packages, and setting up our configuration to handle API keys safely.
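As a rough illustration of handling API keys safely, the sketch below reads them from environment variables and fails early if any are missing, so that no key is hard-coded in source files. The variable names (`GROQ_API_KEY`, `REPLICATE_API_TOKEN`, `TAVILY_API_KEY`) and the `load_api_keys` helper are assumptions for this example; use whatever names your providers and configuration actually require.

```python
import os
from typing import Dict, Mapping

# Assumed variable names for the three services mentioned in this guide.
REQUIRED_KEYS = ("GROQ_API_KEY", "REPLICATE_API_TOKEN", "TAVILY_API_KEY")


def load_api_keys(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Collect the required keys from `env`, raising if any are absent or empty."""
    missing = [name for name in REQUIRED_KEYS if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_KEYS}
```

Passing the environment in as a parameter keeps the function easy to test with a plain dictionary instead of real credentials.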

Step 1: Create a Virtual Environment

First, let’s create an isolated space for our project. A virtual environment is a self-contained directory that houses a specific version of Python, along with all the necessary packages for a particular project. This prevents conflicts between the dependencies of different projects.
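Once an environment has been created and activated, the isolation can be checked from Python itself. The helper below is a small sketch (the name `in_virtualenv` is made up for this example) that relies on the standard library's `sys.prefix` and `sys.base_prefix`, which differ when the interpreter is running inside a virtual environment created with `venv`.

```python
import sys


def in_virtualenv() -> bool:
    # Inside a venv, sys.prefix points at the environment directory while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Running inside a virtual environment:", in_virtualenv())
```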

Open your terminal or command prompt and run the following command to create a new virtual environment named crew-venv:

python -m venv crew-venv

To activate it, use the appropriate command for your operating system:

On Windows:
