“Imagine you could collect pictures of anything you love: flowers, shoes, cars, or, in my case, birds. You stumble upon a website bursting with hundreds of those images, and naturally, you’d want to save them all to your device, right?

But let’s be honest: who has the time to click ‘Save As’ hundreds of times? And what if you could gather more than just pictures? In my case, that meant information about each bird species; in another case, it might be the models of the cars.

This is where web scraping comes into play! In this article, I’ll take you through my 10-step adventure of scraping beautiful bird images from the African Bird Club’s website using BeautifulSoup — without eating a single bird along the way!”

Table of Contents:

What is Web Scraping

Web Scraping with BeautifulSoup: 10 Easy Steps

Step 1: Installing the required libraries

Step 2: Setting up the links to the website

Step 3: Making your requests look “Real”

Step 4: Finding the Gold: Where is the Data Hiding?

Step 5: Accessing and Preparing the Webpage for Scraping

Step 6: Locating the Data

Step 7: Extracting the Data

Step 8: Downloading the Data

Step 9: Storing the Data in a Useful Format

Step 10: Viewing the Stored Data

Conclusion

My New E-Book: Efficient Python for Data Scientists

I am happy to announce the publication of my new e-book, Efficient Python for Data Scientists. It is your practical companion to mastering the art of writing clean, optimized, and high-performing Python code for data science. In this book, you'll explore actionable insights and strategies to transform your Python workflows, streamline data analysis, and maximize the potential of libraries like Pandas.

1. What is Web Scraping

Web scraping is the process of automating the collection of information — images, text, or any other data — from websites using programming languages like Python or JavaScript.

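In Python, libraries such as BeautifulSoup (for parsing HTML) and Selenium (for dynamic pages that load their content with JavaScript) make this automation straightforward. BeautifulSoup itself does not download pages, so it is usually paired with the requests library, which fetches the HTML.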

Why BeautifulSoup? Think of it as an “internet librarian” that helps you locate what you’re looking for and organizes it in a neat, accessible way.

It can quickly isolate specific elements, such as <img> tags and their image URLs or the text inside a heading, letting you gather precise pieces of information from websites and turn unstructured web data into well-organized information.
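As a minimal sketch of this idea, the snippet below parses a small, made-up HTML fragment (the markup, heading, and image paths are hypothetical, not taken from any real site) and pulls out one heading's text and every image URL using BeautifulSoup's find and find_all.

```python
from bs4 import BeautifulSoup

# A tiny, hypothetical HTML fragment standing in for a real page
html = """
<div class="gallery">
  <h2>Garden birds</h2>
  <img src="/images/robin.jpg" alt="Robin">
  <img src="/images/sparrow.jpg" alt="Sparrow">
</div>
"""

# Parse the markup with Python's built-in HTML parser
soup = BeautifulSoup(html, "html.parser")

# Text content of the first <h2> tag, with surrounding whitespace removed
title = soup.find("h2").get_text(strip=True)

# The src attribute of every <img> tag, in document order
image_urls = [img["src"] for img in soup.find_all("img")]

print(title)       # Garden birds
print(image_urls)  # ['/images/robin.jpg', '/images/sparrow.jpg']
```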

Why Bother Scraping Bird Data? (Or Any Data, Really)

But why scrape data, especially images of birds, in the first place? For my project, collecting images of bird species in Kenya supports my broader goal: developing a pest control system to mitigate the impact of avian pests on agriculture.

Beyond my project, there are many reasons why web scraping can be valuable. You might want to:

Create a Personal Collection: Whether it’s images of wildlife, rare cars, or architecture, web scraping lets you efficiently gather large collections without the hassle of manual downloading.

Optimize Business Performance: Collecting data from e-commerce sites, such as price trends, availability, and customer reviews, can help businesses and consumers make more informed decisions.

Build Training Data for Machine Learning: For data science projects, web scraping is essential for creating datasets for image recognition, text analysis, or other applications.

The possibilities with web scraping are vast. Whether you’re motivated by a personal hobby, a research project, or a larger mission, having this skill up your sleeve lets you gather the information you need efficiently.

Now let’s get into web scraping in 10 steps in the next section.

2. Web Scraping with BeautifulSoup: 10 Easy Steps

Step 1: Installing the required libraries

If you haven’t already, you’ll need to install BeautifulSoup and requests:

    # Install the necessary libraries (the leading "!" runs the command from a Jupyter notebook; omit it in a terminal)
    !pip install beautifulsoup4 requests

Now, let’s get into the actual code!
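If you want to confirm the installation worked, a quick check like the one below (a small sketch, not part of the original walkthrough) imports both libraries and prints their versions; the exact version numbers will depend on your environment.

```python
# Sanity check: both libraries should import without errors
import bs4
import requests

# Print the installed versions (values vary by environment)
print("beautifulsoup4", bs4.__version__)
print("requests", requests.__version__)
```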

Step 2: Setting up the links to the website

The first thing our code needs is the link(s) to the website where we’ll access the data. I used two variables to keep the code flexible and clean.

    # Set the link(s) to the pages to retrieve information from
    abc_base_url = 'https://www.africanbirdclub.org'  # Remember to replace with the link to your website
    abc_species_info_url = f'{abc_base_url}/afbid/search/category/-/-/28'

Here’s the breakdown:

abc_base_url: This variable holds the main address of the African Bird Club site. Think of it as the base structure, or foundation, on which we’ll build other specific links.

abc_species_info_url: This variable points directly to the page with information on Kenyan bird species. You only need it if the data you want lives on a page other than the site’s main page.

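Separating the two adds flexibility to our code: if you want to scrape another page on the same site, you only change the path, and relative links found on the page can be built on top of abc_base_url.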
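To illustrate that flexibility, here is a small sketch using urljoin from Python's standard library to turn a relative link into a full URL on top of abc_base_url. The relative path is the species link from the HTML inspected in Step 4; using urljoin rather than string concatenation is my choice, not necessarily what the original code does.

```python
from urllib.parse import urljoin

abc_base_url = "https://www.africanbirdclub.org"
abc_species_info_url = f"{abc_base_url}/afbid/search/category/-/-/28"

# A relative link of the kind that appears in the page's HTML
relative_href = "/afbid/search/browse/species/1503/28"

# urljoin resolves the relative path against the base URL
species_page_url = urljoin(abc_base_url, relative_href)
print(species_page_url)
# https://www.africanbirdclub.org/afbid/search/browse/species/1503/28
```

Because the path starts with "/", urljoin replaces whatever path the base URL had, so the same call works whether you join against abc_base_url or abc_species_info_url.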

Step 3: Making your requests look “Real”

When you request data from a website, it often helps to present yourself as a regular browser user rather than an automated bot, because many websites block requests that look like they come from scrapers in order to protect their content.

To make our requests look legitimate, we send HTTP headers along with them, in particular the User-Agent header, which identifies the software making the request.

    # Setting headers to mimic a legitimate user
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36"
    }

The User-Agent acts like an introduction when you knock on a website’s door. It tells the site who you are; in this case, it says you’re using a standard web browser (Chrome on Windows).

By doing this, we make it less likely that the site will block our access.
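Here is a minimal sketch of how these headers travel with a request. To keep it runnable offline, it builds a prepared request with requests.Request(...).prepare() and inspects it instead of sending it; the commented-out lines show the usual requests.get call, where the 10-second timeout is an assumed value, not one from the original article.

```python
import requests

headers = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/89.0.4389.82 Safari/537.36"
    )
}

abc_base_url = "https://www.africanbirdclub.org"
abc_species_info_url = f"{abc_base_url}/afbid/search/category/-/-/28"

# Build (but do not send) a GET request to see exactly which headers would go out
prepared = requests.Request("GET", abc_species_info_url, headers=headers).prepare()
print(prepared.method, prepared.url)
print(prepared.headers["User-Agent"])

# To actually fetch the page, pass the same headers to requests.get
# (timeout=10 is an assumed value):
# response = requests.get(abc_species_info_url, headers=headers, timeout=10)
# response.raise_for_status()  # raise an error on 4xx/5xx responses
# html = response.text
```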

With these foundations, we’re all set to dive deeper into scraping bird species information from the site. Trust the process! (And yes, the process does know that you are trusting it).

Step 4: Finding the Gold: Where is the Data Hiding?

Next, explore the HTML structure of the webpage to locate the information we want. Think of it like following a treasure map: the more familiar we are with the map, the easier it is to find the treasure (data) we need.

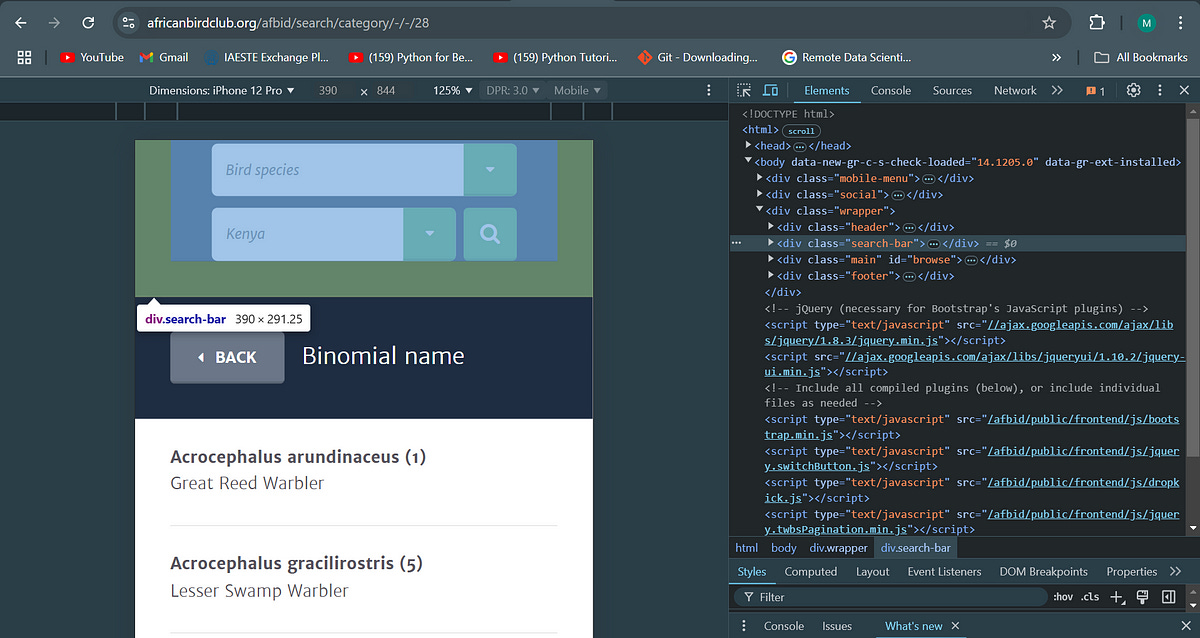

To start, open the webpage you want to scrape and right-click on it. Select “Inspect” or “Inspect Element” to open the Developer Console, a tool that reveals the HTML code structure — our map — behind the website. You should see something that looks like this:

On the African Bird Club’s website, in the Developer Console to the right, you’ll see the HTML elements arranged like a family tree, where each “branch” represents a different part of the webpage.

For example, a <div> tag may contain a section of text, an image, or even multiple other tags within it. This nested structure might look complicated, but with a bit of practice, you’ll get comfortable navigating it!

Hovering your cursor over different elements in the HTML view will highlight the corresponding areas on the webpage.

For instance, hovering over a <div> with the class "search-bar" highlights the search bar.

In my case, the list of names of birds that I wanted was located within a <div> tag with the class panel-inner as shown below:

Inside this <div> tag, there is a <ul> tag, which holds an unordered list of items, and each bird is listed within an <li> tag, structured as shown below:

    <li>
      <a href="/afbid/search/browse/species/1503/28">
        <h5>Acrocephalus arundinaceus <b>(1)</b></h5>
        <span>Great Reed Warbler</span>
      </a>
    </li>

Here:

The <a> tag contains the href link that directs to a page with the image of the bird.

The <h5> tag contains the scientific name of each species.

The <span> tag contains the common name.
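Putting this structure to work, here is a sketch that parses a copy of the <li> above, wrapped in the <div class="panel-inner"> and <ul> described earlier, and extracts each bird's scientific name, common name, and link. It is an assumption-based illustration: it uses an inline string rather than a live page, and it leaves out the bracketed number in the <b> tag because its meaning isn't covered here.

```python
from bs4 import BeautifulSoup

# Sample markup mirroring the structure described above:
# div.panel-inner -> ul -> one li per bird
html = """
<div class="panel-inner">
  <ul>
    <li>
      <a href="/afbid/search/browse/species/1503/28">
        <h5>Acrocephalus arundinaceus <b>(1)</b></h5>
        <span>Great Reed Warbler</span>
      </a>
    </li>
  </ul>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

birds = []
# CSS selector: every <li> inside a <ul> inside a <div class="panel-inner">
for li in soup.select("div.panel-inner ul li"):
    link = li.find("a")
    h5 = link.find("h5")
    # The first text node of <h5> is the scientific name; the <b> part is skipped
    scientific_name = h5.contents[0].strip()
    common_name = link.find("span").get_text(strip=True)
    birds.append({
        "scientific_name": scientific_name,
        "common_name": common_name,
        "href": link["href"],
    })

print(birds)
```

On the real site, you would pass the downloaded page's HTML (for example, response.text from requests) to BeautifulSoup instead of the inline string, and the same loop would collect every bird in the list.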

In short, understanding the HTML structure is key to locating the data you want to scrape. Whether you’re saving bird images, flower names, or product descriptions, this process helps you pinpoint and extract specific information from any webpage.


Subscribe to To Data & Beyond to keep reading this post and get 7 days of free access to the full post archives.