6 Kaggle Notebooks to Build RAG Applications In LangChain

Unlock RAG with LangChain: 6 Must-Try Kaggle Notebooks

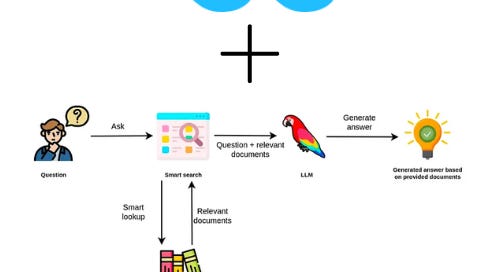

Retrieval-augmented generation (RAG) is a framework that enhances large language models (LLMs) by retrieving relevant content from external data sources and supplying it to the model alongside the query, enabling more accurate and context-aware responses for tasks like question answering and document analysis.

This article presents six Kaggle notebooks that guide you through building RAG applications using LangChain. You’ll start with document loading and splitting, learn how to implement vector databases and text embeddings, and explore information retrieval techniques. You’ll then build systems for answering questions from documents and create a chatbot that interacts with files.

This article is perfect for developers, data scientists, and AI enthusiasts who want practical, hands-on experience in applying RAG and LangChain to real-world use cases.

Table of Contents:

Hands-On LangChain for LLM Applications Development: Documents Loading

Hands-On LangChain for LLM Applications Development: Documents Splitting

Hands-On LangChain for LLM Applications Development: Vector Database & Text Embeddings

Hands-On LangChain for LLM Applications Development: Information Retrieval

Hands-On LangChain for LLMs App: Answering Questions From Documents


1. Hands-On LangChain for LLM Applications Development: Documents Loading

To create an application where you can chat with your data, you first have to load that data into a format the application can work with. That’s where LangChain document loaders come into play.

LangChain has over 80 different types of document loaders, and in this notebook, we’ll cover a few of the most important ones and get you comfortable with the concept in general.

We will end the notebook with a practical tip for using document loaders efficiently in your Large Language Model (LLM) applications.
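To make the idea of a loader concrete, here is a minimal sketch in plain Python. It does not use LangChain itself: the `Document` class and the `load_text_file` and `load_directory` helpers are hypothetical stand-ins that only mimic the general pattern of a loader, which is to read a source and return documents carrying text plus metadata. The real loaders covered in the notebook handle many more formats.

```python
from dataclasses import dataclass, field
from pathlib import Path


@dataclass
class Document:
    """Hypothetical stand-in for a loaded document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def load_text_file(path: str, encoding: str = "utf-8") -> list:
    """Read one text file and wrap its content in a single Document."""
    text = Path(path).read_text(encoding=encoding)
    # Recording the source lets later steps trace an answer back to its file.
    return [Document(page_content=text, metadata={"source": str(path)})]


def load_directory(directory: str, pattern: str = "*.txt") -> list:
    """Load every file in a directory that matches the glob pattern."""
    docs = []
    for file_path in sorted(Path(directory).glob(pattern)):
        docs.extend(load_text_file(str(file_path)))
    return docs
```

Calling `load_directory("my_notes")` (an illustrative folder name) would return one `Document` per `.txt` file, each tagged with the path it came from. Keeping the source in the metadata is what later allows a question-answering system to cite where an answer originated.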

2. Hands-On LangChain for LLM Applications Development: Documents Splitting

Once you’ve loaded documents, you’ll often want to transform them to better suit your application. The simplest example is splitting a long document into smaller chunks that fit into your model’s context window.

As simple as this sounds, there is a lot of potential complexity here. Ideally, you want to keep semantically related pieces of text together.

LangChain has a number of built-in document transformers that make it easy to split, combine, filter, and otherwise manipulate documents. In this notebook, we will explore the importance of document splitting, survey the available LangChain text splitters, and examine four of them in depth.
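The goal of keeping related text together can be illustrated with a small sketch in plain Python. This is not LangChain's implementation: `split_text` is a hypothetical helper that tries the coarsest separator first (paragraphs), then falls back to finer ones (lines, words) only for pieces that are still too long. The default `chunk_size` and separators are assumptions for illustration, and chunk overlap is left out to keep the sketch short.

```python
def split_text(text: str, chunk_size: int = 200,
               separators: tuple = ("\n\n", "\n", " ")) -> list:
    """Split text into chunks of at most chunk_size characters,
    preferring paragraph breaks, then line breaks, then spaces."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    if not separators:
        # No separator left: fall back to a hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        # Greedily merge neighbouring pieces while they still fit in one chunk.
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long, so split it on a finer separator.
            chunks.extend(split_text(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return chunks
```

With a small `chunk_size`, short paragraphs stay intact as their own chunks, while a long paragraph is broken at word boundaries rather than mid-word. The design choice is that a coarser separator is always preferred, so a finer one is used only when a piece cannot fit otherwise.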
